You hit publish. You wait a few days. You search Google for your carefully crafted article—and nothing. Not on page one. Not on page ten. Not anywhere. You type in the exact title, and still, Google acts like your content doesn't exist.

If this sounds familiar, you're not alone. Plenty of content creators run into this frustrating reality: great content that simply won't show up in Google's index. The good news? This isn't random bad luck or algorithmic punishment. In most cases, it's a specific, diagnosable problem with a clear solution.

This guide walks you through the most common reasons your content isn't appearing in Google, from technical blockers to discovery gaps to content quality, and more importantly, how to fix each one. Most of these issues can be resolved within days to weeks once you know what to look for. Let's start by understanding how Google actually finds and stores your content in the first place.

The Three-Stage Journey from Publish to Search Results

Here's what many content creators don't realize: publishing content on your website doesn't automatically mean Google will find it, store it, or show it in search results. Your content must successfully pass through three distinct stages before anyone can discover it through search.

Crawling: Google's crawler, Googlebot, must first discover and fetch your URL. It finds new pages by following links from pages it already knows about, reading sitemaps you've submitted, or acting on indexing requests you make in Search Console. If Googlebot never discovers your URL, it can't move to the next stage.

Indexing: Once discovered, Google processes your page—reading the content, analyzing the structure, and deciding whether to store it in their massive index. This is where many pages fail. Google might crawl your page but choose not to index it based on technical signals, content quality, or duplicate content concerns.

Ranking: Only after successful indexing does Google determine where your page should appear in search results. A page can be indexed but rank so poorly that you'll never find it through normal searches.

The critical insight? Your content can fail at any of these three stages. When you can't find your content in Google, it's usually stuck at crawling or indexing—not ranking. Understanding which stage is causing the problem completely changes how you approach the fix.

Checking Your Actual Indexing Status

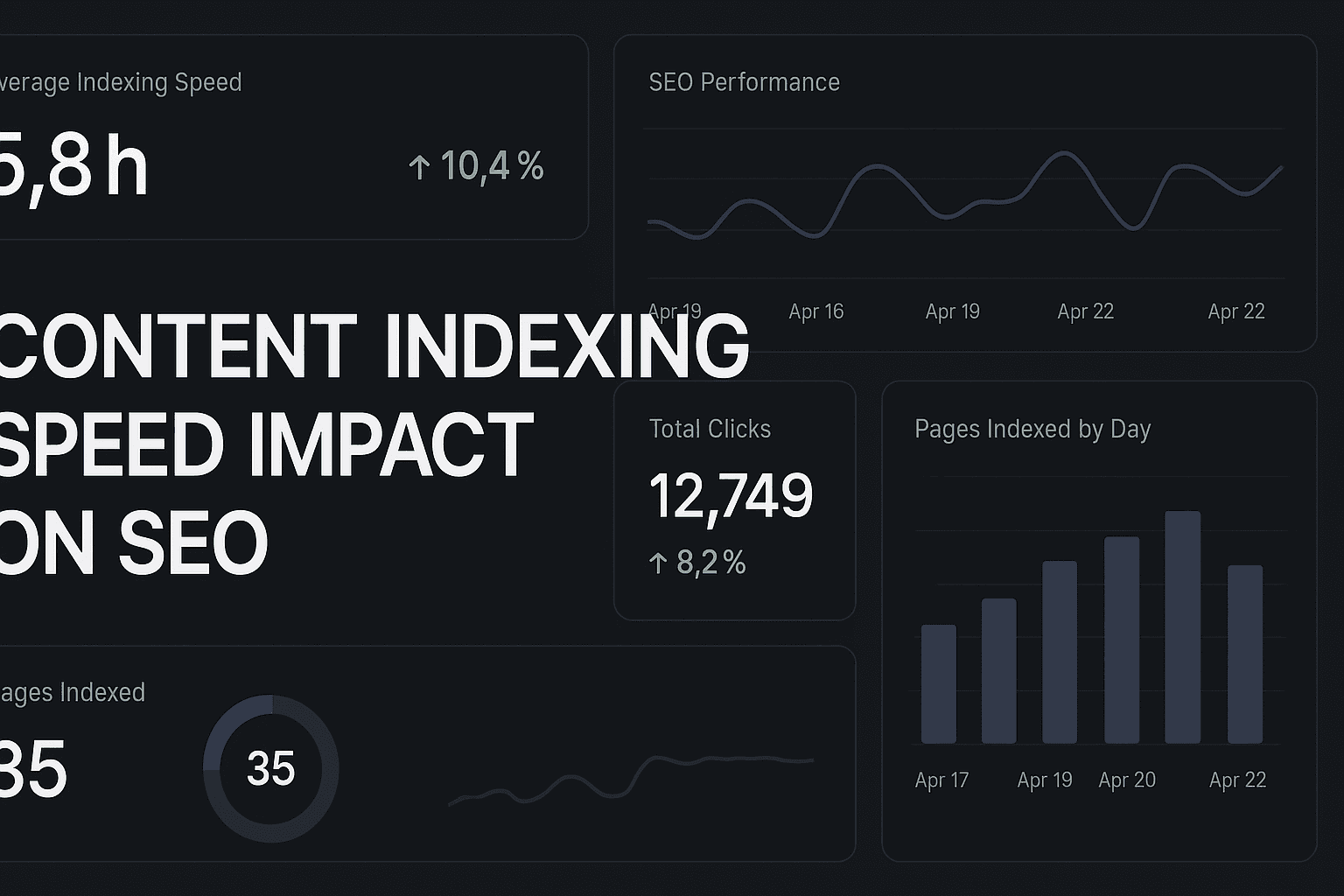

Before diagnosing problems, you need to know your content's current status. For any site you've verified in Google Search Console, the URL Inspection tool gives you the definitive answer. Paste in your URL, and Google tells you exactly what's happening: whether the page has been crawled, whether it's indexed, and whether any issues are preventing indexing.
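If you need to check more than a handful of URLs, the same information is exposed through the Search Console URL Inspection API. The sketch below doesn't call the API; it only shows how you might condense one response into a short summary. The field names (`indexStatusResult`, `robotsTxtState`, `indexingState`, `userCanonical`, `googleCanonical`) reflect our understanding of the API's response format and should be checked against Google's reference, and the sample response is invented for illustration.

```python
# Sketch only: field names follow our understanding of the URL Inspection API's
# indexStatusResult object; verify them against Google's current reference.

def summarize_inspection(response: dict) -> dict:
    """Condense one URL Inspection API response into a short status report."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    problems = []

    # Crawl-stage blocker: robots.txt disallows Googlebot from fetching the URL.
    if status.get("robotsTxtState") == "DISALLOWED":
        problems.append("blocked by robots.txt")

    # Index-stage blocker: noindex in a meta tag or an X-Robots-Tag header.
    if status.get("indexingState") in ("BLOCKED_BY_META_TAG", "BLOCKED_BY_HTTP_HEADER"):
        problems.append("noindex directive")

    # Canonical mismatch: the page declares one canonical, Google picked another.
    declared = status.get("userCanonical")
    chosen = status.get("googleCanonical")
    if declared and chosen and declared != chosen:
        problems.append(f"Google chose a different canonical: {chosen}")

    return {
        "verdict": status.get("verdict", "UNKNOWN"),
        "coverage": status.get("coverageState", "unknown"),
        "problems": problems,
    }


# Hypothetical response for illustration only (not real API output).
sample = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "NEUTRAL",
            "coverageState": "Excluded by 'noindex' tag",
            "robotsTxtState": "ALLOWED",
            "indexingState": "BLOCKED_BY_META_TAG",
            "userCanonical": "https://example.com/guide",
            "googleCanonical": "https://example.com/guide",
        }
    }
}
print(summarize_inspection(sample))
```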

This tool is your starting point for every indexing investigation. It shows you Google's perspective on your page—not what you think should be happening, but what's actually occurring in Google's systems.

Technical Roadblocks That Stop Googlebot in Its Tracks

One of the most common reasons content doesn't appear in Google? You've accidentally told Google not to crawl or index it. This happens more often than you'd think, especially on sites that have been through migrations or redesigns, or have had multiple developers making changes over time.

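Robots.txt Blocking: Your robots.txt file sits at your domain root and tells search engines which parts of your site they're allowed to crawl. One misplaced Disallow rule can block entire sections of your site. Many sites accidentally block important content when trying to prevent crawling of admin pages or duplicate content. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can occasionally still appear in results (usually without a description) if other pages link to it, but Google can't read its content.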

Check your robots.txt file by visiting yourdomain.com/robots.txt. Look for any "Disallow" rules that might be blocking the URLs you want indexed. A common mistake is blocking entire directories when you meant to block specific file types or individual pages.
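For a quick local check, Python's standard-library `urllib.robotparser` can tell you whether a given URL is disallowed. This is a sketch with a made-up robots.txt, not a replica of Googlebot: Python's parser applies the first matching rule and ignores `*` and `$` wildcards, while Google applies the most specific match, so confirm anything surprising in Search Console. The example reproduces the directory mistake described above, because Disallow rules match by prefix.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # record a load time; can_fetch() answers False until one is set
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt: the author meant to hide /blog-drafts/ but wrote /blog,
# and because rules are prefix matches, every path starting with /blog is blocked.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog
"""

urls = [
    "https://example.com/blog/my-new-article",
    "https://example.com/blogging-checklist",
    "https://example.com/about",
]
print(blocked_urls(ROBOTS_TXT, urls))
# ['https://example.com/blog/my-new-article', 'https://example.com/blogging-checklist']
```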

Noindex Meta Tags: The noindex directive is a powerful tool that explicitly tells search engines "do not index this page." It's useful for pages you don't want in search results—like thank-you pages or internal search results. But if it's on pages you do want indexed, it's a complete blocker.

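These tags can appear in two places: in the HTML head section as a meta tag, or in the HTTP response headers as an X-Robots-Tag. Both have the same effect. One catch: Google only sees a noindex directive if it is allowed to crawl the page, so a noindex on a URL that robots.txt also blocks will never be read. Developers sometimes add noindex tags to staging sites or during development, then forget to remove them before launch.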
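A rough way to check both locations at once is to scan the page's HTML for robots meta tags and its response headers for `X-Robots-Tag`. The helper below (the names `RobotsMetaParser` and `noindex_sources` are our own) uses only Python's standard library. It assumes you've already fetched the page, and it ignores subtleties such as the `none` directive, which also implies noindex.

```python
import re
from html.parser import HTMLParser

NOINDEX = re.compile(r"\bnoindex\b", re.IGNORECASE)


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.contents: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            self.contents.append(attributes.get("content", ""))


def noindex_sources(html: str, headers: dict[str, str]) -> list[str]:
    """Report where a noindex directive appears: meta tag, HTTP header, or both."""
    sources = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if any(NOINDEX.search(content) for content in parser.contents):
        sources.append("meta tag")
    # Header names are case-insensitive; values like "googlebot: noindex" also match.
    if any(name.lower() == "x-robots-tag" and NOINDEX.search(value)
           for name, value in headers.items()):
        sources.append("X-Robots-Tag header")
    return sources


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(noindex_sources(page, {"X-Robots-Tag": "noindex"}))  # ['meta tag', 'X-Robots-Tag header']
```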

Canonical Tag Confusion: Canonical tags tell Google which version of a page is the "main" one when you have similar or duplicate content. They're essential for managing duplicate content issues. But when misconfigured, they can point Google away from the page you want indexed.

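If your canonical tag points to a different URL (or worse, to a non-existent page), Google may treat that URL as the primary version and leave your actual content out of the index. Google treats canonical tags as a strong hint rather than a strict directive, but a wrong one is often enough to cause problems. This is especially common on sites using content management systems where canonical tags are auto-generated based on incorrect settings.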
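To spot a misdirected canonical, you can extract the `rel="canonical"` link from a page and compare it with the page's own URL. This is a hedged sketch using Python's standard library; `check_canonical` is a hypothetical helper, and its normalization is deliberately minimal (it lowercases the scheme and host and drops fragments, but treats `/guide` and `/guide/` as different URLs).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class CanonicalParser(HTMLParser):
    """Collect href values from <link rel="canonical"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def normalize(url: str) -> str:
    """Lowercase scheme and host and drop any #fragment; the path is left as-is."""
    parts = urlsplit(url)
    return parts._replace(scheme=parts.scheme.lower(), netloc=parts.netloc.lower(),
                          fragment="").geturl()


def check_canonical(page_url: str, html: str) -> str:
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.hrefs:
        return "no canonical tag"
    if len(set(parser.hrefs)) > 1:
        return "conflicting canonical tags"
    # Resolve relative hrefs such as "/old-guide" against the page URL.
    target = urljoin(page_url, parser.hrefs[0])
    if normalize(target) == normalize(page_url):
        return "self-referencing"
    return f"points elsewhere: {target}"


print(check_canonical("https://example.com/guide", '<link rel="canonical" href="/old-guide">'))
# points elsewhere: https://example.com/old-guide
```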

Once identified, the fix for these technical barriers is usually straightforward: remove the blocking robots.txt rule, delete the noindex tag, or correct the canonical URL. Then use Google Search Console to request re-indexing. In many cases, Google will index the page within days.

When Google Can't Find Your Content at All

Even without technical barriers, Google might simply never discover your content. This happens when pages are isolated from your site's link structure or when you're publishing faster than Google can keep up with your site.

The Crawl Budget Reality: Google doesn't crawl every page on every website every day. Each site effectively gets a "crawl budget": roughly, how many pages Googlebot will crawl on your site during a given timeframe. Google's own guidance says this mainly matters for very large sites, or for sites with many thousands of pages whose content changes daily. On a site like that, new articles published in high volume might wait in the discovery queue for weeks.

Crawl budget becomes a real constraint when your site has technical issues that waste crawling resources, when you have massive amounts of low-value content, or when your server response times are slow. Google will crawl fewer pages if the experience is inefficient.
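Your server access logs show where Googlebot actually spends its crawl activity. The sketch below assumes the common "combined" log format used by Apache and Nginx and simply trusts the user-agent string; since that string can be spoofed, verify genuine Googlebot traffic separately (for example with a reverse DNS lookup) before drawing conclusions. A high share of hits on parameterized URLs is one sign of wasted crawling. The log lines are invented.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Combined log format: ip ident user [time] "METHOD path PROTO" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (\S+) [^"]*" \d{3} \S+ "[^"]*" "([^"]*)"'
)


def googlebot_crawl_summary(lines):
    """Summarize requests whose user agent claims to be Googlebot.

    Returns (total_hits, hits_on_parameterized_urls, hits_per_top_level_section).
    """
    sections = Counter()
    parameterized = 0
    total = 0
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group(2):
            continue  # unparseable line or a non-Googlebot client
        total += 1
        parts = urlsplit(match.group(1))
        if parts.query:  # e.g. ?color=red&sort=price
            parameterized += 1
        first_segment = parts.path.strip("/").split("/")[0]
        sections["/" + first_segment] += 1
    return total, parameterized, sections


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Mar/2025:10:00:00 +0000] "GET /shop?color=red HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2025:10:00:05 +0000] "GET /shop?color=blue&sort=price HTTP/1.1" 200 498 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2025:10:00:09 +0000] "GET /blog/new-post HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Mar/2025:10:01:00 +0000] "GET /blog/new-post HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
total, parameterized, sections = googlebot_crawl_summary(sample_log)
print(total, parameterized, dict(sections))  # 3 2 {'/shop': 2, '/blog': 1}
```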

Orphan Pages Without Internal Links: Here's a common scenario: you publish a new article, but you don't link to it from anywhere else on your site. It's not in your navigation, not in your sidebar, not linked from related articles. This creates an "orphan page"—content that exists on your site but has no pathway for discovery.

Googlebot primarily discovers new content by following links from pages it already knows about. If there's no link pathway to your new content, Googlebot might never find it organically. Even if you submit a sitemap, pages with strong internal linking get crawled and indexed much faster than orphaned content.
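If you can export the internal links your own site crawler finds (a map from each page to the links on it), spotting pages with no inbound internal links is a simple set operation. The link map, page list, and `find_orphans` helper below are hypothetical.

```python
def find_orphans(internal_links: dict[str, set[str]], known_pages: set[str], homepage: str) -> list[str]:
    """Return pages that no other page links to (the homepage is exempt)."""
    linked_to: set[str] = set()
    for source, targets in internal_links.items():
        # A page linking to itself does not make it discoverable.
        linked_to.update(target for target in targets if target != source)
    return sorted(known_pages - linked_to - {homepage})


# Hypothetical crawl of a small site: page -> set of internal links found on it.
links = {
    "/": {"/blog", "/about"},
    "/blog": {"/blog/older-post"},
    "/blog/older-post": {"/blog"},
    "/blog/new-post": {"/blog/new-post"},  # only links to itself
}
pages_in_sitemap = {"/", "/blog", "/about", "/blog/older-post", "/blog/new-post"}
print(find_orphans(links, pages_in_sitemap, homepage="/"))  # ['/blog/new-post']
```

Adding a single link from `/blog` to `/blog/new-post` would clear it from the list.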

Sitemap Problems That Slow Discovery: XML sitemaps are your direct communication channel with Google, listing all the URLs you want indexed. But many sites have sitemap issues that undermine this process: outdated sitemaps that don't include new content, sitemaps that haven't been submitted to Google Search Console, or sitemaps with errors that prevent proper processing.

Your sitemap should be automatically updated whenever you publish new content. If you're manually updating sitemaps or relying on plugins that don't trigger updates properly, new content won't be included in the sitemap Google checks regularly.
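If your CMS or plugin doesn't regenerate the sitemap reliably, a small script run on every publish can rebuild it and double-check that new URLs made it in. This sketch follows the sitemaps.org XML format and uses Python's `xml.etree.ElementTree`; the `build_sitemap` and `missing_from_sitemap` helpers and the example URLs are illustrative assumptions, not part of any particular CMS.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Render (url, last_modified) pairs as a sitemaps.org XML sitemap."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()  # YYYY-MM-DD
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


def missing_from_sitemap(sitemap_xml: str, published_urls: list[str]) -> list[str]:
    """Return published URLs that the sitemap does not list."""
    root = ET.fromstring(sitemap_xml)
    listed = {loc.text.strip() for loc in root.findall("sm:url/sm:loc", {"sm": SITEMAP_NS})}
    return [url for url in published_urls if url not in listed]


sitemap = build_sitemap([
    ("https://example.com/", date(2025, 3, 1)),
    ("https://example.com/blog/older-post", date(2025, 2, 14)),
])
print(missing_from_sitemap(sitemap, [
    "https://example.com/blog/older-post",
    "https://example.com/blog/new-post",
]))  # ['https://example.com/blog/new-post']
```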

The solution involves creating clear pathways for discovery. Build internal links from high-authority pages on your site to new content. Ensure your sitemap is automatically updated and properly submitted. For sites with crawl budget constraints, focus on improving server response times and eliminating crawl traps such as endless filter, sort, or calendar URLs and excessive URL parameters.

When Google Crawls But Chooses Not to Index

This is where it gets tricky. Google finds your page, crawls it successfully, but makes a deliberate decision not to include it in its index. The URL Inspection tool will show "Crawled - currently not indexed" or a similar status. This often points to content quality concerns, though Google doesn't state the reason for any individual page.

Thin Content That Doesn't Meet the Bar: Google has billions of pages in their index. They're selective about what they add. If your content is too short, lacks substantive information, or doesn't provide clear value beyond what already exists in their index, Google might crawl it but choose not to store it.

What counts as "thin" varies by topic and search intent. A 300-word product description might be perfectly adequate for a specific product, while a 300-word guide on a complex topic would be considered insufficient. Google evaluates whether your content satisfies the likely intent behind searches that might lead to that page.

Duplicate or Near-Duplicate Content Issues: If Google already has very similar content in their index—either from your own site or from other sites—they might skip indexing your version. This doesn't necessarily mean you copied content. It can happen with product descriptions that match manufacturer content, location-based pages with similar templates, or multiple articles covering the same topic in similar ways.

Google's goal is to show diverse, unique results. If your content doesn't add anything new to what they already have indexed, they see little reason to include it. This is particularly common for sites that generate large volumes of templated content or aggregate information from other sources without adding unique insights.
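To find near-duplicate pages on your own site before Google does, one common approximation is to compare overlapping word sequences ("shingles") with Jaccard similarity. This is not how Google measures duplication, just a cheap heuristic; the threshold and shingle size are arbitrary starting points you'd tune for your content, and the example pages are invented.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences ("shingles") from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles across both pages."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(pages: dict[str, str], threshold: float = 0.5, k: int = 5):
    """Return (url_a, url_b, similarity) for page pairs at or above threshold."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    pairs = []
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


# Hypothetical templated location pages plus one unrelated article.
pages = {
    "/austin-plumber": "Plumbing services in Austin with fast same day repairs and free quotes",
    "/dallas-plumber": "Plumbing services in Dallas with fast same day repairs and free quotes",
    "/faucet-guide": "How to fix a leaking kitchen faucet step by step",
}
print(near_duplicates(pages, threshold=0.5, k=3))  # [('/austin-plumber', '/dallas-plumber', 0.54)]
```

Swapping only the city name leaves 7 of the 13 distinct three-word shingles shared, which is exactly the templated pattern described above.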

The Helpful Content Factor: Google's helpful content system, which Google folded into its core ranking systems in March 2024, specifically targets content created primarily for search engines rather than people. If your site has patterns that suggest content is being mass-produced for SEO purposes (covering trending topics without expertise, creating content in areas outside your site's focus, or producing content that reads like it was written to manipulate rankings), Google may be reluctant to index new pages.

This assessment operates at both the page and site level. If Google determines your site has a high proportion of unhelpful content, even genuinely good new content might face indexing delays or rejection. Google looks for signals such as whether content demonstrates first-hand expertise, whether it provides original information or insights, and whether it would be useful to someone arriving from search. If you publish AI-generated content, it's worth understanding how it performs against these quality thresholds.

Diagnosing Content Quality Issues

Check Google Search Console's Page indexing report (formerly the Index Coverage report) for patterns. If you see many pages marked "Crawled - currently not indexed," content quality is likely the issue. Compare the characteristics of pages that do get indexed versus those that don't, looking for differences in length, depth, uniqueness, and value.
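One way to make that comparison concrete is to join your Search Console status with your own page text and compare simple metrics across the two groups. The sketch below only looks at median word count, which is a crude proxy for depth and says nothing about uniqueness or value; the page records are hypothetical.

```python
from statistics import median


def word_count_by_status(pages: list[dict]) -> dict:
    """Median word count for indexed vs. not-indexed pages (None if a group is empty)."""
    groups: dict[str, list[int]] = {"indexed": [], "not indexed": []}
    for page in pages:
        key = "indexed" if page["indexed"] else "not indexed"
        groups[key].append(len(page["text"].split()))
    return {key: (median(counts) if counts else None) for key, counts in groups.items()}


# Hypothetical records: "indexed" from a Search Console export, "text" from your own crawl.
audit_pages = [
    {"url": "/guide-a", "indexed": True, "text": "word " * 1800},
    {"url": "/guide-b", "indexed": True, "text": "word " * 1200},
    {"url": "/tag/misc", "indexed": False, "text": "word " * 90},
    {"url": "/news-brief", "indexed": False, "text": "word " * 250},
]
print(word_count_by_status(audit_pages))  # {'indexed': 1500.0, 'not indexed': 170.0}
```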

The fix requires substantive content improvement. Expand thin content with detailed information, examples, and unique insights. Consolidate duplicate or near-duplicate pages into comprehensive resources. Demonstrate expertise and first-hand experience in your content. Focus on creating content that genuinely helps your target audience rather than content designed primarily to rank for keywords. Following proven SEO content writing practices can improve your chances of getting indexed.

Taking Control: Proactive Indexing Strategies

Waiting for Google to naturally discover and index your content can take weeks or months. Fortunately, you can accelerate the process with proactive submission methods that put your content directly in front of search engines.

Manual Indexing Requests Through Search Console: Google Search Console's URL Inspection tool isn't just for checking status—it's also your direct line to request indexing. After inspecting a URL, you can click "Request Indexing" to ask Google to prioritize crawling and indexing that specific page.

This doesn't guarantee indexing, and Google doesn't promise any timeline, but it can prompt Google to recrawl the page sooner. For important new content or recently fixed pages, this can reduce indexing time from weeks to days. The limitation is that Search Console enforces a daily quota on indexing requests, so prioritize your most important content.

IndexNow for Instant Multi-Engine Submission: The IndexNow protocol, supported by Bing, Yandex, and several other search engines, allows you to notify search engines immediately when you publish or update content. Instead of waiting for search engines to discover changes through regular crawling, you send a direct notification with the URL that changed.

This is particularly valuable for time-sensitive content or high-volume publishers. When you publish breaking news, product launches, or trending topic coverage, IndexNow lets participating search engines know about it within minutes rather than hours or days, although each engine still decides when to crawl. While Google hasn't adopted IndexNow, getting indexed quickly in Bing and other search engines still drives valuable traffic.
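An IndexNow submission is a small JSON POST. The sketch below builds (but doesn't send) a bulk request with Python's standard library, following the protocol as documented at indexnow.org: a `host`, your `key`, a `keyLocation` where a text file containing that key is hosted on your site, and a `urlList`. The endpoint shown is the shared `api.indexnow.org` address; the key value and the helper name are placeholders.

```python
import json
from urllib.parse import urlsplit
from urllib.request import Request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host: str, key: str, urls: list[str]) -> Request:
    """Build (but do not send) an IndexNow bulk-submission POST request.

    Assumes a text file containing the key is served at https://<host>/<key>.txt.
    """
    foreign = [u for u in urls if urlsplit(u).hostname != host]
    if foreign:
        raise ValueError(f"URLs must belong to {host}: {foreign}")
    payload = {
        "host": host,
        "key": key,
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": urls,
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


req = build_indexnow_request("example.com", "hypothetical-key-123",
                             ["https://example.com/blog/new-post"])
print(req.get_method(), req.full_url)  # POST https://api.indexnow.org/indexnow
# To actually submit it: urllib.request.urlopen(req)
```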

Strategic Internal Linking for Natural Discovery: The fastest path to indexing is often the most organic: building strong internal link pathways from already-indexed, frequently-crawled pages to your new content. When Googlebot visits your homepage or popular articles, it follows links to discover new pages.

Link to new content from your homepage, from related articles, from category pages, and from your navigation structure. The more pathways that lead to new content, and the more frequently Googlebot crawls the pages that link to it, the faster discovery happens. This is one reason major news sites get new articles indexed within minutes: they have strong internal linking structures that Googlebot crawls constantly.
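Click depth (the fewest links needed to reach a page from your homepage) is a handy way to see how buried new content is. A breadth-first search over your internal link map computes it; the site structure below is hypothetical, and pages missing from the result aren't reachable from the homepage at all.

```python
from collections import deque


def click_depths(internal_links: dict[str, set[str]], homepage: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in sorted(internal_links.get(page, ())):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: the new post is only linked from page 2 of a category archive.
links = {
    "/": {"/blog", "/about"},
    "/blog": {"/blog/category/tools"},
    "/blog/category/tools": {"/blog/category/tools/page/2"},
    "/blog/category/tools/page/2": {"/blog/new-post"},
}
print(click_depths(links)["/blog/new-post"])  # 4

links["/"].add("/blog/new-post")  # feature it on the homepage
print(click_depths(links)["/blog/new-post"])  # 1
```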

Combine these approaches for maximum effect. When you publish important content, add internal links from high-traffic pages, submit your updated sitemap, use IndexNow to notify supporting search engines immediately, and request indexing through Google Search Console. This multi-pronged approach can substantially shorten the time from publication to indexing.

Building a Sustainable Indexing Monitoring System

Fixing current indexing issues is important. Preventing future issues is even better. As you scale content production, you need systems that catch indexing problems before they become major obstacles to organic traffic growth.

Regular Indexing Audits: Set a recurring calendar reminder to review Google Search Console's Page indexing report (formerly the Index Coverage report). Weekly checks work well for sites publishing frequently; monthly checks suffice for slower-publishing sites. Look for sudden increases in not-indexed pages, newly appearing reasons, or changes in the ratio of indexed to not-indexed pages.

The Page indexing report sorts every URL Google knows about on your site into two groups, indexed and not indexed, and lists a specific reason for each set of not-indexed URLs (for example, "Excluded by 'noindex' tag" or "Crawled - currently not indexed"). The older Index Coverage report used four buckets instead: valid, valid with warnings, excluded, and error. Either way, understanding these categories helps you prioritize fixes.

Understanding Exclusion Reasons: Not all excluded pages are problems. Google might legitimately exclude pages you've marked as noindex, pages blocked by robots.txt, or duplicate pages where you've specified a canonical URL pointing elsewhere. These are "working as intended" exclusions.

The exclusions that need attention are "Crawled - currently not indexed," "Discovered - currently not indexed," and "Alternate page with proper canonical tag" when you didn't intend that page to be an alternate. "Crawled - currently not indexed" means Google fetched the page but chose not to index it, often for quality reasons. "Discovered - currently not indexed" means Google knows the URL but hasn't crawled it yet, which commonly points to crawl capacity or internal linking problems. Learning why content takes long to index helps you set realistic expectations and identify genuine problems.
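When a not-indexed export gets long, it helps to filter it down to the URLs you actually want in Google and attach a first thing to check. In this sketch the reason labels mirror the statuses discussed above, but the exact wording in your export may differ (Search Console uses typographic quotes in some labels), so treat the `NEXT_STEP` mapping and the `triage` helper as a hypothetical starting point.

```python
# Hypothetical mapping from report reason labels to a first thing to check.
# Match these labels to the exact wording in your own Search Console export.
NEXT_STEP = {
    "Crawled - currently not indexed": "review content quality and uniqueness",
    "Discovered - currently not indexed": "add internal links; check server speed",
    "Excluded by 'noindex' tag": "remove the noindex directive",
    "Blocked by robots.txt": "fix the Disallow rule",
    "Alternate page with proper canonical tag": "point the canonical at this page",
}


def triage(rows: list[tuple[str, str]], should_index: set[str]) -> list[tuple[str, str, str]]:
    """Keep only not-indexed URLs you want indexed, each with a suggested next step."""
    return [
        (url, reason, NEXT_STEP.get(reason, "inspect the URL manually"))
        for url, reason in rows
        if url in should_index  # everything else is a "working as intended" exclusion
    ]


rows = [
    ("https://example.com/thank-you", "Excluded by 'noindex' tag"),
    ("https://example.com/blog/new-post", "Crawled - currently not indexed"),
    ("https://example.com/guide?ref=nav", "Alternate page with proper canonical tag"),
]
wanted = {"https://example.com/blog/new-post", "https://example.com/about"}
for item in triage(rows, wanted):
    print(item)
```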

Creating an Indexing Workflow: As you scale content production, manual checking becomes unsustainable. Build a workflow that automatically monitors indexing status and alerts you to problems. This might involve connecting Google Search Console API to your analytics dashboard, setting up automated reports, or using SEO content tools that track indexing status across your entire content library.
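At its simplest, automated monitoring means comparing today's indexing snapshot with the previous one and alerting on changes. The sketch below assumes you already collect `{url: is_indexed}` snapshots from the URL Inspection API or report exports; the alerting itself (email, chat, dashboard) is left out, and the function name is hypothetical.

```python
def indexing_regressions(previous: dict[str, bool], current: dict[str, bool]) -> dict[str, list[str]]:
    """Compare two {url: is_indexed} snapshots taken on different days."""
    dropped, not_yet_indexed, newly_indexed = [], [], []
    for url, indexed in sorted(current.items()):
        was_indexed = previous.get(url, False)  # URLs absent before count as not indexed
        if was_indexed and not indexed:
            dropped.append(url)  # the urgent case: a page fell out of the index
        elif not indexed:
            not_yet_indexed.append(url)
        elif not was_indexed:
            newly_indexed.append(url)
    return {"dropped": dropped, "not_yet_indexed": not_yet_indexed, "newly_indexed": newly_indexed}


yesterday = {"/guide": True, "/pricing": True, "/blog/new-post": False}
today = {"/guide": True, "/pricing": False, "/blog/new-post": True, "/blog/another-post": False}
print(indexing_regressions(yesterday, today))
```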

For teams publishing multiple articles daily, knowing which pieces successfully indexed and which didn't becomes critical business intelligence. You can identify patterns—perhaps certain content types consistently face indexing issues, or specific authors need additional training on content quality standards. This data informs both immediate fixes and long-term content strategy.

The goal is making indexing monitoring a routine part of your content operations rather than a reactive troubleshooting exercise. When you catch indexing issues within days rather than months, fixes are faster and you lose less potential traffic to the indexing gap.

Moving Forward: Making Indexing a Competitive Advantage

Content that doesn't appear in Google search results might as well not exist. But as you've seen throughout this guide, indexing failures are almost always fixable once you identify the specific blocker. The diagnostic approach is straightforward: start with technical barriers, then examine crawlability and site architecture, then evaluate content quality.

Most indexing issues fall into predictable categories. You've either accidentally told Google not to index your content through technical directives, or Google can't find your content due to poor site architecture, or Google found your content but chose not to index it due to quality concerns. Each category has clear diagnostic tools and proven solutions.

The key mindset shift is treating indexing as an ongoing process rather than a one-time task. As you scale SEO content production, indexing monitoring becomes as important as keyword research or content creation itself. You can create the world's best content, but if it's not indexed, it generates zero organic traffic.

This becomes even more critical as the search landscape evolves beyond traditional search engines. AI models like ChatGPT, Claude, and Perplexity are increasingly shaping how people discover information and brands. Many of these tools draw on search indexes or their own web crawlers when forming responses and recommendations, so content that can't be crawled or indexed is less likely to surface there. Learning to optimize content for AI search helps your content remain visible across discovery channels. If your content isn't indexed, you risk being invisible not just in Google search results, but across much of the AI-powered discovery ecosystem.

Stop guessing how AI models like ChatGPT and Claude talk about your brand—get visibility into every mention, track content opportunities, and automate your path to organic traffic growth. Start tracking your AI visibility today and see exactly where your brand appears across top AI platforms.