You're staring at your analytics dashboard at 2 AM, and something doesn't add up. Your brand gets mentioned consistently when prospects ask ChatGPT for recommendations in your category. But when those same questions go to Claude or Perplexity? Radio silence. Your competitor, meanwhile, dominates those platforms while barely showing up in ChatGPT responses.

This isn't a coincidence. It's a pattern.

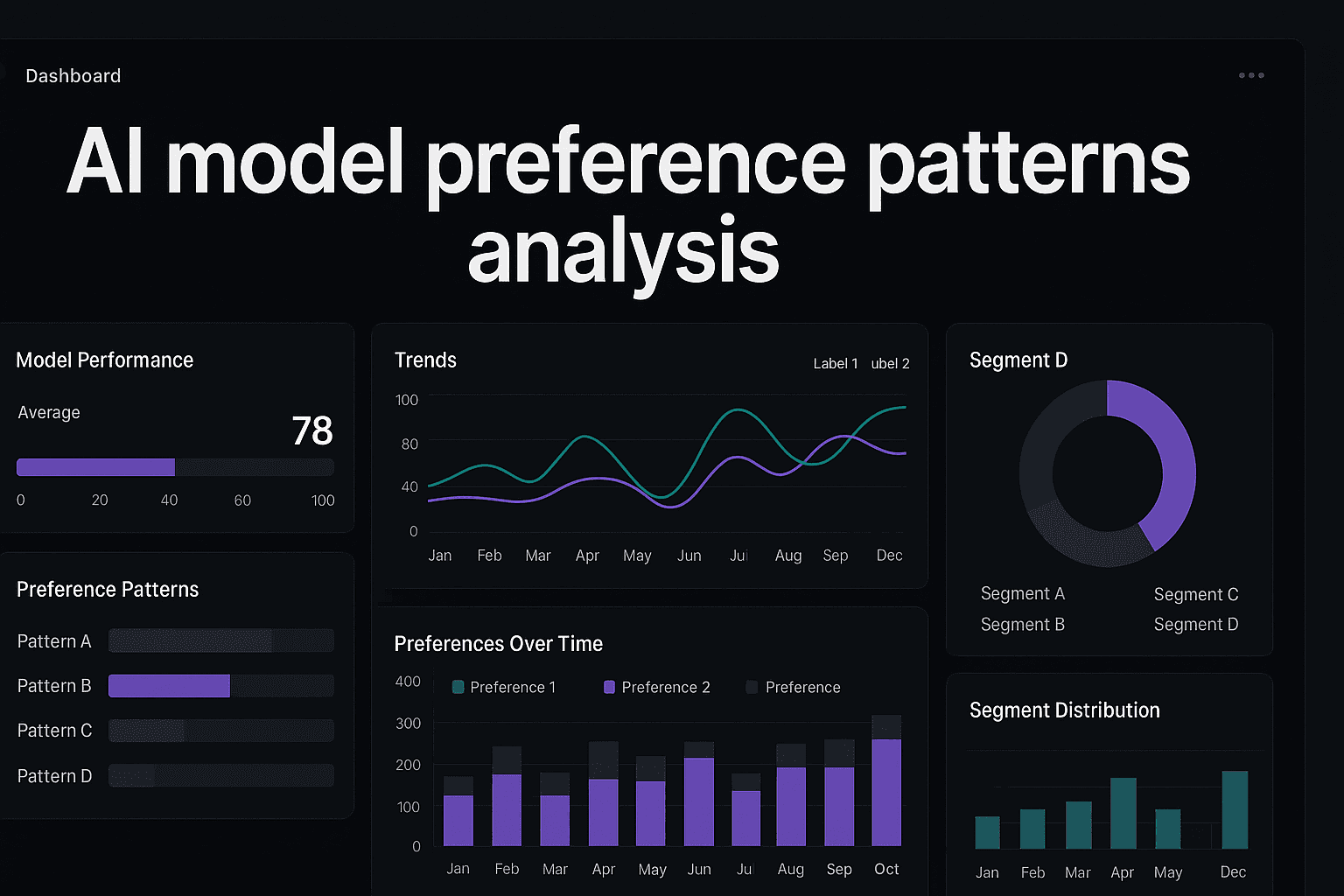

AI models don't recommend products and services randomly. Each model has developed distinct preferences—systematic tendencies in how they select sources, prioritize information, and present recommendations. These preferences are as predictable as consumer shopping habits. Once you understand them, you can measure them, track them, and optimize for them.

The problem? Most businesses have no idea these patterns exist. They're investing heavily in traditional SEO while remaining completely blind to how AI models actually recommend their solutions. This creates a massive visibility gap where companies may rank well in Google but be invisible in the AI-powered recommendations that increasingly drive purchase decisions.

Think about it: When your ideal customer asks an AI assistant for software recommendations, project management tools, or marketing solutions, does your brand appear in that response? More importantly, does it appear consistently across different AI models? If you don't know the answer, you're flying blind in the fastest-growing discovery channel in digital marketing.

The competitive advantage goes to businesses that recognize these preference patterns early. While your competitors treat AI visibility as a mystery, you can approach it as measurable business intelligence. The patterns are there. The data exists. The question is whether you'll analyze it before your competition does.

Here's everything you need to know about AI model preference patterns analysis—what these patterns are, why they matter to your bottom line, how AI models develop their distinct "recommendation DNA," and most importantly, how to systematically analyze and leverage these patterns for competitive advantage. By the end, you'll understand exactly how to identify preference patterns, avoid costly analysis mistakes, and build a sustainable framework for optimizing your AI visibility across every major model.

The AI recommendation landscape isn't random. It's predictable. And that predictability is your opportunity.

What Are AI Model Preference Patterns?

AI model preference patterns are the systematic tendencies that different AI models exhibit when selecting and presenting information in their responses. Think of them as each model's unique "recommendation fingerprint"—consistent behaviors in how they prioritize sources, structure answers, and choose which brands or solutions to mention.

These patterns aren't random quirks. They're the result of each model's training data, architectural decisions, and optimization objectives. ChatGPT might consistently favor certain types of authoritative sources. Claude might show a preference for detailed technical documentation. Perplexity might prioritize recent content over established resources. Understanding these patterns through AI brand monitoring helps you predict and influence how each model will represent your brand.

The patterns manifest across multiple dimensions. Source preference patterns determine which websites and domains a model trusts and cites frequently. Content format patterns reveal whether a model favors long-form articles, technical documentation, or concise summaries. Recency patterns show how much weight a model places on publication dates versus established authority. Citation patterns indicate how models attribute information and link to sources.

What makes these patterns particularly valuable is their consistency. Once you identify that a specific model consistently prioritizes certain content characteristics, you can optimize your content strategy accordingly. This isn't about gaming the system—it's about understanding each model's information quality signals and ensuring your content aligns with them.

The business impact is substantial. When you understand preference patterns, you can predict which content will gain visibility in AI responses, identify gaps in your current strategy, and allocate resources to the highest-impact optimizations. You move from reactive content creation to strategic positioning based on measurable model behaviors.

Why AI Model Preferences Matter for Your Business

The shift from search engines to AI assistants represents a fundamental change in how customers discover and evaluate solutions. When someone asks ChatGPT for project management software recommendations, they're not clicking through ten blue links—they're receiving a curated list of 3-5 options with explanations. If your product isn't in that list, you don't exist in that buying journey.

Traditional SEO metrics don't capture this new reality. You might rank #1 in Google for "marketing automation software" but never appear in AI assistant recommendations for the same query. This creates a dangerous blind spot where businesses invest heavily in visibility channels that matter less while ignoring the channels that increasingly drive purchase decisions.

The competitive dynamics are shifting rapidly. Early movers who understand and optimize for AI model preferences are establishing dominant positions in AI-powered recommendations. Meanwhile, companies that ignore these patterns are losing visibility in the fastest-growing discovery channel, often without realizing it until competitors have established insurmountable advantages.

Consider the customer journey implications. When prospects use AI assistants for research, they're typically earlier in the buying process and more open to discovering new solutions. Being present in these AI-powered discovery moments means reaching customers before they've formed strong preferences. Miss these moments, and you're fighting an uphill battle against competitors who were recommended by their trusted AI assistant.

The payoff is measurable. Businesses that systematically analyze and optimize for AI model preferences can track the results in brand mentions, recommendation frequency, and ultimately qualified leads from AI-powered discovery. This isn't theoretical. It's happening now, and the gap between leaders and laggards grows wider each quarter.

How Different AI Models Develop Distinct Preferences

AI models develop their preference patterns through a combination of training data, architectural choices, and post-training optimization. Understanding these factors helps you predict and influence model behavior rather than treating it as a black box.

Training data composition plays a foundational role. Models trained heavily on academic papers might show preference for scholarly sources and formal citation styles. Models trained on diverse web content might favor practical, accessible explanations. The ratio of different content types in training data creates baseline preferences that persist even after additional fine-tuning.

Architectural decisions shape how models process and prioritize information. Some assistants pair the model with retrieval-augmented generation (RAG), pulling in current web content at query time, which makes them more sensitive to recency and real-time information. Others rely primarily on their training data, giving more weight to established, authoritative sources that were well-represented during training.

Post-training optimization through reinforcement learning from human feedback (RLHF) further refines these preferences. When human evaluators consistently rate certain types of responses higher, the model learns to favor content characteristics that produce those responses. This creates preference patterns around content structure, citation style, and information presentation that vary between models based on their specific optimization objectives.

The result is that each major AI model develops a distinct "personality" in how it selects and presents information. These personalities aren't arbitrary. They're the product of deliberate design choices and optimization processes. By analyzing these patterns through AI content strategy frameworks, you can infer what each model values and optimize accordingly.

Key Metrics for Analyzing AI Model Preferences

Effective preference pattern analysis requires tracking specific, measurable metrics that reveal model behavior. These metrics form the foundation of data-driven optimization rather than guesswork.

Mention frequency is your primary visibility metric. Track how often your brand, products, or content appear in AI responses across different query types and models. This baseline metric reveals which models currently favor your content and which represent visibility gaps. Consistent tracking over time shows whether your optimization efforts are working.
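To make mention frequency concrete, here is a minimal Python sketch, assuming you have already collected responses as (model, response text) pairs. The function name `mention_rate_by_model`, the brand names, and the sample responses are all hypothetical placeholders, not output from any real model.

```python
import re
from collections import defaultdict


def mention_rate_by_model(responses, brand):
    """Return {model: share of that model's responses mentioning `brand`}."""
    # Whole-word, case-insensitive match so "Acme" does not match "Acmeology".
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    totals = defaultdict(int)
    hits = defaultdict(int)
    for model, text in responses:
        totals[model] += 1
        if pattern.search(text):
            hits[model] += 1
    return {model: hits[model] / totals[model] for model in totals}


# Hypothetical sample data: (model, response text) pairs.
sample = [
    ("chatgpt", "Popular options include Acme, Globex, and Initech."),
    ("chatgpt", "Consider Globex or Acme for small teams."),
    ("claude", "Globex and Initech are commonly recommended."),
    ("claude", "Many teams choose Initech."),
]

rates = mention_rate_by_model(sample, "Acme")
print(rates)
```

In this made-up sample the brand appears in every ChatGPT response and in no Claude response, which is exactly the kind of gap the metric is meant to surface. Recomputing the rates on a fixed schedule gives you the trend line.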

Position in recommendations matters as much as presence. Being mentioned fifth in a list of ten options has a dramatically different business impact than being the first recommendation. Track your average position across different query categories and models to identify where you're gaining or losing ground relative to competitors.
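One rough way to approximate position is to rank brands by where each is first mentioned in a response. The sketch below assumes that order of first mention is a reasonable proxy for recommendation order, which will not hold for every response format. The helper names and sample responses are hypothetical.

```python
import re


def brand_positions(text, brands):
    """Rank brands by the offset of their first mention (1 = mentioned first)."""
    first_seen = {}
    for brand in brands:
        match = re.search(rf"\b{re.escape(brand)}\b", text, re.IGNORECASE)
        if match:
            first_seen[brand] = match.start()
    ordered = sorted(first_seen, key=first_seen.get)
    return {brand: rank for rank, brand in enumerate(ordered, start=1)}


def average_position(texts, brand, brands):
    """Average rank of `brand` over the responses that mention it, else None."""
    ranks = [brand_positions(text, brands).get(brand) for text in texts]
    ranks = [rank for rank in ranks if rank is not None]
    return sum(ranks) / len(ranks) if ranks else None


# Hypothetical responses to the same query category.
responses = [
    "Top picks: Globex, Acme, and Initech.",
    "Acme is a strong choice, followed by Initech.",
    "Most teams start with Initech or Globex.",
]
tracked = ["Acme", "Globex", "Initech"]

avg = average_position(responses, "Acme", tracked)
print(avg)
```

Responses that omit the brand are excluded from the average here, so read this number alongside mention frequency rather than on its own.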

Citation patterns reveal how models attribute and link to your content. Some models might mention your brand but cite competitor sources. Others might link directly to your content. Understanding these patterns helps you optimize for the specific citation behaviors of each model, which you can track through AI mention tracking software.

Context and framing analysis examines how models describe your brand when they do mention it. Are you positioned as an established leader, an innovative newcomer, or a budget option? This qualitative metric reveals whether models understand your positioning and whether that understanding aligns with your brand strategy.

Query category performance shows which types of questions trigger mentions of your brand. You might dominate responses to feature-specific queries but be absent from broader category questions. This metric helps you identify content gaps and optimization opportunities across different query intents.
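A small sketch of how category performance could be tallied, assuming each tested query has been labeled with a category and a flag for whether the brand was mentioned. The category labels, the 0.5 threshold, and the records are illustrative assumptions only.

```python
from collections import defaultdict


def category_gaps(records, threshold=0.5):
    """Return (mention rate per category, categories below `threshold`)."""
    counts = defaultdict(lambda: [0, 0])  # category -> [mentions, total]
    for category, mentioned in records:
        counts[category][0] += int(mentioned)
        counts[category][1] += 1
    rates = {cat: hits / total for cat, (hits, total) in counts.items()}
    gaps = sorted(cat for cat, rate in rates.items() if rate < threshold)
    return rates, gaps


# Hypothetical records: (query category, was the brand mentioned?).
records = [
    ("feature-specific", True),
    ("feature-specific", True),
    ("feature-specific", False),
    ("broad category", False),
    ("broad category", False),
    ("comparison", True),
    ("comparison", False),
]

rates, gaps = category_gaps(records)
print(rates)
print(gaps)
```

With this sample, the brand does well on feature-specific queries and is absent from broad category questions, so "broad category" is the only label flagged as a gap.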

Tools and Methods for Tracking Preference Patterns

Systematic preference pattern analysis requires the right combination of tools and methodologies. Manual spot-checking isn't sufficient—you need scalable, repeatable processes that generate reliable data.

Automated query testing forms the foundation of preference pattern analysis. Build a comprehensive set of test queries that represent how your target customers actually use AI assistants. These queries should span different intents (informational, comparison, recommendation), specificity levels (broad category to specific features), and customer journey stages (awareness, consideration, decision).
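A test set like this can be generated rather than written by hand. The sketch below crosses the three dimensions named above using `itertools.product`. The templates, topics, and stage phrasing are hypothetical examples, and a real set should be based on the language your customers actually use.

```python
from itertools import product

# Hypothetical templates; replace with phrasing drawn from real customer questions.
INTENT_TEMPLATES = {
    "informational": "What should I know about {topic}?",
    "comparison": "How do the leading options for {topic} compare?",
    "recommendation": "What do you recommend for {topic}?",
}
TOPICS = {
    "broad": "project management software",
    "specific": "project management software with Gantt charts",
}
STAGE_CONTEXT = {
    "awareness": "I'm just starting to research this.",
    "consideration": "I'm comparing a shortlist of vendors.",
    "decision": "I need to pick one this week.",
}


def build_query_set():
    """Cross intent, specificity, and journey stage into labeled test queries."""
    queries = []
    for (intent, template), (specificity, topic), (stage, context) in product(
        INTENT_TEMPLATES.items(), TOPICS.items(), STAGE_CONTEXT.items()
    ):
        queries.append({
            "intent": intent,
            "specificity": specificity,
            "stage": stage,
            "text": f"{context} {template.format(topic=topic)}",
        })
    return queries


queries = build_query_set()
print(len(queries))
print(queries[0]["text"])
```

Keeping the labels attached to each query means later results can be sliced by intent, specificity, or stage without re-tagging anything.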

Multi-model testing infrastructure allows you to run the same queries across ChatGPT, Claude, Perplexity, and other relevant models simultaneously. This parallel testing reveals preference differences between models and helps you identify which models to prioritize based on your visibility gaps. Using AI brand visibility tools can automate this cross-model comparison.
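The harness itself can stay small if each model sits behind a plain callable. The sketch below is one possible shape, not a reference implementation. The stub functions stand in for whatever API wrappers you write yourself, and no real vendor API is called or implied.

```python
from concurrent.futures import ThreadPoolExecutor


def run_across_models(queries, clients, max_workers=4):
    """Send every query to every client; clients maps model name -> callable."""
    jobs = [(model, query) for query in queries for model in clients]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so texts line up with jobs.
        texts = list(pool.map(lambda job: clients[job[0]](job[1]), jobs))
    return [
        {"model": model, "query": query, "response": text}
        for (model, query), text in zip(jobs, texts)
    ]


# Hypothetical stubs; swap in your own wrappers around each provider's API.
def fake_chatgpt(query):
    return f"[chatgpt stub] Acme and Globex for: {query}"


def fake_claude(query):
    return f"[claude stub] Globex and Initech for: {query}"


clients = {"chatgpt": fake_chatgpt, "claude": fake_claude}
results = run_across_models(
    ["best CRM for startups?", "top invoicing tools?"], clients
)
for row in results:
    print(row["model"], "|", row["response"])
```

Threads suit this workload because real calls would spend most of their time waiting on the network. Each result row keeps the model and query together so it can feed straight into mention, position, and category calculations.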
