You're staring at your content calendar at 11 PM on a Sunday, and the math isn't adding up. Your team can realistically produce maybe three solid articles per week—if everything goes perfectly. Meanwhile, your competitors are publishing daily, your SEO rankings are stagnating, and now you're hearing that ChatGPT and Claude are recommending competitor content to potential customers who never even make it to Google.

The content bottleneck isn't just slowing your growth anymore. It's actively costing you market share.

Here's what's changed in 2026: Content discovery happens in two parallel universes now. There's traditional search—Google, Bing, the usual suspects. But increasingly, your potential customers are asking AI models for recommendations, and those models are citing sources you've never heard of while completely ignoring your carefully optimized blog posts. You need content that performs in both worlds, and you need a lot of it.

The manual approach—brainstorming topics, assigning writers, multiple revision rounds, SEO optimization, publication—simply can't scale to meet this dual demand. Even if you doubled your content team tomorrow, you'd still be playing catch-up while burning through budget.

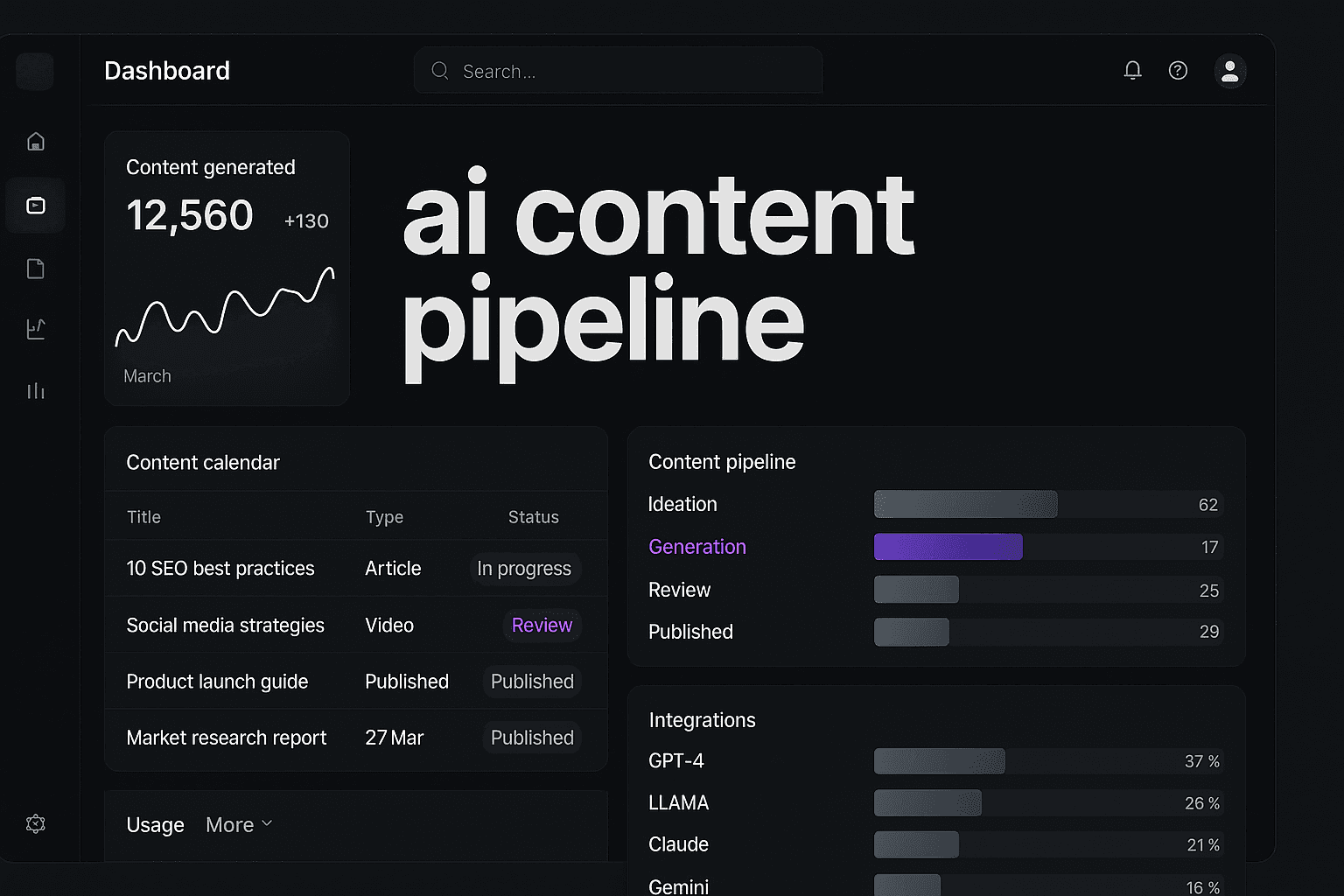

This is where AI content pipelines change everything. Not just "AI writing tools" that spit out generic drafts you still need to heavily edit. We're talking about complete automation systems that handle everything from strategic topic discovery to publication, optimized for both search engines and AI model recommendations, producing content at up to 10x your current volume while maintaining, or even improving, quality.

The difference between companies thriving in 2026 and those struggling often comes down to whether they've built these systems or are still relying on manual processes designed for a different era of content marketing.

Let's walk through how to build this system step-by-step, starting with the foundation that makes everything else possible.

Scaling Your Pipeline and Measuring What Actually Matters

You've built your AI content pipeline. It's running. Articles are publishing. Now comes the question that keeps executives up at night: "Is this actually working, and can we scale it without everything falling apart?"

Here's the reality most companies discover around week six: Getting to 20 articles per month feels manageable. Scaling to 100+ per month while maintaining quality? That's where most pipelines either evolve into genuine competitive advantages or collapse under their own weight.

Scaling Without Breaking Your Quality Standards

The first bottleneck you'll hit isn't technical—it's psychological. Your team will panic as volume increases, convinced that quality must be suffering. This is where performance monitoring becomes critical, not just for catching problems, but for proving that your quality metrics are actually holding steady or improving.

Start by establishing automated quality gates that flag content before it publishes. Set thresholds for readability scores, fact-checking confidence levels, brand voice consistency, and citation accuracy. When a piece fails any gate, it routes to human review rather than auto-publishing. This prevents the "garbage at scale" problem while keeping your team focused on exceptions rather than reviewing every single article.
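Here's a minimal sketch of what such a gate could look like, assuming each article arrives with scores between 0 and 1 already computed by your checking tools. The metric names, the threshold values, and the `run_quality_gates` function are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass, field

# Hypothetical minimum scores (0.0-1.0) per gate; tune these to your own standards.
THRESHOLDS = {
    "readability": 0.70,
    "fact_check_confidence": 0.90,
    "brand_voice": 0.80,
    "citation_accuracy": 0.95,
}


@dataclass
class GateResult:
    route: str  # "publish" or "human_review"
    failed: list = field(default_factory=list)


def run_quality_gates(scores: dict, thresholds: dict = THRESHOLDS) -> GateResult:
    """Route to human review if any score is missing or below its threshold."""
    failed = [
        name
        for name, minimum in thresholds.items()
        if scores.get(name, 0.0) < minimum  # a missing score counts as a failure
    ]
    return GateResult(route="human_review" if failed else "publish", failed=failed)


draft_scores = {
    "readability": 0.82,
    "fact_check_confidence": 0.85,  # below the 0.90 gate
    "brand_voice": 0.91,
    "citation_accuracy": 0.97,
}
result = run_quality_gates(draft_scores)
print(result.route, result.failed)
```

Treating a missing score as a failure is a deliberate choice here: if a checker silently breaks, articles go to a human instead of straight to publication.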

Your infrastructure needs to evolve as volume increases. Scaling successfully means extending automation beyond content creation: apply comprehensive AI content marketing strategies across distribution channels, promotion workflows, and performance tracking. What worked for 20 articles monthly will choke at 100.

Multi-channel distribution becomes non-negotiable at scale. Your pipeline should automatically publish to your CMS, distribute to social channels, trigger email campaigns, and update content hubs—all without manual intervention. Each distribution channel needs its own optimization rules and formatting requirements built into your automation.

Team structure shifts dramatically too. At 20 articles monthly, one content strategist can oversee everything. At 100+, you need specialized roles: topic strategists who feed the pipeline, quality analysts who monitor output, optimization specialists who tune performance, and technical operators who maintain the infrastructure. Plan for one full-time team member per 50 articles monthly as a baseline.

Measuring Success in the Dual-Optimization Era

Traditional content metrics tell half the story now. Yes, track your search rankings, organic traffic, and conversion rates—those still matter. But you're missing the bigger picture if you're not measuring AI model performance.

Start tracking AI model mentions and citation frequency. How often do ChatGPT, Claude, and Perplexity recommend your content when users ask relevant questions? This requires specialized AI brand monitoring tools that query AI models systematically and track when your brand appears in responses. Set a baseline in month one, then track monthly improvement.

Your ROI calculation needs to account for both time savings and capability expansion. Calculate your previous cost per article (writer time, editor time, SEO specialist time, overhead). Compare that to your per-article cost with the pipeline running (tool costs, oversight time, infrastructure). Most companies see 60-70% cost reduction while producing 5-10x more content—but the real value is in content you simply couldn't produce manually.
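The comparison above can be sketched in a few lines. Every dollar figure and cost category below is a placeholder chosen for illustration, so substitute your own numbers before drawing any conclusions.

```python
def cost_per_article(monthly_costs: dict, articles_per_month: int) -> float:
    """Total monthly cost divided by the number of articles published that month."""
    if articles_per_month <= 0:
        raise ValueError("articles_per_month must be positive")
    return sum(monthly_costs.values()) / articles_per_month


# Placeholder figures for a manual process producing 12 articles per month.
manual = cost_per_article(
    {"writers": 3600, "editors": 1200, "seo_specialist": 800, "overhead": 400},
    articles_per_month=12,
)

# Placeholder figures for a pipeline producing 60 articles per month.
pipeline = cost_per_article(
    {"tools": 1000, "oversight": 8000, "infrastructure": 1500},
    articles_per_month=60,
)

reduction = (manual - pipeline) / manual
print(f"Manual: ${manual:.2f} per article")
print(f"Pipeline: ${pipeline:.2f} per article")
print(f"Cost reduction: {reduction:.0%}")
```

Note that total spend can rise even as cost per article falls, which is why it helps to report both figures side by side.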

Brand authority metrics become increasingly important. Track your share of voice in AI model responses compared to competitors. Monitor how often your content gets cited as a primary source versus a secondary reference.
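If your monitoring tool can export the raw text of AI responses, share of voice is straightforward to compute. This is a rough sketch under that assumption: the `share_of_voice` function, the brand names, and the sample responses are all made up for illustration, and a simple name match will not tell you whether you were cited as a primary or secondary source.

```python
import re
from collections import Counter


def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Fraction of responses that mention each brand (case-insensitive, whole word)."""
    counts = Counter()
    for text in responses:
        for brand in brands:
            pattern = rf"\b{re.escape(brand)}\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[brand] += 1
    total = len(responses)
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


# Made-up responses standing in for exported AI model answers.
sample_responses = [
    "For content automation, Acme and Globex are common picks.",
    "Many teams build this in-house.",
    "Acme publishes a detailed guide on this topic.",
    "There is no single best tool for every team.",
]
voice = share_of_voice(sample_responses, ["Acme", "Globex"])
print(voice)
```

Run the same set of prompts each month so the numbers stay comparable against your month-one baseline.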

Step 2: AI Agent Workflow Architecture

Here's where most AI content pipelines either become genuine competitive advantages or expensive experiments that produce mediocre content at scale. The difference? How you architect your agent workflows.

Think of this like assembling a specialized team where each member has one job they do exceptionally well. You wouldn't ask your best researcher to also handle final edits, right? Same principle applies to AI agents—but most companies make the mistake of using a single AI tool to handle everything from research to publication.

Multi-Agent Team Configuration

Your pipeline needs at least four distinct agent types, each optimized for specific tasks. Start with a research agent that excels at data gathering and fact verification. This agent should connect to your knowledge base, competitor content, and industry data sources to build comprehensive research briefs.

Next comes your writing agent, but here's the critical part: you need different writing agents for different content types. A technical guide requires different capabilities than a thought leadership piece. Configure separate agents with distinct instructions for how-to content, explainers, and opinion pieces. Seamless agent orchestration requires a robust content generation API that lets specialized agents share data, maintain context, and coordinate handoffs without manual intervention.

Your optimization agent handles the dual mandate of SEO and GEO (generative engine optimization). This agent analyzes content in real time, suggesting semantic keyword integration, citation formatting, and structure adjustments that satisfy both search algorithms and AI model preferences. Don't skip this. It's the difference between content that ranks and content that gets recommended.

Finally, implement a quality control agent that runs automated checks before publication. This agent verifies factual accuracy, checks brand voice consistency, flags readability issues, and ensures all optimization requirements are met. Set hard thresholds—if content scores below your standards, it loops back to the appropriate agent for revision.
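To make the handoffs concrete, here's a bare-bones sketch of four stages passing a shared context from one to the next. The agent functions are stand-ins that only manipulate strings; in a real pipeline each would call whatever model or platform you've chosen, and all names here are hypothetical.

```python
from typing import Callable

Stage = Callable[[dict], dict]


def research_agent(ctx: dict) -> dict:
    # Stand-in: a real agent would query your knowledge base and data sources.
    return {**ctx, "brief": f"Research notes on {ctx['topic']}"}


def writing_agent(ctx: dict) -> dict:
    # Stand-in: a real agent would draft the article from the brief.
    return {**ctx, "draft": f"Article based on: {ctx['brief']}"}


def optimization_agent(ctx: dict) -> dict:
    # Stand-in: a real agent would adjust keywords, citations, and structure.
    return {**ctx, "draft": ctx["draft"] + " [optimized]"}


def quality_control_agent(ctx: dict) -> dict:
    # Stand-in: a real agent would score accuracy, voice, and readability.
    return {**ctx, "approved": "[optimized]" in ctx["draft"]}


def run_pipeline(topic: str, stages: list[Stage]) -> dict:
    """Run each stage in order, feeding one stage's output to the next."""
    ctx = {"topic": topic}
    for stage in stages:
        ctx = stage(ctx)
    return ctx


final = run_pipeline(
    "content pipelines",
    [research_agent, writing_agent, optimization_agent, quality_control_agent],
)
print(final["approved"], final["draft"])
```

Because every stage takes and returns the same kind of dictionary, you can swap in a different writing agent per content type without touching the rest of the chain.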

Workflow Automation and Quality Gates

Building an effective AI content workflow means establishing clear checkpoints, automated quality gates, and feedback loops that catch issues before they compound across your pipeline.

Create decision points between each agent handoff. When your research agent completes its work, the system should automatically verify that all required data points are present before passing to the writing agent. Missing competitor analysis? Loop back to research. Insufficient citation sources? Same thing.
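A decision point like this can be as simple as a completeness check on the research brief. In this sketch the field names and the minimum of three citation sources are assumptions picked for illustration.

```python
# Hypothetical fields a research brief must contain before writing starts.
REQUIRED_BRIEF_FIELDS = ("topic", "competitor_analysis", "citation_sources")
MIN_CITATION_SOURCES = 3  # assumed minimum; set your own


def next_step_after_research(brief: dict) -> tuple[str, list[str]]:
    """Return the next stage ("writing" or "research") and any problems found."""
    problems = [name for name in REQUIRED_BRIEF_FIELDS if not brief.get(name)]
    sources = brief.get("citation_sources") or []
    if sources and len(sources) < MIN_CITATION_SOURCES:
        problems.append("insufficient citation_sources")
    return ("research" if problems else "writing", problems)


incomplete_brief = {
    "topic": "AI content pipelines",
    "competitor_analysis": "",  # missing, so the brief loops back
    "citation_sources": ["https://example.com/report"],
}
step, problems = next_step_after_research(incomplete_brief)
print(step, problems)
```

Returning the list of problems along with the routing decision gives the research agent something specific to fix on its second pass.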

The biggest mistake companies make is assuming automation means "set it and forget it." Your workflow needs continuous monitoring. Set up alerts for when content gets stuck at a particular gate, when quality scores drop below thresholds, or when processing times exceed normal ranges. These signals tell you where your workflow needs adjustment.
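As a rough illustration, the alert rules above could start out like this. The six-hour limit, the 0.8 quality floor, and the shape of each pipeline item are assumptions, so adapt them to whatever your workflow platform actually records.

```python
from datetime import datetime, timedelta


def find_alerts(
    items: list[dict],
    now: datetime,
    max_stage_time: timedelta = timedelta(hours=6),  # assumed limit
    min_quality: float = 0.8,                        # assumed floor
) -> list[tuple[str, str]]:
    """Return (item id, reason) pairs for stuck or low-scoring content."""
    alerts = []
    for item in items:
        if now - item["entered_stage_at"] > max_stage_time:
            alerts.append((item["id"], f"stuck at {item['stage']}"))
        score = item.get("quality_score")
        if score is not None and score < min_quality:
            alerts.append((item["id"], "quality below threshold"))
    return alerts


now = datetime(2026, 1, 1, 12, 0)
in_flight = [
    {"id": "a", "stage": "optimization", "entered_stage_at": datetime(2026, 1, 1, 2, 0), "quality_score": 0.9},
    {"id": "b", "stage": "writing", "entered_stage_at": datetime(2026, 1, 1, 11, 0), "quality_score": 0.6},
    {"id": "c", "stage": "research", "entered_stage_at": datetime(2026, 1, 1, 11, 30), "quality_score": None},
]
alerts = find_alerts(in_flight, now)
print(alerts)
```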

Here's a practical tip that saves countless headaches: Build in a "human review trigger" for edge cases. If content fails quality gates twice, flag it for manual review rather than letting agents loop indefinitely. This prevents your pipeline from wasting resources on content that needs human intervention.
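The trigger itself is a small loop with a failure counter. In this sketch, `check` and `revise` are hypothetical callables standing in for your quality gate and your revising agent.

```python
from typing import Callable

MAX_FAILURES = 2  # after this many failed checks, stop looping and ask a human


def process_with_review_trigger(
    draft: str,
    check: Callable[[str], bool],
    revise: Callable[[str], str],
    max_failures: int = MAX_FAILURES,
) -> tuple[str, str]:
    """Return ("publish" or "human_review", latest draft)."""
    failures = 0
    while True:
        if check(draft):
            return "publish", draft
        failures += 1
        if failures >= max_failures:
            return "human_review", draft
        draft = revise(draft)


# Toy stand-ins: the gate wants two exclamation marks, each revision adds one.
outcome = process_with_review_trigger(
    "v0",
    check=lambda text: text.endswith("!!"),
    revise=lambda text: text + "!",
)
print(outcome)
```

The counter guarantees the loop ends: a draft either passes or reaches a person after at most one automated revision.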

Your workflow should also include performance feedback loops. Track which agent configurations produce the highest quality scores, fastest processing times, and best engagement metrics. Use this data to continuously refine your agent instructions and workflow rules. The pipeline that works well at 20 articles per month needs different optimization than one producing 200.

Building Your AI Content Foundation

Before you can automate anything, you need infrastructure that won't collapse under the weight of high-volume content production. Most AI content initiatives fail in the first month not because the AI isn't capable, but because the foundation wasn't built to handle what comes next.

Think of it like building a house. You wouldn't start hanging drywall before pouring the foundation, right? Same principle here. Your pipeline needs systems that support both traditional SEO optimization and the newer challenge of getting cited by AI models like ChatGPT and Claude.

Essential Infrastructure and Tool Selection

Your tool stack determines whether your pipeline hums along smoothly or becomes a Frankenstein's monster of disconnected systems that require constant manual intervention.

Start with a content management system that has robust API capabilities. WordPress with headless CMS functionality works. Webflow with proper integrations works. What doesn't work is a CMS that requires manual uploads and formatting for every single piece. The infrastructure you select must support bulk content creation at scale, handling everything from content brief generation to multi-channel distribution.

Next, you need AI platforms with multi-agent support. Single-AI tools like basic ChatGPT or Claude won't cut it here. You need systems where different specialized agents can handle research, writing, optimization, and quality control as distinct functions. Look for platforms that allow agent orchestration—where one agent's output becomes another agent's input automatically.

Analytics tools require an upgrade too. Google Analytics and Search Console are table stakes, but you also need AI visibility tracking: tools that monitor whether ChatGPT, Claude, or Perplexity are citing your content. Traditional SEO tools won't show you this data, and it's becoming increasingly critical for understanding your actual reach.

Critical Integration Points: Your CMS needs to talk to your AI platform. Your AI platform needs to feed data to your analytics. Your analytics need to inform your content strategy. If any of these connections require manual data transfer, you've got a bottleneck waiting to happen.

Budget Reality Check: Expect to invest in 3-5 core platforms. Capable AI blog writing software typically runs $200-500/month. To earn its place, it should produce comprehensive guides, technical documentation, and thought leadership pieces that meet the depth requirements for both SEO rankings and AI model citations. Add your CMS, analytics, and orchestration tools, and you're looking at $500-1,000 monthly for a professional setup.

The Testing Phase: Before committing to annual contracts, run 30-day trials of everything. Create one complete piece of content through your proposed pipeline. If it takes more than 4 hours of human time from brief to publication, something in your stack isn't working.

Strategic Planning and Team Preparation

Here's what catches most teams off guard: AI content pipelines don't eliminate human involvement—they transform it. Your team shifts from content creators to content strategists and quality controllers. That's a significant mindset change, and it needs to happen before you flip the automation switch.

Define roles clearly. You need someone who understands content strategy and can guide topic selection, keyword targeting, and competitive positioning. This person feeds the pipeline with strategic direction, not individual article assignments. They're thinking about content clusters, topical authority, and how each piece fits into your broader marketing objectives.

You also need an AI operations specialist who understands prompt engineering, agent configuration, and workflow troubleshooting. This isn't a traditional content role—it's more technical, requiring comfort with APIs, automation platforms, and debugging when things break. Many companies try to add this to an existing marketer's plate and wonder why their pipeline never gets off the ground.

Quality control becomes a distinct function too. Someone needs to review output samples, maintain brand voice guidelines, and catch edge cases where AI misses the mark. This person isn't editing every article—they're spot-checking, identifying patterns in quality issues, and refining agent instructions to prevent future problems.

Building Your AI Content Foundation

Before you can automate anything, you need the right infrastructure in place. Most AI content initiatives fail not because the technology isn't capable, but because teams try to build pipelines on shaky foundations—mixing incompatible tools, skipping critical setup steps, or underestimating the human oversight required even in automated systems.

Think of this phase as building the engine before you start the car. Rush it, and you'll spend months troubleshooting problems that could have been prevented in week one.

Essential Infrastructure and Tool Selection

Your tool stack determines whether your pipeline hums along smoothly or constantly breaks down. The infrastructure you select must handle everything from content brief generation to multi-channel distribution without manual intervention at every step.

Start with your content management system. You need a CMS with robust API capabilities—not just for publishing, but for retrieving data, managing workflows, and integrating with external tools. WordPress with REST API, Webflow with API access, or headless CMS platforms like Contentful all work, but they must support programmatic content operations.
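As one example of a programmatic content operation, here's a sketch that builds a request to create a draft post through the WordPress REST API (`/wp-json/wp/v2/posts`), authenticating with an application password over Basic auth. The site URL, username, and password are placeholders, the helper function is hypothetical, and the request is only constructed here, not sent.

```python
import base64
import json
import urllib.request


def build_wp_post_request(
    site_url: str,
    username: str,
    app_password: str,
    title: str,
    html_content: str,
    status: str = "draft",
) -> urllib.request.Request:
    """Build (but do not send) a request that creates a WordPress post."""
    payload = json.dumps(
        {"title": title, "content": html_content, "status": status}
    ).encode("utf-8")
    credentials = f"{username}:{app_password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(
        url=f"{site_url.rstrip('/')}/wp-json/wp/v2/posts",
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Placeholder values; replace with your own site and credentials.
request = build_wp_post_request(
    "https://example.com/",
    "pipeline-bot",
    "app-password-here",
    title="Draft from the pipeline",
    html_content="<p>Body goes here.</p>",
)
print(request.get_method(), request.full_url)
# To actually publish, pass the request to urllib.request.urlopen(request).
```

Creating posts as drafts by default keeps a human checkpoint in place until your quality gates have earned your trust.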

Next, your AI platform selection matters more than most teams realize. You're not looking for a single AI writing tool—you need a platform that supports multi-agent orchestration. This means the ability to run multiple specialized AI models simultaneously, pass data between them, and maintain context across different stages of content creation. Your pipeline must include a capable system that can produce comprehensive guides, technical documentation, and thought leadership pieces that meet the depth requirements for both SEO rankings and AI model citations.

For analytics, you need dual tracking capabilities. Traditional tools like Google Analytics and Search Console handle SEO metrics, but you also need systems that monitor AI model mentions and citations. This is where specialized AI brand visibility tools become essential: they show you when ChatGPT, Claude, or Perplexity recommend your content, which traditional analytics completely miss.

Critical Tool Categories: Content management system with API access, multi-agent AI platform, traditional SEO analytics, GEO tracking system, workflow automation platform (Zapier, Make, or n8n), project management tool with API integration, version control system, and automated quality checking tools.

The integration between these tools is what makes or breaks your pipeline. Each tool needs to communicate with the others without manual data transfer. If you're copying and pasting between platforms, your foundation isn't ready for automation.

Strategic Planning and Team Preparation

Here's the uncomfortable truth: AI doesn't eliminate the need for human expertise—it amplifies it. Your pipeline will only be as good as the humans who design the workflows, set the quality standards, and handle the exceptions that automation can't manage.

Define clear roles before you build anything. You need a content strategist who understands both SEO and GEO optimization, someone who can identify topics that perform in both traditional search and AI model recommendations. You need an AI operations specialist who understands prompt engineering, agent orchestration, and troubleshooting when workflows break. And you need quality control reviewers who can spot when AI blog content misses the mark, even if it technically meets your quality thresholds.
