Your competitor just got recommended by ChatGPT to 50,000 users asking for solutions in your category. You got mentioned zero times. And you have no idea it happened.

This isn't a far-fetched scenario. Versions of it play out every day, across every industry. While you're optimizing meta descriptions and tracking Google rankings, potential customers are asking AI models for recommendations, and those models are either mentioning your brand or they're not.

The problem? Traditional analytics can't see any of this. Google Analytics shows you website visitors. Search Console shows you rankings. But when someone asks Claude "What's the best project management tool for remote teams?" and gets a detailed recommendation that never mentions your product, that interaction leaves zero trace in your marketing dashboard.

This is the new "dark social" of marketing—a massive influence channel that's completely invisible to conventional measurement tools. Just like the early days of Google SEO, when smart brands gained unfair advantages by tracking and optimizing for search visibility, today's marketing leaders are building systematic approaches to measure and improve their AI visibility before their competitors even realize it matters.

The stakes are higher than you might think. AI models don't send users to your website to research options. They provide direct answers and recommendations. When your brand isn't mentioned, you don't just lose a click—you lose the customer entirely. They never reach your site. They never enter your funnel. They choose a competitor without ever knowing you exist.

But here's the good news: AI visibility is measurable, trackable, and improvable. You can't see inside the models any more than you can see inside a search ranking algorithm, but you can systematically test how they respond to queries in your category. You can document when your brand appears, how it's positioned, and what context surrounds those mentions. You can track competitive dynamics and identify specific opportunities to improve your visibility.

The brands that master AI visibility measurement now will dominate their categories in the AI-driven search era. The ones that ignore it will wonder why their traditional SEO success isn't translating into customer growth anymore.

This guide walks you through exactly how to measure your AI visibility—from establishing your current baseline to implementing sophisticated tracking systems that reveal competitive advantages your rivals are missing. You'll learn which metrics matter, how to collect them systematically, and how to turn raw AI responses into actionable business intelligence.

Let's walk through how to do this step by step.

Step 1: Establish Your Current AI Visibility Baseline

You can't improve what you don't measure. Before you build sophisticated tracking systems or optimize your AI presence, you need to know exactly where you stand right now. This baseline becomes your reference point for everything that follows—the benchmark that proves whether your optimization efforts are actually working.

Think of this like a brand audit, but instead of surveying customers or analyzing social media sentiment, you're systematically testing how AI models respond to queries in your category. The goal isn't perfection—it's documentation. You're creating a snapshot of your current AI visibility that you'll compare against future measurements to track progress.

Systematic Brand Mention Testing

Start by testing five distinct query categories that represent different ways potential customers might discover your brand through AI interactions. Each category reveals different aspects of your visibility profile.

Direct Brand Queries: Test how AI models respond when users explicitly ask about your brand. Try prompts like "What is [your company]?" or "Tell me about [your product]." This establishes whether AI models have basic awareness of your brand and how accurately they describe your offering.

Category-Based Queries: Test broader category searches where your brand should appear alongside competitors. Use prompts like "What are the best [your category] tools?" or "Top [your category] solutions for 2026." This reveals whether AI models consider you a relevant player in your category.

Problem-Solution Queries: Test how AI responds when users describe problems your product solves without mentioning any brands. Try "How do I [solve specific problem]?" or "What's the best way to [achieve specific outcome]?" This reveals whether AI models connect your brand to the problems it solves, even for users who have never heard of you. Tracking AI recommendations systematically across query categories like these ensures you capture not just mentions, but the recommendation patterns that actually drive customer decisions.

Comparison Queries: Test how your brand appears in competitive contexts. Use prompts like "Compare [your brand] vs [competitor]" or "Alternatives to [major competitor]." This shows whether AI models position you as a viable alternative to established players.

Recommendation Requests: Test direct recommendation scenarios. Try "What [category] tool should I use for [specific use case]?" or "Recommend a [category] solution for [specific situation]." This is the highest-value visibility: the AI proactively recommends your brand even though the user never named it.

For each query type, test the same prompt 3-5 times across different sessions. AI model responses are generated with some randomness and can also shift with session context and model updates, so you need to understand whether your visibility is consistent or sporadic. Document not just whether your brand appears, but where it appears in the response, what context surrounds the mention, and how the AI describes your offering.

Create a simple spreadsheet with columns for query type, specific prompt, AI model tested, mention presence (yes/no), position in response (1st, 2nd, 3rd, etc.), and context notes. This systematic documentation transforms subjective impressions into measurable data you can track over time.
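
If you'd rather keep the log in code than in a spreadsheet, a minimal sketch might look like the following. It assumes Python 3.9+ and uses only the standard library; the `QueryResult` class and `to_csv` helper are hypothetical names, "Acme" is a made-up brand, and the columns simply mirror the ones listed above. The resulting CSV opens in any spreadsheet tool.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List, Optional


@dataclass
class QueryResult:
    """One row of the baseline log (hypothetical schema mirroring the spreadsheet columns)."""
    query_type: str          # e.g. "category", "comparison"
    prompt: str              # exact prompt wording used
    model: str               # e.g. "ChatGPT", "Claude"
    mentioned: bool          # did the brand appear at all?
    position: Optional[int]  # 1 = first brand named; None if not mentioned
    context_notes: str       # free-text notes on how the brand was described


def to_csv(rows: List[QueryResult]) -> str:
    """Serialize results to CSV text, writing yes/no for mentions and a blank position when absent."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(QueryResult)])
    writer.writeheader()
    for row in rows:
        record = asdict(row)
        record["mentioned"] = "yes" if row.mentioned else "no"
        record["position"] = "" if row.position is None else row.position
        writer.writerow(record)
    return buf.getvalue()


if __name__ == "__main__":
    rows = [
        QueryResult("category", "What are the best project management tools?",
                    "ChatGPT", True, 2, "listed after a competitor"),
        QueryResult("direct", "What is Acme?", "Claude", False, None, ""),
    ]
    print(to_csv(rows))
```

Appending each month's rows to the same file keeps the history in one place for the comparisons later in this guide.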

Competitive Visibility Assessment

Your visibility exists in context—specifically, in competitive context. Understanding how competitors appear across the same queries reveals both threats and opportunities in your AI visibility strategy.

Identify 5-7 direct competitors and run them through the exact same prompt library you used for your brand. This comparative analysis reveals critical insights: which competitors dominate AI recommendations, which queries trigger competitive mentions, and where gaps exist in the competitive landscape.
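
For category, problem-solution, and recommendation prompts, a single set of responses can be scored for every brand at once. A minimal sketch of that comparison, assuming you've saved the raw response texts and maintain your own list of brand names (Acme, Globex, and Initech below are hypothetical), counts the share of responses that name each brand:

```python
import re
from typing import Dict, List


def mention_rates(responses: List[str], brands: List[str]) -> Dict[str, float]:
    """Share of responses (0-1) naming each brand, using a case-insensitive whole-word match.

    Whole-word matching assumes brand names start and end with a letter or digit;
    names ending in symbols would need a different pattern.
    """
    rates = {}
    for brand in brands:
        pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
        hits = sum(1 for text in responses if pattern.search(text))
        rates[brand] = hits / len(responses) if responses else 0.0
    return rates


responses = [
    "Top picks: Globex, Acme, and Initech.",
    "I'd recommend Globex for remote teams.",
    "acme is a solid budget option.",
    "Consider Initech or Globex.",
]

# Rank brands from most to least visible across the shared responses.
ranked = sorted(mention_rates(responses, ["Acme", "Globex", "Initech"]).items(),
                key=lambda item: item[1], reverse=True)
for brand, rate in ranked:
    print(f"{brand}: {rate:.0%}")
```

Direct-brand and comparison prompts name a specific brand, so those still need to be run separately for each competitor.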

AI brand visibility tracking tools can automate this competitive monitoring, letting you scale your analysis beyond manual testing while keeping your measurement methodology consistent.

Step 2: Build Your AI Visibility Measurement Framework

Raw observations need structure. You've tested queries and documented responses—now you need a systematic framework that transforms those individual data points into meaningful intelligence you can act on.

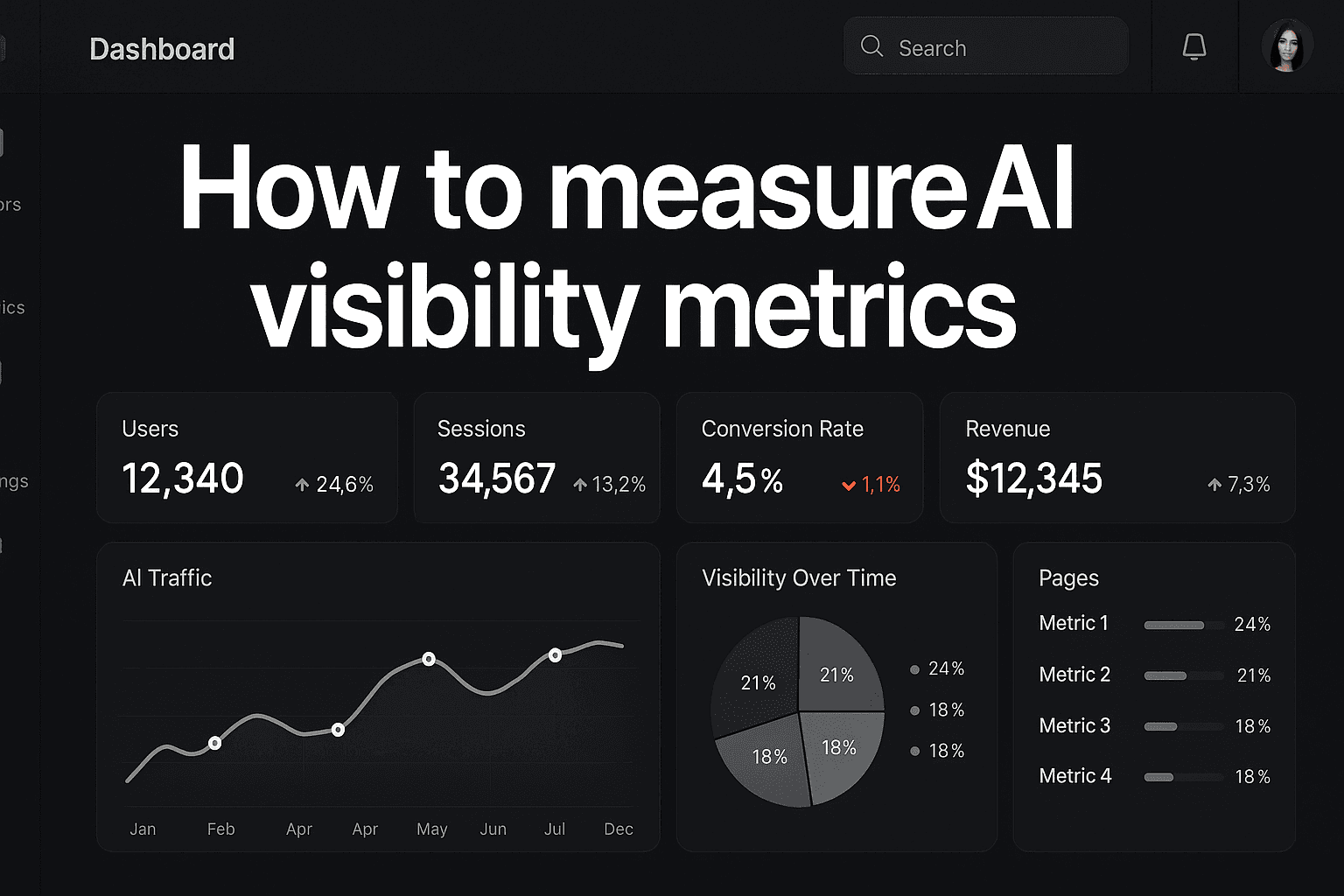

Think of this like building a marketing analytics dashboard. Individual metrics mean little in isolation, but when you organize them into a coherent framework, patterns emerge that reveal exactly where to focus your optimization efforts.

Your measurement framework should capture three dimensions: visibility breadth (how many queries trigger mentions), visibility depth (how prominently you appear), and visibility quality (how favorably you're positioned). Together, these dimensions create a complete picture of your AI presence.

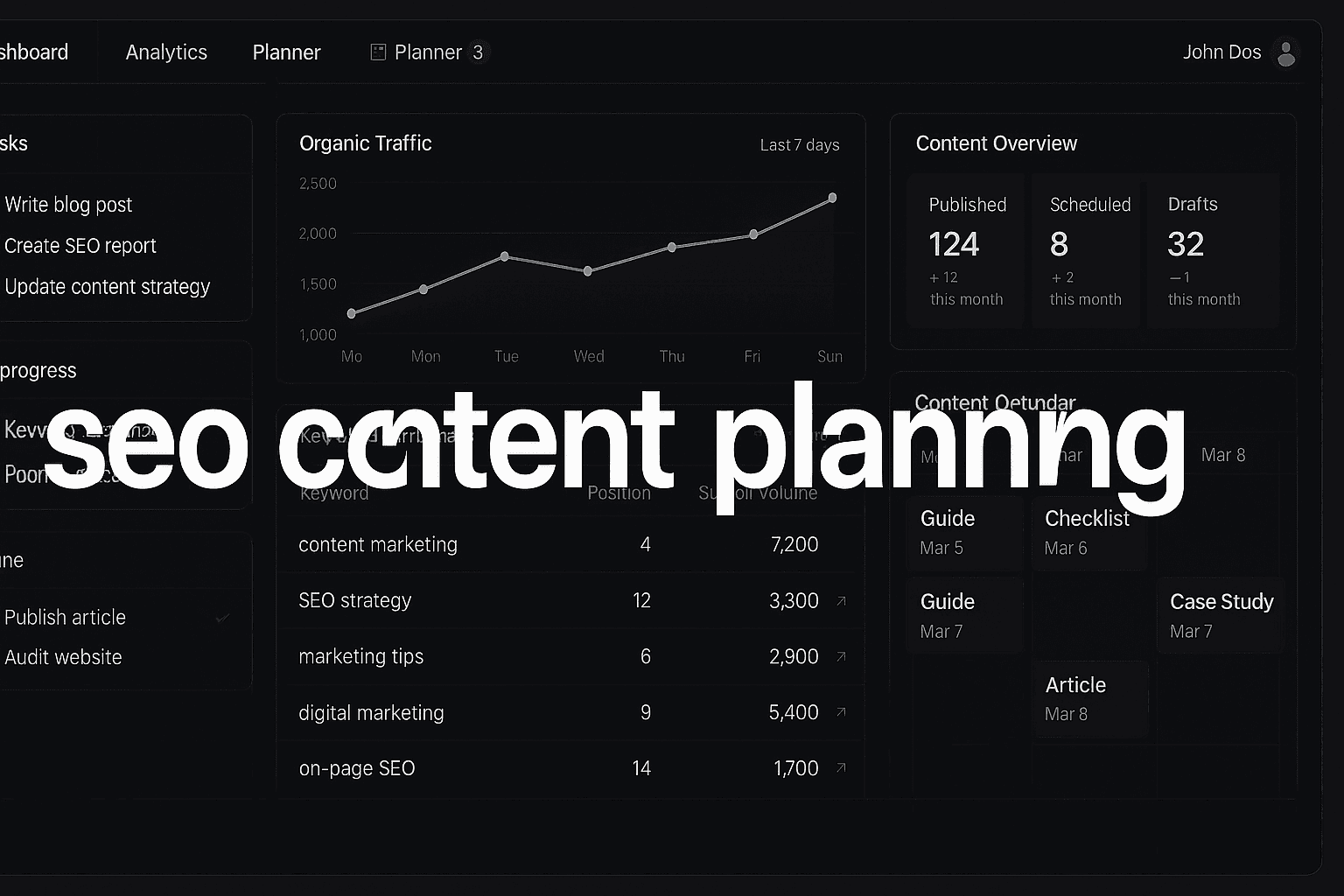

Start by establishing a standardized prompt library—the consistent set of queries you'll test repeatedly over time. This library should include 20-30 prompts across the five query categories we discussed: direct brand queries, category searches, problem-solution queries, comparison requests, and recommendation scenarios.

The key is consistency. Use identical prompts in every measurement cycle so you're comparing apples to apples. When you test "best project management tools for remote teams" in January and again in March, you need the exact same wording to identify meaningful changes in AI responses.
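
One way to enforce identical wording is to generate the library from fixed templates and fingerprint it, so any accidental edit between cycles shows up as a changed hash. This is a sketch under assumptions: the templates and variable values below are hypothetical placeholders (Acme and Globex are made-up brands), and a real library would hold 20-30 prompts rather than seven.

```python
import hashlib
from typing import Dict, List, Tuple

# Hypothetical templates, one or two per query category from Step 1.
PROMPT_TEMPLATES: Dict[str, List[str]] = {
    "direct": ["What is {brand}?", "Tell me about {product}."],
    "category": ["What are the best {category} tools?"],
    "problem_solution": ["How do I {problem}?"],
    "comparison": ["Compare {brand} vs {competitor}", "Alternatives to {competitor}"],
    "recommendation": ["What {category} tool should I use for {use_case}?"],
}

# Hypothetical values; keep these fixed between measurement cycles.
VARIABLES = {
    "brand": "Acme",
    "product": "Acme Boards",
    "category": "project management",
    "problem": "keep a remote team on schedule",
    "competitor": "Globex",
    "use_case": "remote teams",
}


def build_prompt_library(templates: Dict[str, List[str]],
                         variables: Dict[str, str]) -> List[Tuple[str, str]]:
    """Render every template, returning (query_category, prompt) pairs in a stable order."""
    library = []
    for query_category in sorted(templates):
        for template in templates[query_category]:
            library.append((query_category, template.format(**variables)))
    return library


def library_fingerprint(library: List[Tuple[str, str]]) -> str:
    """Short SHA-256 digest of the exact prompt wording; any change produces a new value."""
    joined = "\n".join(f"{cat}\t{prompt}" for cat, prompt in library)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()[:12]


library = build_prompt_library(PROMPT_TEMPLATES, VARIABLES)
print(library_fingerprint(library), len(library), "prompts")
```

Storing the fingerprint alongside each cycle's results makes the comparison check explicit: if the January and March fingerprints differ, the wording changed and the two cycles aren't directly comparable.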

Document your testing protocol with precision: which AI models you'll test (ChatGPT, Claude, Perplexity, Gemini), how many times you'll run each prompt (3-5 iterations recommended), and how you'll space those runs (for example, a fresh session for every run and some time between repeats, so earlier conversation context doesn't shape later responses).
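
The protocol itself can be written down as data, which makes it easy to rerun unchanged. In the minimal sketch below, `query_model` is a hypothetical function you would supply yourself (wrapping whichever client you use for each AI model, ideally opening a fresh session per call); nothing here is a real vendor API. The loop completes a full pass over every model and prompt before starting the next iteration, so repeats of the same prompt are spread out rather than sent back to back.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Protocol:
    models: Tuple[str, ...] = ("ChatGPT", "Claude", "Perplexity", "Gemini")
    iterations: int = 3          # 3-5 runs per prompt
    pause_seconds: float = 0.0   # optional wait between passes (hypothetical value)


def run_protocol(protocol: Protocol, prompts: List[str],
                 query_model: Callable[[str, str], str]) -> List[Dict]:
    """Collect raw responses; query_model(model, prompt) -> response text is supplied by you."""
    results = []
    for run in range(1, protocol.iterations + 1):
        for model in protocol.models:
            for prompt in prompts:
                results.append({"run": run, "model": model, "prompt": prompt,
                                "response": query_model(model, prompt)})
        if protocol.pause_seconds and run < protocol.iterations:
            time.sleep(protocol.pause_seconds)  # space out the passes
    return results


# Usage with a stand-in function instead of real model calls:
def fake_query(model: str, prompt: str) -> str:
    return f"{model} answer to: {prompt}"


results = run_protocol(Protocol(models=("ChatGPT", "Claude"), iterations=3),
                       ["best project management tools for remote teams"], fake_query)
print(len(results), "responses collected")
```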

For brands serious about systematic measurement, an AI visibility analytics dashboard can centralize this data collection and analysis, turning manual tracking into automated intelligence gathering.

Step 3: Implement Core AI Visibility Metrics

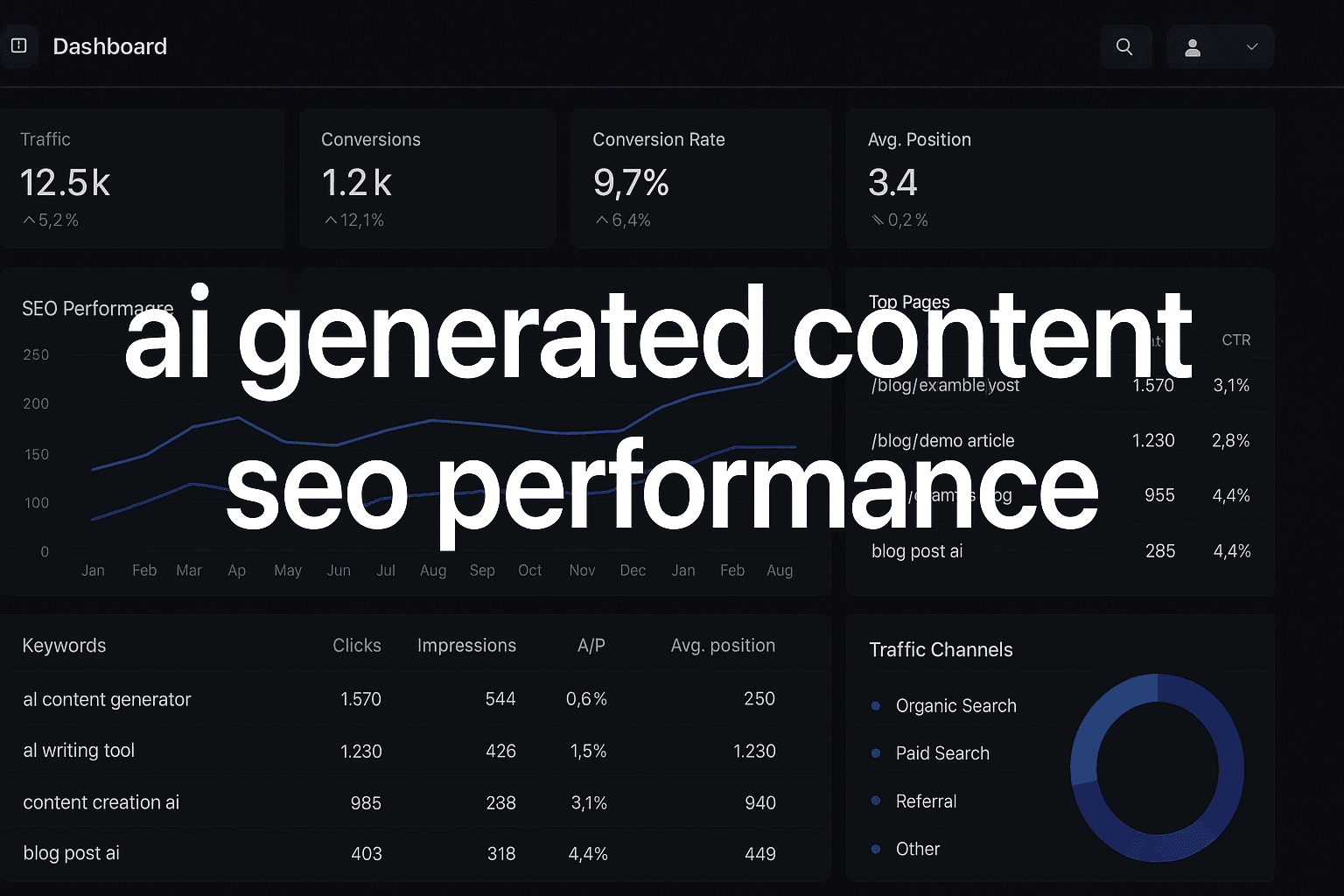

Raw AI responses mean nothing until you transform them into measurable data. You've tested prompts and documented results—now it's time to establish the specific metrics that turn those observations into actionable business intelligence.

Think of this like moving from anecdotal customer feedback to systematic NPS scoring. Individual responses tell you something, but consistent metrics reveal patterns, trends, and opportunities you'd otherwise miss.

Essential AI Visibility Metrics Framework

Effective measurement requires tracking multiple dimensions beyond simple "Did my brand get mentioned?" Start with these five core metrics that capture the full picture of your AI visibility:

Mention Frequency Score (0-10): How often your brand appears across your test prompt library. If you test 20 prompts and your brand appears in 14 responses, your mention frequency is 7/10 (equivalently, 70%). This baseline metric reveals your overall visibility footprint.

Position Ranking (1-10+): Where your brand appears in AI responses matters enormously. First mention carries significantly more weight than fifth mention. Track average position across all mentions—a brand mentioned first in 3 responses and third in 2 responses has an average position of 1.8.
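
Position can be extracted automatically if you keep a list of the brands you care about. A rough sketch, assuming "position" simply means the order in which brands are first named in the response text (a plain substring match, so a short brand name could also match inside a longer word), with hypothetical brand names:

```python
from typing import Dict, List


def brand_positions(response: str, brands: List[str]) -> Dict[str, int]:
    """Rank brands by where each is first named in the response (1 = first); unnamed brands are omitted."""
    lowered = response.lower()
    first_seen = {}
    for brand in brands:
        index = lowered.find(brand.lower())
        if index != -1:
            first_seen[brand] = index
    ordered = sorted(first_seen, key=first_seen.get)
    return {brand: rank for rank, brand in enumerate(ordered, start=1)}


def average_position(positions: List[int]) -> float:
    """Mean position across all responses that mentioned the brand."""
    return sum(positions) / len(positions)


print(brand_positions("Globex leads the pack, then Acme, then Initech.",
                      ["Acme", "Globex", "Initech", "Hooli"]))
# The example above: first in 3 responses, third in 2.
print(average_position([1, 1, 1, 3, 3]))  # -> 1.8
```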

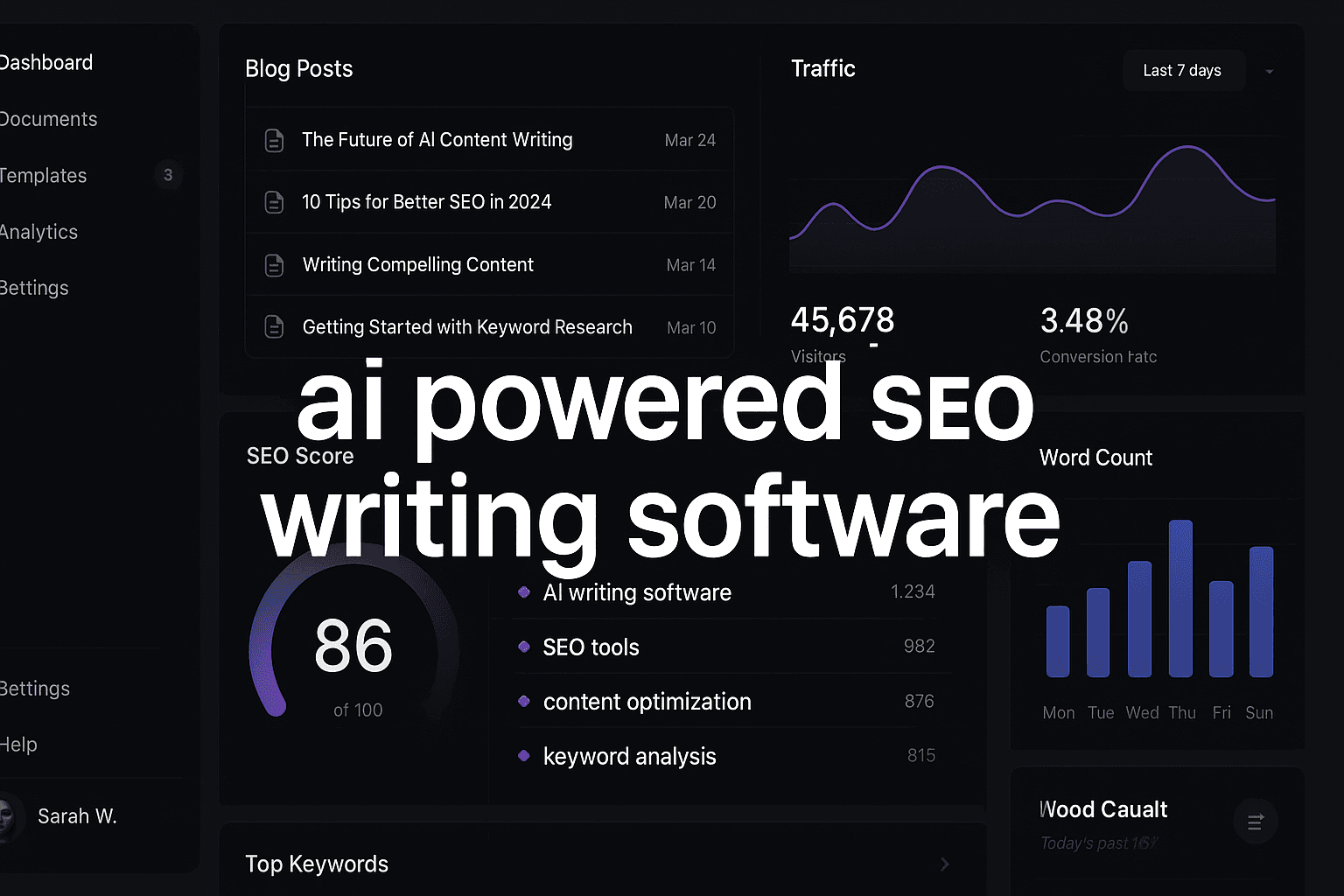

Context Quality Score (1-5): Not all mentions are created equal. Score each mention: 5 for enthusiastic recommendations, 4 for positive mentions, 3 for neutral inclusions, 2 for qualified recommendations ("good but expensive"), 1 for cautionary mentions. For citation-based AI models like Perplexity, tracking which sources get cited adds another dimension to your metrics framework, since citations show which sources the model treats as authoritative and credible.

Response Consistency (0-100%): AI models don't always give identical answers to the same prompt. Test each prompt 3-5 times and calculate consistency. If your brand appears in 4 out of 5 tests, that's 80% consistency—revealing how reliably AI models recommend you.

Category Association Strength: How strongly do AI models connect your brand to relevant categories? Track which category terms trigger your brand mentions. If "project management software" mentions you 70% of the time but "team collaboration tools" only 20%, you've identified an association gap worth addressing.
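
Category association strength reduces to a mention rate per category term. A minimal sketch, assuming each test is logged as a (category term, mentioned?) pair; the 50% gap threshold is an arbitrary illustrative cutoff, not a standard:

```python
from typing import Dict, List, Tuple


def association_rates(results: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Mention rate (0-1) for each category term across all logged tests."""
    totals: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for term, mentioned in results:
        totals[term] = totals.get(term, 0) + 1
        hits[term] = hits.get(term, 0) + int(mentioned)
    return {term: hits[term] / totals[term] for term in totals}


def association_gaps(rates: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Category terms where the brand is mentioned less often than the (hypothetical) threshold."""
    return sorted(term for term, rate in rates.items() if rate < threshold)


# Mirrors the example above: 7 of 10 vs. 2 of 10 tests mention the brand.
log = [("project management software", i < 7) for i in range(10)]
log += [("team collaboration tools", i < 2) for i in range(10)]
rates = association_rates(log)
print(rates)
print(association_gaps(rates))
```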

Here's how this works in practice: You test "best CRM software for small businesses" five times in ChatGPT. Your brand appears in 4 of the 5 responses (response consistency: 80%; for a single prompt, that is the same as a mention frequency of 8/10). It ranks second in 3 responses and third in 1 (average position: (2 + 2 + 2 + 3) / 4 = 2.25), and it is described enthusiastically in two mentions and positively in the other two (context quality: (5 + 5 + 4 + 4) / 4 = 4.5/5). These metrics tell a clear story: strong visibility with room for position improvement.
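
The same arithmetic as a small function, as a sketch. It assumes each run is logged as either `None` (brand not mentioned) or a (position, context score) pair; the scores 5, 4, 5, 4 are illustrative values that average to the 4.5 in the example. Because this covers a single prompt, mention frequency and response consistency come from the same runs; across a full prompt library you would compute them separately.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

Run = Optional[Tuple[int, int]]  # None = not mentioned, else (position, context score 1-5)


def summarize(runs: List[Run]) -> Dict[str, Optional[float]]:
    """Compute the core metrics for a list of logged runs."""
    mentions = [r for r in runs if r is not None]
    return {
        "mention_frequency_0_10": round(len(mentions) * 10 / len(runs), 1),
        "response_consistency_pct": round(len(mentions) * 100 / len(runs)),
        "average_position": mean(p for p, _ in mentions) if mentions else None,
        "context_quality": mean(q for _, q in mentions) if mentions else None,
    }


# Five runs of "best CRM software for small businesses" in ChatGPT:
# second (enthusiastic), second (positive), third (enthusiastic), absent, second (positive).
runs: List[Run] = [(2, 5), (2, 4), (3, 5), None, (2, 4)]
print(summarize(runs))
```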

The key is consistency in scoring criteria. Develop a simple rubric for context quality so different team members score identically. Subjective metrics become reliable when you standardize the evaluation framework.
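
To check whether the rubric is actually producing identical scores, have two team members score the same set of mentions independently and compare. The sketch below uses simple percent agreement as that check (an illustrative choice; chance-corrected statistics such as Cohen's kappa are stricter), with the rubric levels taken from the Context Quality Score definition above.

```python
from typing import Sequence

# Rubric levels from the Context Quality Score definition.
CONTEXT_RUBRIC = {
    5: "enthusiastic recommendation",
    4: "positive mention",
    3: "neutral inclusion",
    2: "qualified recommendation (e.g. 'good but expensive')",
    1: "cautionary mention",
}


def percent_agreement(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Fraction of mentions (0-1) that two raters scored identically."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty score lists of equal length")
    for score in (*rater_a, *rater_b):
        if score not in CONTEXT_RUBRIC:
            raise ValueError(f"score {score} is not a rubric level")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


print(percent_agreement([5, 4, 3, 2], [5, 4, 4, 2]))
```

If agreement is low, tightening each rubric level with example phrases and re-scoring is usually the quickest fix.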

Sentiment and Context Analysis System

Understanding how AI models position your brand reveals opportunities that raw mention counts miss entirely. Two brands might both appear in 70% of category queries, but if one is described as "the industry leader" while the other is mentioned as "a budget alternative," their actual visibility value differs dramatically.

Develop a sentiment classification system for every brand mention. Use a simple three-tier framework: positive (enthusiastic recommendation, highlighted strengths, positioned as top choice), neutral (included in lists without strong positioning, factual description), or negative (qualified recommendation, highlighted limitations, positioned as inferior alternative).
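
If you're already scoring context quality on the 1-5 scale, the three tiers can be derived from it rather than judged separately. A minimal sketch, assuming scores of 4-5 count as positive, 3 as neutral, and 1-2 as negative (a mapping that follows the two rubrics as described here but isn't the only reasonable one):

```python
from collections import Counter
from typing import Dict, Iterable


def sentiment_tier(context_score: int) -> str:
    """Map a 1-5 context quality score onto the positive / neutral / negative tiers."""
    if context_score not in range(1, 6):
        raise ValueError("context score must be between 1 and 5")
    if context_score >= 4:
        return "positive"
    if context_score == 3:
        return "neutral"
    return "negative"


def tier_shares(scores: Iterable[int]) -> Dict[str, float]:
    """Share (0-1) of mentions falling in each sentiment tier."""
    counts = Counter(sentiment_tier(s) for s in scores)
    total = sum(counts.values())
    tiers = ("positive", "neutral", "negative")
    if total == 0:
        return {tier: 0.0 for tier in tiers}
    return {tier: counts[tier] / total for tier in tiers}


print(tier_shares([5, 4, 4, 3, 2]))
```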

Beyond sentiment, analyze the specific context surrounding mentions. Does the AI model describe your brand in the first paragraph or bury it in a comprehensive list? Does it lead with your strengths or your limitations? Does it position you as suitable for specific use cases or as a general solution?
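
Part of that context check can be automated. This rough sketch, assuming responses come back as plain text or Markdown with blank lines between paragraphs and "-", "*", or "1." style list markers, reports which paragraph first names a brand and whether that mention sits inside a list. It's a heuristic rather than a parser, and the brand names are hypothetical.

```python
import re
from typing import Dict, Optional

# Lines starting with "-", "*", a bullet character, or "1." / "1)" are treated as list items (heuristic).
LIST_ITEM = re.compile(r"^\s*(?:[-*\u2022]|\d+[.)])\s")


def mention_location(response: str, brand: str) -> Optional[Dict[str, object]]:
    """Return the paragraph (1-based) of the first mention, the paragraph count, and list membership."""
    paragraphs = [p for p in re.split(r"\n\s*\n", response) if p.strip()]
    for number, paragraph in enumerate(paragraphs, start=1):
        for line in paragraph.splitlines():
            if brand.lower() in line.lower():
                return {"paragraph": number, "of": len(paragraphs),
                        "in_list": bool(LIST_ITEM.match(line))}
    return None  # brand not mentioned


answer = ("For remote teams, Globex is the usual pick.\n\n"
          "Other options:\n- Initech\n- Acme, a budget choice")
print(mention_location(answer, "Globex"))
print(mention_location(answer, "Acme"))
```

A brand that keeps landing in the last paragraph of a list, as "Acme" does here, is mentioned but buried.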

This contextual analysis often reveals optimization opportunities invisible in raw metrics. You might discover that AI models consistently mention your brand for enterprise use cases but never for small businesses, even though your product serves both markets. That insight tells you exactly where to focus your AI search visibility efforts to capture underserved segments.

Step 4: Establish Competitive Benchmarking Protocols

Your visibility metrics mean little without competitive context. A 60% mention frequency sounds mediocre until you discover your top competitor only achieves 45%. A third-place average ranking feels disappointing until you realize the category leader averages second place, which means you're closer than you thought.

Competitive benchmarking transforms absolute metrics into relative performance indicators that reveal your true market position and identify specific opportunities to gain ground on rivals.

Start by identifying your competitive set—the 5-7 brands that AI models most frequently mention alongside yours in category queries. These aren't necessarily your traditional competitors. AI models might group you with brands you've never considered competitive, revealing how the AI ecosystem categorizes your market differently than humans do.
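
Finding that competitive set can start from the responses you've already collected: among the responses that mention your brand, count which other brands appear most often. This is a sketch assuming you supply the candidate brand names yourself (plain-text matching can't discover brands it hasn't been told about), with hypothetical names throughout:

```python
from collections import Counter
from typing import List, Tuple


def co_mentioned(responses: List[str], your_brand: str,
                 candidates: List[str], top_n: int = 7) -> List[Tuple[str, int]]:
    """Brands most often named in the same response as your_brand, with their counts."""
    counts: Counter = Counter()
    for text in responses:
        lowered = text.lower()
        if your_brand.lower() not in lowered:
            continue  # only responses that include your brand are relevant here
        for brand in candidates:
            if brand.lower() != your_brand.lower() and brand.lower() in lowered:
                counts[brand] += 1
    return counts.most_common(top_n)


responses = [
    "Acme, Globex and Initech are popular choices.",
    "Globex or Acme both work well for small teams.",
    "Initech is great for enterprises.",
    "Try Acme or Hooli if budget matters.",
]
print(co_mentioned(responses, "Acme", ["Globex", "Initech", "Hooli"]))
```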

Run your complete prompt library against each competitor, documenting the same metrics you track for your own brand: mention frequency, average position, context quality, response consistency, and category associations. This creates a competitive visibility matrix that shows exactly where you stand relative to rivals.

Pay special attention to competitive positioning patterns. When AI models mention both you and a competitor in the same response, how do they differentiate you? Do they position you as the premium option and the competitor as the budget choice? Do they recommend you for specific use cases and the competitor for others? These positioning patterns reveal how AI models understand your competitive differentiation.

Monitoring AI model responses across competitive contexts shows you not just where you appear, but how your positioning compares to alternatives in AI-mediated decision-making.

Step 5: Create Systematic Tracking and Reporting Systems

One-time measurement tells you where you stand today. Systematic tracking reveals trends, validates optimization efforts, and identifies emerging threats before they become critical problems.

The difference between brands that succeed in AI visibility and those that struggle often comes down to measurement discipline. Successful brands treat AI visibility tracking like they treat Google Analytics—as an ongoing practice, not a one-time audit.

Establish a measurement cadence that balances thoroughness with practicality. Monthly tracking works well for most brands—frequent enough to catch meaningful changes, infrequent enough to avoid overwhelming your team. Test your complete prompt library across all target AI models, document results using your standardized metrics, and compare against previous months to identify trends.

Create a simple tracking dashboard that visualizes key metrics over time. Track mention frequency trends, average position changes, context quality evolution, and competitive dynamics. When you can see that your mention frequency increased from 45% to 62% over three months while your top competitor declined from 71% to 68%, you have concrete evidence that your visibility is improving, and a strong signal that your optimization efforts are contributing.
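
Behind the dashboard, the trend view is just a per-brand difference between measurement cycles. A minimal sketch using the figures from the example above, measured in percentage points; the February and March values are made up for illustration:

```python
from typing import Dict, List, Tuple

History = List[Tuple[str, Dict[str, float]]]  # (month, {brand: mention frequency in %}), oldest first


def change_over_period(history: History) -> Dict[str, float]:
    """Percentage-point change per brand between the first and last cycle."""
    _, first = history[0]
    _, last = history[-1]
    return {brand: last[brand] - first[brand] for brand in first if brand in last}


def monthly_deltas(history: History, brand: str) -> List[Tuple[str, float]]:
    """Month-over-month change in percentage points for one brand."""
    return [(month, values[brand] - prev_values[brand])
            for (_, prev_values), (month, values) in zip(history, history[1:])]


history: History = [
    ("January", {"You": 45, "Top competitor": 71}),
    ("February", {"You": 50, "Top competitor": 70}),  # illustrative values
    ("March", {"You": 56, "Top competitor": 69}),     # illustrative values
    ("April", {"You": 62, "Top competitor": 68}),
]
print(change_over_period(history))
print(monthly_deltas(history, "You"))
```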

Build alert systems for significant changes. If your mention frequency falls 20% or more below the previous month's level, investigate immediately. If a competitor suddenly appears in queries where they were previously absent, that signals a competitive threat worth analyzing. If AI models start describing your brand with negative qualifiers they didn't use before, you've identified a reputation issue requiring attention.
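
Those three alert conditions can be encoded as simple checks run after each monthly cycle. In this sketch the snapshot format is hypothetical, and "a 20% drop" is interpreted as a relative decline from the previous month (so 60% falling to 48% triggers it); adjust the check if you mean percentage points.

```python
from typing import Dict, List


def visibility_alerts(prev: Dict, curr: Dict, drop_threshold: float = 0.20) -> List[str]:
    """Compare two monthly snapshots and return human-readable alerts.

    Each snapshot is a dict with:
      "mention_rate": float (0-1)
      "competitors": set of competitor brands seen in responses
      "qualifiers": set of negative phrases used about your brand
    """
    alerts = []
    if prev["mention_rate"] > 0:
        # Relative change, rounded so an exact 20% drop isn't missed due to float error.
        change = round((curr["mention_rate"] - prev["mention_rate"]) / prev["mention_rate"], 4)
        if change <= -drop_threshold:
            alerts.append(f"mention frequency fell {abs(change):.0%} month over month")
    for brand in sorted(curr["competitors"] - prev["competitors"]):
        alerts.append(f"new competitor in responses: {brand}")
    for phrase in sorted(curr["qualifiers"] - prev["qualifiers"]):
        alerts.append(f"new negative qualifier: {phrase!r}")
    return alerts


march = {"mention_rate": 0.60, "competitors": {"Globex"}, "qualifiers": set()}
april = {"mention_rate": 0.48, "competitors": {"Globex", "Hooli"},
         "qualifiers": {"outdated interface"}}
for alert in visibility_alerts(march, april):
    print(alert)
```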

For teams managing AI visibility at scale, adding Perplexity AI brand tracking to broader AI model monitoring extends coverage across the AI ecosystem, so significant visibility changes are far less likely to go unnoticed.

Stop guessing how AI models like ChatGPT and Claude talk about your brand—get visibility into every mention, track content opportunities, and automate your path to organic traffic growth. Start tracking your AI visibility today and see exactly where your brand appears across top AI platforms.