You just searched for your brand in ChatGPT. The results? Three of your competitors mentioned by name, complete with glowing descriptions of their features and benefits. Your company? Nowhere to be found.

This isn't a hypothetical scenario. It's happening right now to brands across every industry as AI language models become the new front door to customer research and decision-making.

While you've been optimizing for Google rankings and tracking traditional SEO metrics, an entirely new visibility landscape has emerged—one where AI assistants like ChatGPT, Claude, and Perplexity are shaping purchasing decisions through conversational recommendations. The problem? Most brands have no idea whether they're being mentioned, how they're being positioned, or why competitors are winning these critical AI-powered conversations.

The stakes couldn't be higher. When a potential customer asks an AI assistant for software recommendations, vendor comparisons, or solution suggestions, the brands that appear in those responses capture mindshare at the most crucial moment—the research phase. The brands that don't exist in these conversations simply don't exist in the buyer's consideration set.

Unlike traditional search where you can track rankings and optimize for specific positions, AI visibility operates on different principles. You're either mentioned or you're not. You're recommended in the right context or you're invisible. And without systematic monitoring, you're flying completely blind.

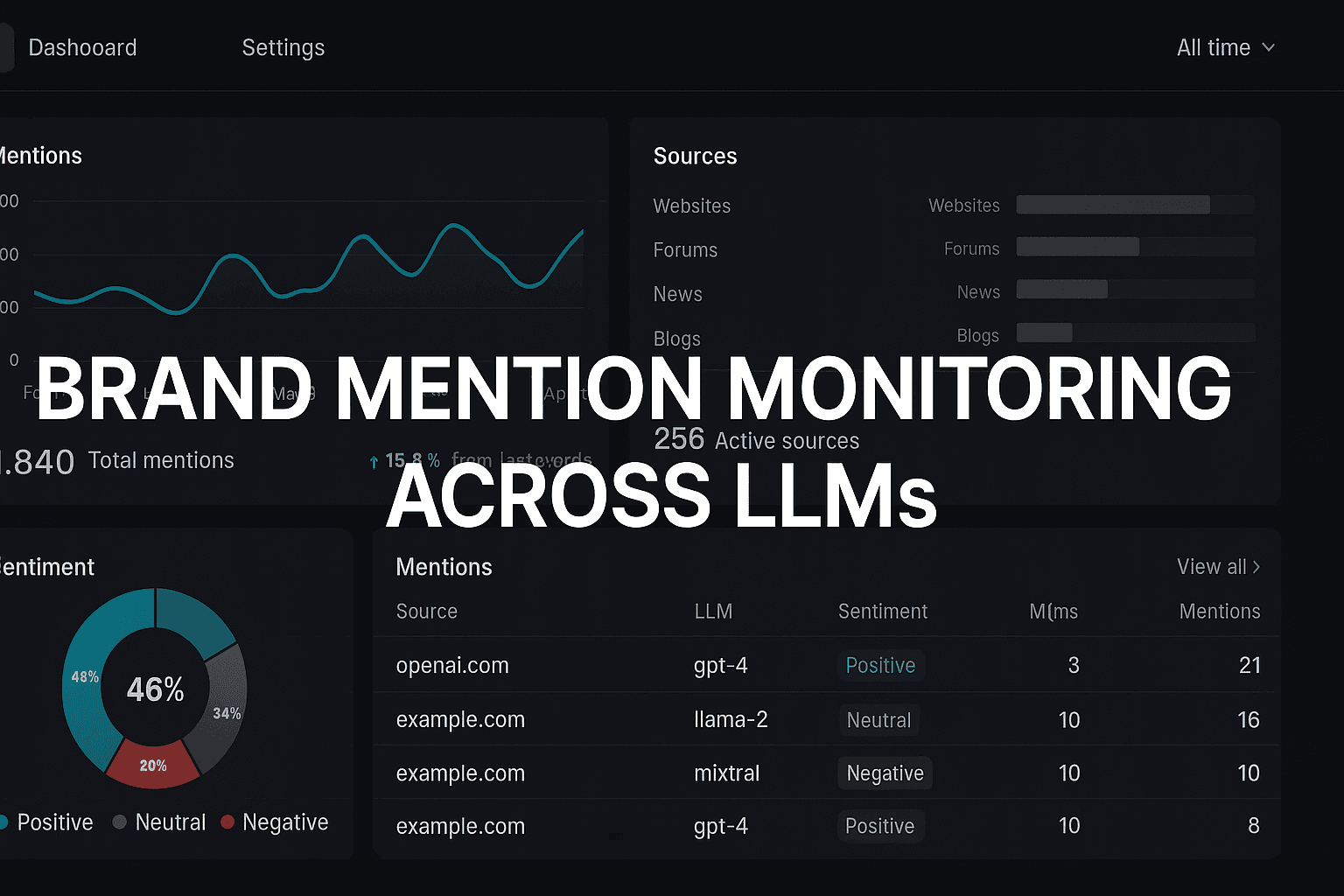

This guide walks you through building a comprehensive brand mention monitoring system across all major LLM platforms. You'll learn how to establish your current AI footprint, create repeatable monitoring protocols, implement cross-platform tracking, and analyze the quality and context of every mention. By the end, you'll have a complete system for understanding and optimizing your brand's presence in AI-powered conversations.

The brands that master AI visibility monitoring today will dominate tomorrow's market conversations. Let's walk through how to build this monitoring system step-by-step.

Step 1: Mapping Your Current AI Footprint

Before you can improve your AI visibility, you need to understand where you stand right now. This baseline assessment reveals the current state of your brand's presence across major LLM platforms—and more importantly, identifies the gaps where competitors are winning conversations you're missing entirely.

Start with three widely used platforms: ChatGPT, Claude, and Perplexity. Each is built on different models and data sources, so recommendation patterns can differ noticeably from one platform to the next.

Conducting Your Initial Platform Audit

Open each platform in a separate browser tab and begin with direct brand queries. Type your company name exactly as customers would search for it. Document the complete response—does the AI recognize your brand? How does it describe your offerings? What context does it provide?

The real insights come from category-based queries. Ask "What are the best [your product category] tools?" or "Which companies offer [your service]?" These queries reveal whether you appear in recommendation lists alongside competitors. If three competitors get mentioned while you're invisible, you've identified a critical visibility gap.
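When you paste responses into your log, a small script can make the yes/no call the same way every time. The sketch below assumes you keep a list of your brand name and the competitor names you care about; "Acme PM", "TaskFlow", "Board.ly", and "PlanRight" are hypothetical placeholders. It does case-insensitive, whole-name matching, so misspellings and abbreviations count only if you list them as separate names.

```python
import re


def find_mentions(response: str, names: list[str]) -> dict[str, bool]:
    """Report whether each brand name appears in an AI response.

    Matching is case-insensitive and requires the name to stand alone, so
    "Acme" does not match inside "Acmeville". Aliases must be listed separately.
    """
    found = {}
    for name in names:
        # re.escape makes "." in "Board.ly" match a literal dot.
        # (?<!\w) and (?!\w) behave like word boundaries but also handle
        # names that begin or end with punctuation.
        pattern = r"(?<!\w)" + re.escape(name) + r"(?!\w)"
        found[name] = re.search(pattern, response, flags=re.IGNORECASE) is not None
    return found


# Hypothetical brand names used for illustration only.
response = "TaskFlow and Board.ly are popular choices; acme pm is a newer option."
print(find_mentions(response, ["Acme PM", "TaskFlow", "Board.ly", "PlanRight"]))
# {'Acme PM': True, 'TaskFlow': True, 'Board.ly': True, 'PlanRight': False}
```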

Test query variations to understand context-dependent visibility. Try "affordable [category] solutions," "enterprise [category] platforms," and "best [category] for small businesses." Your brand might appear in some contexts but not others, revealing specific positioning weaknesses.
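To keep these variations identical every time you rerun the audit, you can generate them from templates instead of retyping them. This sketch fills in the query patterns from this section; the function name and the example category are illustrative.

```python
def build_audit_queries(category: str, service: str) -> list[str]:
    """Expand the audit query patterns for one product category.

    `category` fills the [your product category] slot and `service`
    fills the [your service] slot.
    """
    templates = [
        "What are the best {category} tools?",
        "Which companies offer {service}?",
        "affordable {category} solutions",
        "enterprise {category} platforms",
        "best {category} for small businesses",
    ]
    # str.format ignores keyword arguments that a template doesn't use.
    return [t.format(category=category, service=service) for t in templates]


for query in build_audit_queries("project management", "project management software"):
    print(query)
```

Because the templates live in one list, adding a new context (for example, a specific industry) means adding one line rather than remembering to type it on every platform.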

Creating Your Baseline Documentation

Build a simple spreadsheet with columns for Platform, Query Type, Your Brand Mentioned (Yes/No), Competitors Mentioned, Sentiment (Positive/Neutral/Negative), and Context. This structure enables pattern recognition across platforms and query types.
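If you'd rather keep the baseline as a file any spreadsheet app can open, a CSV with the same six columns works. The sketch below is one way to produce it with Python's standard `csv` module; the `BaselineRow` type and the sample values are illustrative assumptions, and the brand names are hypothetical.

```python
import csv
import io
from dataclasses import dataclass, field

SENTIMENTS = {"Positive", "Neutral", "Negative"}
HEADER = ["Platform", "Query Type", "Your Brand Mentioned",
          "Competitors Mentioned", "Sentiment", "Context"]


@dataclass
class BaselineRow:
    platform: str
    query_type: str
    brand_mentioned: bool
    competitors_mentioned: list[str] = field(default_factory=list)
    sentiment: str = "Neutral"
    context: str = ""

    def __post_init__(self) -> None:
        # Reject typos such as "positive " so later tallies stay accurate.
        if self.sentiment not in SENTIMENTS:
            raise ValueError(f"unknown sentiment: {self.sentiment!r}")


def baseline_csv(rows: list[BaselineRow]) -> str:
    """Render rows as CSV text using the baseline spreadsheet's columns."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(HEADER)
    for r in rows:
        writer.writerow([r.platform, r.query_type,
                         "Yes" if r.brand_mentioned else "No",
                         "; ".join(r.competitors_mentioned),
                         r.sentiment, r.context])
    return buf.getvalue()


rows = [BaselineRow("Claude", "Direct", True, ["TaskFlow", "Board.ly"],
                    "Positive", "Described as easy to set up, listed first")]
print(baseline_csv(rows))
```

The `csv` writer quotes any field that contains a comma, so free-text context notes survive the round trip into a spreadsheet.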

Take screenshots of every response. AI models update their training data periodically, and these screenshots become your proof of visibility changes over time. Store them in organized folders by platform and date.
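A consistent folder layout makes screenshots easy to find when you compare runs months apart. This sketch assumes a `screenshots/<platform>/<date>/` structure and a short query ID for each query; both are illustrative conventions rather than requirements.

```python
from datetime import date
from pathlib import Path


def screenshot_path(root: Path, platform: str, day: date, query_id: str) -> Path:
    """Return <root>/<platform>/<YYYY-MM-DD>/<query_id>.png.

    Platform names are trimmed, lowercased, and have spaces replaced by
    hyphens, so "ChatGPT" and "chatgpt" land in the same folder.
    """
    folder = platform.strip().lower().replace(" ", "-")
    return root / folder / day.isoformat() / f"{query_id}.png"


print(screenshot_path(Path("screenshots"), "ChatGPT", date(2024, 5, 7), "q01"))
```

Call `path.parent.mkdir(parents=True, exist_ok=True)` on the returned path before saving a file there, so the dated folder exists.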

For each mention—or notable absence—document the specific language used. When ChatGPT describes your competitor as "industry-leading" but positions your brand as "emerging," that language difference matters. When Claude recommends three alternatives without mentioning you at all, note the exact query that triggered that response.

As you develop your baseline queries, understanding the broader principles of how to track AI recommendations will help you design query variations that capture different recommendation scenarios and competitive contexts.

Identifying Critical Visibility Gaps

Compare your results across platforms. You might discover that ChatGPT mentions your brand in certain contexts while Claude doesn't mention you at all. These platform-specific gaps likely reflect differences in training data and source material, and they suggest where to focus initial optimization efforts.
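Once results from all three platforms are in your sheet, this comparison can be automated. The sketch below assumes each result is a `(platform, query, mentioned)` tuple and returns, for each query, the platforms that missed your brand when at least one other platform mentioned it. Queries where no platform mentions you are a different kind of gap, so it leaves those out.

```python
from collections import defaultdict


def platform_gaps(results):
    """Find queries where some platforms mention the brand and others don't.

    `results` is an iterable of (platform, query, mentioned) tuples.
    Returns {query: sorted list of platforms that did not mention the brand}.
    """
    by_query = defaultdict(dict)
    for platform, query, mentioned in results:
        by_query[query][platform] = mentioned

    gaps = {}
    for query, per_platform in by_query.items():
        # Only platform-specific gaps: at least one platform did mention us.
        if any(per_platform.values()):
            missing = sorted(p for p, hit in per_platform.items() if not hit)
            if missing:
                gaps[query] = missing
    return gaps


results = [
    ("ChatGPT", "best PM tools", True),
    ("Claude", "best PM tools", False),
    ("Perplexity", "best PM tools", True),
    ("ChatGPT", "PM tools for remote teams", False),
    ("Claude", "PM tools for remote teams", False),
    ("Perplexity", "PM tools for remote teams", False),
]
print(platform_gaps(results))  # {'best PM tools': ['Claude']}
```

The remote-teams query is missing everywhere, which points to a category-wide visibility problem rather than a platform-specific one.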

Pay special attention to high-intent queries—the questions potential customers actually ask when evaluating solutions. If you're invisible in responses to "best [category] for [specific use case]" queries, you're losing qualified prospects at the research stage.

Document competitor positioning patterns. When AI models consistently recommend the same three competitors across different query types, they've achieved strong category association. Your goal is to understand what triggers those recommendations so you can replicate that visibility for your own brand.

This baseline assessment typically takes 2-3 hours for thorough coverage across all three platforms. The investment pays off immediately: you now have concrete data showing exactly where your brand appears, where it's missing, and where competitors are winning instead.

Essential Tools and Platform Access Setup

Before you can monitor your brand's AI visibility, you need the right infrastructure in place. Think of this as assembling your monitoring toolkit—without these foundational elements, you'll be flying blind or wasting time with inefficient manual processes.

The good news? You don't need expensive enterprise software to get started. Most of what you need is either free or available through standard business subscriptions you may already have.

Required Monitoring Infrastructure

Start with access to three widely used LLM platforms: ChatGPT, Claude, and Perplexity. Each operates with different training data and recommendation patterns, which means your brand visibility can vary dramatically across platforms.

For ChatGPT, a free account provides basic access, but ChatGPT Plus ($20/month) offers more reliable availability and access to more capable models, which often give more detailed and nuanced responses. Claude offers similar free and paid tiers through Claude Pro ($20/month). Perplexity's free tier limits how many advanced searches you can run each day, while Perplexity Pro ($20/month) raises those limits and adds advanced features.

While manual monitoring gives you full control over your research process, dedicated AI brand monitoring platforms can streamline setup and provide automated tracking that scales beyond manual methods.

Beyond platform access, you'll need a systematic way to document and track your findings. A simple spreadsheet works perfectly for most brands starting out. Create columns for date, platform, query used, mention status, context, sentiment, and any relevant notes. This becomes your monitoring database.

Browser organization matters more than you might think. Set up dedicated bookmark folders for each LLM platform and create quick-access shortcuts. You'll be cycling through these platforms regularly, and fumbling with URLs wastes time and breaks your monitoring rhythm.

Account Setup and Access Credentials

Create separate accounts for each platform using a dedicated email address if possible. This keeps your monitoring activities organized and prevents your personal usage from contaminating your brand research data.

For ChatGPT, sign up at chat.openai.com and consider upgrading to Plus if you plan to run frequent queries. The paid tier provides priority access during peak times and access to more capable models.

Claude requires an Anthropic account at claude.ai. The free tier works for initial baseline research, but Pro becomes essential once you're running regular monitoring cycles. Claude's longer context window makes it particularly valuable for analyzing detailed brand mentions.

Perplexity setup at perplexity.ai is straightforward, but pay attention to the daily query limits on free accounts. If you're monitoring multiple brand variations and competitor mentions, you'll hit these limits quickly. Pro access raises these limits substantially.

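Use a separate browser profile for monitoring, start each query in a fresh conversation, and turn off account-level personalization such as ChatGPT's memory feature where available. Incognito mode alone doesn't prevent personalization once you sign in, because chat history and memory are stored with your account. LLMs can adjust responses based on conversation history, and you want clean, unbiased data.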

Set aside 30-45 minutes for complete setup. This includes account creation, subscription activation if you're going with paid tiers, spreadsheet template creation, and browser bookmark organization. Rushing through setup creates friction later when you're trying to run consistent monitoring sessions.

Expanding Your Baseline Queries

Next, shift to category-based queries. Instead of asking about your brand directly, ask questions your potential customers would ask: "What are the best project management tools for remote teams?" or "Which CRM platforms work well for small businesses?" These queries reveal whether you appear in recommendation contexts—the scenarios that actually drive purchasing decisions.

Run at least five different query variations per platform. Ask about competitors by name and note whether your brand appears in comparison responses. Search for industry problems your product solves and see if the AI suggests your solution. The goal is understanding not just if you're mentioned, but when and why.

Creating Your Baseline Documentation

Create a simple spreadsheet with columns for platform, query type, date, mention status, and context. For each query, record whether your brand appeared, how it was positioned, and what competitors were mentioned alongside or instead of you.

Screenshot every response that mentions your brand or competitors. These visual records become invaluable for tracking changes over time and identifying patterns in how AI models discuss your market category.

Pay special attention to sentiment and positioning. When your brand is mentioned, is it recommended enthusiastically or mentioned as an afterthought? Are you positioned as a leader, an alternative, or a budget option? This qualitative assessment matters as much as simple mention counts.

Document competitive patterns too. Which competitors appear most frequently? In what contexts do they dominate recommendations? Understanding competitive visibility helps you identify content gaps and positioning opportunities that could improve your own AI presence.

This baseline becomes your benchmark for measuring future improvements. Without it, you're optimizing blind—unable to tell whether your efforts are actually moving the needle on AI visibility.

Step 2: Building Repeatable Query Protocols

Random, inconsistent queries produce random, inconsistent data. The difference between useful monitoring and wasted effort comes down to one thing: systematic query protocols that generate comparable results every single time.

Think of it like A/B testing. If you change multiple variables simultaneously, you can't identify what's driving results. The same principle applies to LLM monitoring—standardized queries let you track real visibility changes rather than variations caused by different question phrasing.

Designing Platform-Specific Query Sets

Each LLM platform can respond differently to the same query structure. ChatGPT and Claude handle conversational prompts naturally, while Perplexity, which answers from live web search, often responds best to search-style queries. Your protocol needs to account for these differences.

Start with three query categories for each platform: direct brand queries, category-based searches, and competitor comparison requests. For direct queries, use exact phrasing: "What do you know about [Your Brand]?" For category searches, frame it naturally: "What are the best [category] solutions for [specific use case]?" For comparisons, be explicit: "Compare [Your Brand] with [Competitor A] and [Competitor B]."

Effective query protocols require understanding not just what to ask, but how to systematically monitor AI model responses across different contexts, use cases, and time periods.

Create query variations that test different contexts. If you sell project management software, don't just ask "What are the best project management tools?" Test specific scenarios: "What project management software works best for remote teams?" or "Which project management tools integrate with Slack?" These variations reveal whether your brand appears in niche contexts or only broad category searches.

Document every query exactly as you'll use it. Create a spreadsheet with columns for platform, query text, query category, and expected response type. This becomes your monitoring script—copy and paste these exact queries during each monitoring session to ensure consistency.
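One way to make this monitoring script reproducible is to generate it from the three query templates in this step and give each query a stable ID derived from its exact wording, so results from different sessions and platforms line up. The `ProtocolQuery` type, the ID scheme, and the "expected response type" labels below are illustrative assumptions; "Acme PM", "TaskFlow", and "Board.ly" are hypothetical names.

```python
import csv
import hashlib
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtocolQuery:
    platform: str
    category: str   # "direct", "category", or "comparison"
    text: str
    expected: str   # expected response type, as free text

    @property
    def query_id(self) -> str:
        # Same wording -> same ID on every platform and in every session.
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:8]


def build_protocol(brand, competitors, category, use_case, platforms):
    if len(competitors) < 2:
        raise ValueError("the comparison template needs two competitors")
    templates = [
        ("direct", f"What do you know about {brand}?", "brand description"),
        ("category", f"What are the best {category} solutions for {use_case}?",
         "recommendation list"),
        ("comparison", f"Compare {brand} with {competitors[0]} and {competitors[1]}.",
         "side-by-side comparison"),
    ]
    return [ProtocolQuery(p, c, t, e) for p in platforms for c, t, e in templates]


def protocol_csv(queries):
    """Render the protocol as CSV with the columns described above plus an ID."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["query_id", "platform", "query_text",
                     "query_category", "expected_response_type"])
    for q in queries:
        writer.writerow([q.query_id, q.platform, q.text, q.category, q.expected])
    return buf.getvalue()


queries = build_protocol("Acme PM", ["TaskFlow", "Board.ly"],
                         "project management", "remote teams",
                         ["ChatGPT", "Claude", "Perplexity"])
print(protocol_csv(queries))
```

Deriving the ID from the text alone is a deliberate choice: if someone edits a query's wording, it gets a new ID, which keeps old and new phrasings from being silently mixed in trend data.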

Creating Sustainable Monitoring Schedules

Daily monitoring sounds thorough but leads to burnout and abandoned systems. LLM training data doesn't change that frequently, and neither should your monitoring cadence.

For most brands, weekly monitoring provides the right balance between staying informed and maintaining sustainability. Choose a specific day and time—Tuesday mornings work well because they avoid Monday chaos and Friday wind-down. Block 90 minutes on your calendar and treat it like any other critical business meeting.

Your monitoring session should follow a consistent sequence: ChatGPT queries first, then Claude, then Perplexity. Run through your complete query set for each platform before moving to the next. This assembly-line approach maintains focus and reduces the mental switching cost between platforms.

For brands in rapidly evolving industries or during active PR campaigns, increase frequency to twice weekly. For established brands in stable markets, monitoring every other week may suffice. The key is consistency: it's better to monitor every other week indefinitely than weekly for two months before abandoning the system.
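To put a cadence on the calendar, it helps to generate the actual session dates. This sketch uses Python's `date.weekday()` numbering (Monday is 0, so Tuesday is 1) and counts "every other week" from the week your schedule starts. The function name, the parameters, and the choice of Thursday as the second weekly session are illustrative assumptions.

```python
from datetime import date, timedelta

TUESDAY, THURSDAY = 1, 3  # date.weekday(): Monday == 0 ... Sunday == 6


def upcoming_sessions(start: date, weekdays: set[int], every_n_weeks: int,
                      count: int) -> list[date]:
    """Return the next `count` monitoring dates on or after `start`.

    every_n_weeks=1 means weekly; every_n_weeks=2 means every other week,
    counted from the week that contains `start`.
    """
    if not weekdays or every_n_weeks < 1:
        raise ValueError("need at least one weekday and an interval of 1+ weeks")
    week_zero = start - timedelta(days=start.weekday())  # Monday of the start week
    sessions = []
    day = start
    while len(sessions) < count:
        weeks_elapsed = (day - week_zero).days // 7
        if day.weekday() in weekdays and weeks_elapsed % every_n_weeks == 0:
            sessions.append(day)
        day += timedelta(days=1)
    return sessions


start = date(2024, 5, 6)  # a Monday
print(upcoming_sessions(start, {TUESDAY}, 1, 3))            # weekly
print(upcoming_sessions(start, {TUESDAY, THURSDAY}, 1, 4))  # twice weekly
print(upcoming_sessions(start, {TUESDAY}, 2, 3))            # every other week
```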

Set calendar reminders with your query spreadsheet linked directly in the event. When the reminder fires, you should be one click away from starting your monitoring session. Friction kills consistency, so eliminate every possible obstacle between intention and execution.

Step 3: Implementing Cross-Platform Tracking Systems

You've mapped your baseline and built your query protocols. Now comes the critical step: deploying active monitoring across all major platforms simultaneously while maintaining a unified view of your brand's AI presence.

The challenge isn't just monitoring each platform—it's creating a system that lets you see patterns, compare performance, and identify opportunities across the entire LLM landscape. When ChatGPT mentions you positively but Claude doesn't mention you at all, that's actionable intelligence. When Perplexity cites your content but positions a competitor first, you need to know immediately.

Platform-Specific Monitoring Deployment

Start with ChatGPT since it commands the largest user base. Use a dedicated browser profile or browser containers, signed in to your monitoring account, to keep monitoring sessions separate from personal use. This keeps your personal chat history from influencing responses and helps keep results consistent.

Run your complete query set, starting each query in a fresh conversation so earlier answers can't shape later ones, and name the chats consistently (for example, "Brand Monitoring - [Current Month]") so they're easy to find. Document every response in your tracking spreadsheet with timestamps, exact queries used, and full AI responses. For ChatGPT visibility monitoring strategies beyond basic setup, including conversation management and prompt optimization, platform-specific best practices can noticeably improve your monitoring accuracy.

Move to Claude next. Claude's analytical capabilities make it particularly valuable for understanding the reasoning behind recommendations. When Claude mentions your brand, it often explains why, giving you insight into what factors drive AI recommendations. Use the same query set, but pay special attention to how Claude structures its responses differently from ChatGPT.

Deploy Perplexity monitoring last. Perplexity cites the web sources behind each answer, so you can see which pages it drew on when it mentioned (or skipped) your brand. This makes it valuable for connecting your content strategy to your AI visibility. Perplexity AI brand tracking approaches that use this source transparency can surface competitive intelligence that is harder to get from platforms that don't show their sources.

Creating Unified Tracking Dashboards

Build a master spreadsheet with separate tabs for each platform but a unified summary dashboard. Your summary view should show: total mentions per platform, sentiment breakdown, competitive positioning, and week-over-week changes.

Structure your platform-specific tabs with these columns: Date, Query Used, Mention (Yes/No), Position (if listed with competitors), Sentiment (Positive/Neutral/Negative), Context Notes, and Screenshot Link. This standardization makes cross-platform analysis possible.
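If you export the platform tabs into rows, the summary view can be computed rather than tallied by hand. This sketch assumes each row is a dict with `date`, `platform`, `mention`, and `sentiment` keys (mirroring the columns above) and groups mentions by ISO week so it can report week-over-week changes per platform. The function names are illustrative.

```python
from collections import Counter, defaultdict
from datetime import date


def summarize(rows):
    """Tally brand mentions per ISO week and platform, plus overall sentiment.

    Each row is a dict with keys "date" (datetime.date), "platform",
    "mention" (bool), and "sentiment" ("Positive", "Neutral", "Negative").
    Sentiment is counted only for rows where the brand was mentioned.
    """
    mentions_by_week = defaultdict(Counter)  # (iso_year, iso_week) -> platform counts
    sentiment = Counter()
    for row in rows:
        if row["mention"]:
            iso_year, iso_week, _ = row["date"].isocalendar()
            mentions_by_week[(iso_year, iso_week)][row["platform"]] += 1
            sentiment[row["sentiment"]] += 1
    return mentions_by_week, sentiment


def week_over_week(mentions_by_week, this_week, last_week):
    """Change in mention count per platform between two (iso_year, iso_week) keys."""
    now = mentions_by_week.get(this_week, Counter())
    before = mentions_by_week.get(last_week, Counter())
    return {p: now[p] - before[p] for p in sorted(set(now) | set(before))}


rows = [
    {"date": date(2024, 5, 7), "platform": "ChatGPT", "mention": True, "sentiment": "Positive"},
    {"date": date(2024, 5, 7), "platform": "Claude", "mention": False, "sentiment": ""},
    {"date": date(2024, 5, 7), "platform": "Perplexity", "mention": True, "sentiment": "Neutral"},
    {"date": date(2024, 5, 14), "platform": "ChatGPT", "mention": True, "sentiment": "Positive"},
    {"date": date(2024, 5, 14), "platform": "Claude", "mention": True, "sentiment": "Negative"},
    {"date": date(2024, 5, 14), "platform": "Perplexity", "mention": False, "sentiment": ""},
]
by_week, sentiment = summarize(rows)
print(week_over_week(by_week, (2024, 20), (2024, 19)))  # {'ChatGPT': 0, 'Claude': 1, 'Perplexity': -1}
print(sentiment)
```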

Create conditional formatting rules that highlight significant changes—new mentions appearing, sentiment shifts, or competitive displacement. Your dashboard should let you spot trends at a glance without digging through raw data.
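The same change rules can be checked in code before you build formatting around them. This sketch compares two weeks of observations keyed by `(platform, query)` and flags new mentions, lost mentions, sentiment shifts, and drops in list position. Treating a position drop as a proxy for competitive displacement is an assumption, and the `Observation` type is illustrative.

```python
from typing import NamedTuple, Optional


class Observation(NamedTuple):
    mentioned: bool
    sentiment: str = ""             # "Positive", "Neutral", "Negative", or "" if absent
    position: Optional[int] = None  # rank among listed options, 1 = listed first


ABSENT = Observation(False)


def diff_weeks(previous, current):
    """Compare two weeks of {(platform, query): Observation} and list changes."""
    flags = []
    for platform, query in sorted(set(previous) | set(current)):
        before = previous.get((platform, query), ABSENT)
        after = current.get((platform, query), ABSENT)
        where = f"{platform} / {query}"
        if after.mentioned and not before.mentioned:
            flags.append(f"NEW MENTION: {where}")
        elif before.mentioned and not after.mentioned:
            flags.append(f"LOST MENTION: {where}")
        elif before.mentioned and after.mentioned:
            if before.sentiment != after.sentiment:
                flags.append(f"SENTIMENT {before.sentiment} -> {after.sentiment}: {where}")
            # A higher position number means the brand moved down the list.
            if (before.position is not None and after.position is not None
                    and after.position > before.position):
                flags.append(f"DISPLACED {before.position} -> {after.position}: {where}")
    return flags


prev = {("ChatGPT", "best PM tools"): Observation(True, "Positive", 1),
        ("Claude", "best PM tools"): Observation(False),
        ("Perplexity", "best PM tools"): Observation(True, "Neutral", 2)}
curr = {("ChatGPT", "best PM tools"): Observation(True, "Positive", 3),
        ("Claude", "best PM tools"): Observation(True, "Neutral", 4),
        ("Perplexity", "best PM tools"): Observation(True, "Negative", 2)}
for flag in diff_weeks(prev, curr):
    print(flag)
```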

Setting Up Automated Alert Systems

While full automation requires specialized tools, you can build basic alerts with spreadsheet formulas and conditional formatting that flag when mention counts drop below baseline, when negative sentiment appears, or when competitors gain mentions in categories where you previously dominated.

The key is defining meaningful thresholds. A single negative mention might not warrant immediate action, but three negative mentions in one week across different platforms signals a problem requiring investigation.
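Thresholds like these are easier to apply consistently once they are written down as rules. The sketch below encodes two of them: total mentions below your baseline, and at least three negative mentions in a week spread across more than one platform. Reading "across different platforms" as "at least two platforms" is an interpretation, and the `WeekStats` type and default values are illustrative; a competitor-gain check could be added the same way.

```python
from dataclasses import dataclass, field


@dataclass
class WeekStats:
    mentions: int  # total brand mentions across all platforms this week
    negative_by_platform: dict[str, int] = field(default_factory=dict)


def check_alerts(week: WeekStats, baseline_mentions: int,
                 negative_threshold: int = 3, min_platforms: int = 2) -> list[str]:
    alerts = []
    if week.mentions < baseline_mentions:
        alerts.append(f"Mentions below baseline: {week.mentions} < {baseline_mentions}")
    total_negative = sum(week.negative_by_platform.values())
    platforms_hit = sum(1 for n in week.negative_by_platform.values() if n > 0)
    # Negatives clustered across several platforms are treated as a signal;
    # the same count on a single platform is left for routine review.
    if total_negative >= negative_threshold and platforms_hit >= min_platforms:
        alerts.append(f"{total_negative} negative mentions across {platforms_hit} platforms")
    return alerts


print(check_alerts(WeekStats(5, {"ChatGPT": 2, "Claude": 1}), baseline_mentions=8))
```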

Schedule your monitoring sessions consistently so that week-over-week comparisons reflect real changes rather than differences in timing.

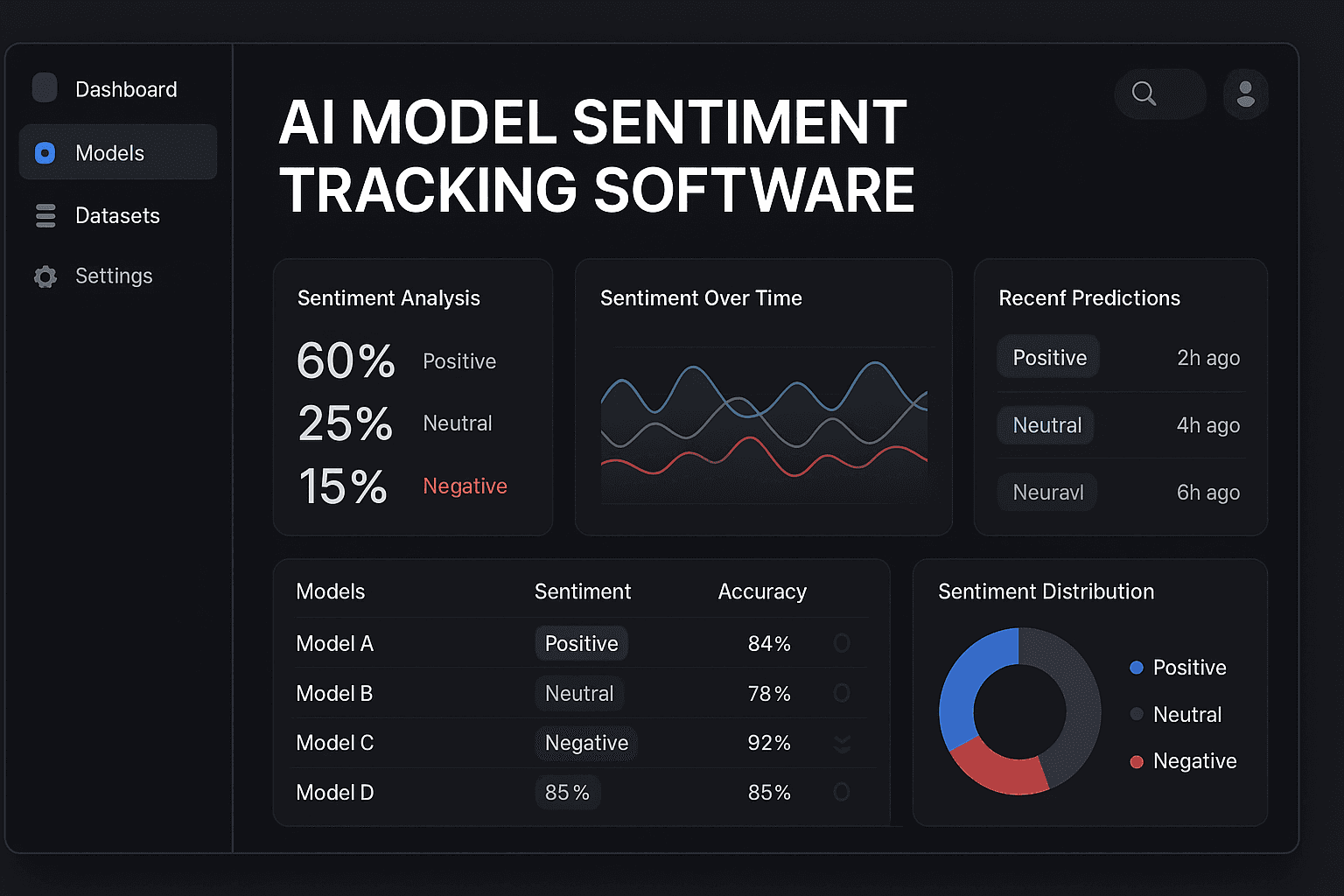

Step 4: Advanced Sentiment Analysis and Context Tracking

Counting mentions tells you whether your brand appears in AI conversations. Analyzing sentiment tells you whether those appearances help or hurt your positioning. This distinction matters more than most brands realize: a single negative mention in the right context can do more damage than being absent from ten conversations.

The challenge? LLMs don't provide sentiment scores or context labels. You need to develop systematic evaluation frameworks that turn qualitative responses into actionable intelligence about how your brand is actually being positioned.

Building Your Sentiment Scoring Framework

Start with a simple three-tier system: positive, neutral, and negative. But add nuance by scoring intensity on a scale of 1-5 within each category. A lukewarm positive mention ("Brand X is an option worth considering") scores differently than an enthusiastic recommendation ("Brand X consistently outperforms competitors in this category").

Create a scoring rubric based on specific language patterns. Positive indicators include words like "leading," "innovative," "recommended," and "excels." Negative signals appear in phrases like "limited features," "higher cost," or "may not be suitable for." Neutral mentions typically describe your brand factually without comparative language or value judgments.
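A keyword rubric like this can be applied mechanically as a first pass before human review. In the sketch below, the phrases come from this section, but the weights and the rule that maps the net score to a 1-5 intensity are assumptions you would tune against responses you've scored by hand. It also ignores negation, so "not recommended" still counts as positive.

```python
import re

# Phrases come from the rubric above; the weights are placeholders.
POSITIVE = {"worth considering": 1, "recommended": 2, "innovative": 2,
            "leading": 3, "excels": 3, "outperforms": 4}
NEGATIVE = {"may not be suitable for": 2, "higher cost": 2, "limited features": 3}


def _weighted_hits(text: str, lexicon: dict[str, int]) -> int:
    total = 0
    for phrase, weight in lexicon.items():
        # Stand-alone matches only: "leading" counts in "industry-leading"
        # but not inside "misleading".
        pattern = r"(?<!\w)" + re.escape(phrase) + r"(?!\w)"
        total += weight * len(re.findall(pattern, text, flags=re.IGNORECASE))
    return total


def score_mention(text: str) -> tuple[str, int]:
    """Return (polarity, intensity on a 1-5 scale) for one mention's text."""
    net = _weighted_hits(text, POSITIVE) - _weighted_hits(text, NEGATIVE)
    if net > 0:
        polarity = "Positive"
    elif net < 0:
        polarity = "Negative"
    else:
        polarity = "Neutral"
    # Clamp the size of the net score into the 1-5 intensity scale.
    return polarity, min(5, max(1, abs(net)))


print(score_mention("Acme PM is an option worth considering."))
print(score_mention("Acme PM consistently outperforms competitors and is industry-leading."))
```

The lukewarm and enthusiastic examples from this section land at opposite ends of the positive scale, which is the distinction the rubric is meant to capture.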

Document every mention with its full context: the question asked, your brand's position in the response, and any competitor comparisons. Understanding real-time brand perception in AI responses requires analyzing not just sentiment polarity, but contextual positioning, competitive comparisons, and recommendation confidence levels.

Pay special attention to conditional recommendations. When an LLM says "Brand X works well for enterprise clients but smaller businesses might prefer alternatives," you're seeing segmentation that reveals both strengths and limitations in how AI models understand your positioning.
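Conditional recommendations tend to share a shape: your brand and a contrast word in the same sentence. This sketch flags such sentences for manual review. The list of contrast words and the simple sentence splitter are assumptions; the splitter will stumble on abbreviations like "Inc.", and conditions phrased without a contrast word will be missed.

```python
import re

# Words that often introduce a condition or limitation; extend as needed.
CONTRAST_MARKERS = ("but", "however", "although", "though", "whereas", "unless")


def conditional_mentions(response: str, brand: str) -> list[str]:
    """Return sentences that mention `brand` together with a contrast word."""
    # Naive splitter: breaks after ., !, or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", response.strip())
    brand_re = re.compile(r"(?<!\w)" + re.escape(brand) + r"(?!\w)", re.IGNORECASE)
    marker_re = re.compile(r"\b(?:" + "|".join(CONTRAST_MARKERS) + r")\b", re.IGNORECASE)
    return [s for s in sentences if brand_re.search(s) and marker_re.search(s)]


# Hypothetical response text for illustration.
resp = ("Acme PM works well for enterprise clients, but smaller businesses might "
        "prefer alternatives. TaskFlow is popular with startups. "
        "Acme PM also integrates with Slack.")
print(conditional_mentions(resp, "Acme PM"))
```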

Identifying Mention Triggers and Patterns

The questions that generate brand mentions reveal critical insights about your perceived authority and relevance. Track which query types consistently produce mentions versus which leave you invisible.

Create trigger categories: direct brand queries, category searches ("best project management software"), use case questions ("tools for remote team collaboration"), and comparison requests ("alternatives to [competitor]"). Your mention rate should be highest for direct queries and strong for relevant category searches.
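Mention rate per trigger category is a simple ratio: responses that mention you divided by responses run in that category. The sketch assumes each logged result is a `(trigger_category, mentioned)` pair using the four categories above, and it flags categories under a cutoff. The 0.5 default is an arbitrary placeholder, not a benchmark.

```python
from collections import defaultdict


def mention_rates(records):
    """records: iterable of (trigger_category, mentioned) pairs."""
    runs = defaultdict(int)
    hits = defaultdict(int)
    for trigger, mentioned in records:
        runs[trigger] += 1
        hits[trigger] += int(mentioned)
    return {trigger: hits[trigger] / runs[trigger] for trigger in runs}


def weak_triggers(rates, cutoff=0.5):
    """Categories whose mention rate falls below `cutoff`, weakest first."""
    return sorted((t for t, r in rates.items() if r < cutoff), key=rates.get)


records = [("direct", True), ("direct", True),
           ("category", True), ("category", False),
           ("use_case", False), ("use_case", False),
           ("comparison", True), ("comparison", False)]
rates = mention_rates(records)
print(rates)                 # {'direct': 1.0, 'category': 0.5, 'use_case': 0.0, 'comparison': 0.5}
print(weak_triggers(rates))  # ['use_case']
```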

When mention patterns show gaps (appearing in category searches but not in use case questions, for example), you've identified content opportunities. Advanced sentiment analysis for AI recommendations can help reveal how the emotional context of your content influences whether AI models recommend your brand in positive, neutral, or negative contexts.

Look for seasonal patterns and trending topics that affect mention frequency. If your brand appears more often during specific times of year or in response to industry news, you may be seeing how training data recency and topical relevance influence AI visibility.

The most valuable insight? Understanding which competitor mentions correlate with your absence. When specific competitors consistently appear in contexts where you don't, you've found your most critical visibility gaps—and your highest-priority optimization targets.
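Finding the competitors that show up when you don't is a counting exercise over your log. This sketch assumes each response is recorded as a `(brand_mentioned, competitors)` pair and counts each competitor at most once per response; the competitor names are hypothetical.

```python
from collections import Counter


def competitors_when_absent(responses):
    """Count competitors in responses that did NOT mention your brand.

    `responses` is an iterable of (brand_mentioned, competitors) pairs.
    Returns (competitor, count) pairs, most frequent first.
    """
    counts = Counter()
    for brand_mentioned, competitors in responses:
        if not brand_mentioned:
            # dict.fromkeys drops duplicates while keeping first-seen order.
            counts.update(list(dict.fromkeys(competitors)))
    return counts.most_common()


responses = [
    (False, ["TaskFlow", "Board.ly"]),
    (False, ["TaskFlow", "TaskFlow"]),
    (True, ["TaskFlow", "PlanRight"]),
    (False, ["PlanRight", "TaskFlow"]),
]
print(competitors_when_absent(responses))
```

Restricting the count to responses where you're absent is what separates "competitors that crowd you out" from competitors that simply appear everywhere.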

Putting It All Together

You now have a complete system for monitoring your brand's presence across every major AI platform. From establishing your baseline footprint to implementing repeatable query protocols, cross-platform tracking, and sentiment analysis—you've built the infrastructure that reveals exactly where your brand appears in AI-powered conversations.

The key is consistency. Your monitoring system only delivers value when you maintain regular query schedules, document findings systematically, and act on the insights you uncover. Start with weekly monitoring across ChatGPT, Claude, and Perplexity. As patterns emerge, you'll identify the query types that matter most for your industry and the contexts where your brand needs stronger visibility.

Remember that AI visibility isn't static. Training data updates, algorithm changes, and competitive content strategies constantly shift the landscape. The brands that win are those that monitor continuously, adapt quickly, and optimize their content based on real mention data rather than assumptions.

Your next step is simple: run your first complete monitoring cycle this week. Document your baseline, set up your tracking spreadsheet, and establish your query schedule. Within 30 days, you'll have trend data that reveals whether your visibility is improving, declining, or stagnating—and more importantly, you'll understand why.

Ready to automate this entire process and get deeper insights without the manual work? Start tracking your AI visibility today with Sight AI's automated monitoring platform that handles the heavy lifting while you focus on optimization strategy.