As large language models become central to business operations—from customer support chatbots to content generation pipelines—monitoring their performance, costs, and outputs has shifted from optional to essential. Without proper observability, teams face unpredictable API costs, degraded response quality, and blind spots in how AI represents their brand.

This guide evaluates the leading LLM monitoring tools available today, covering solutions for everything from basic usage tracking to comprehensive AI visibility monitoring. Whether you're managing internal LLM deployments or tracking how external AI models mention your brand, you'll find a tool that fits your monitoring needs.

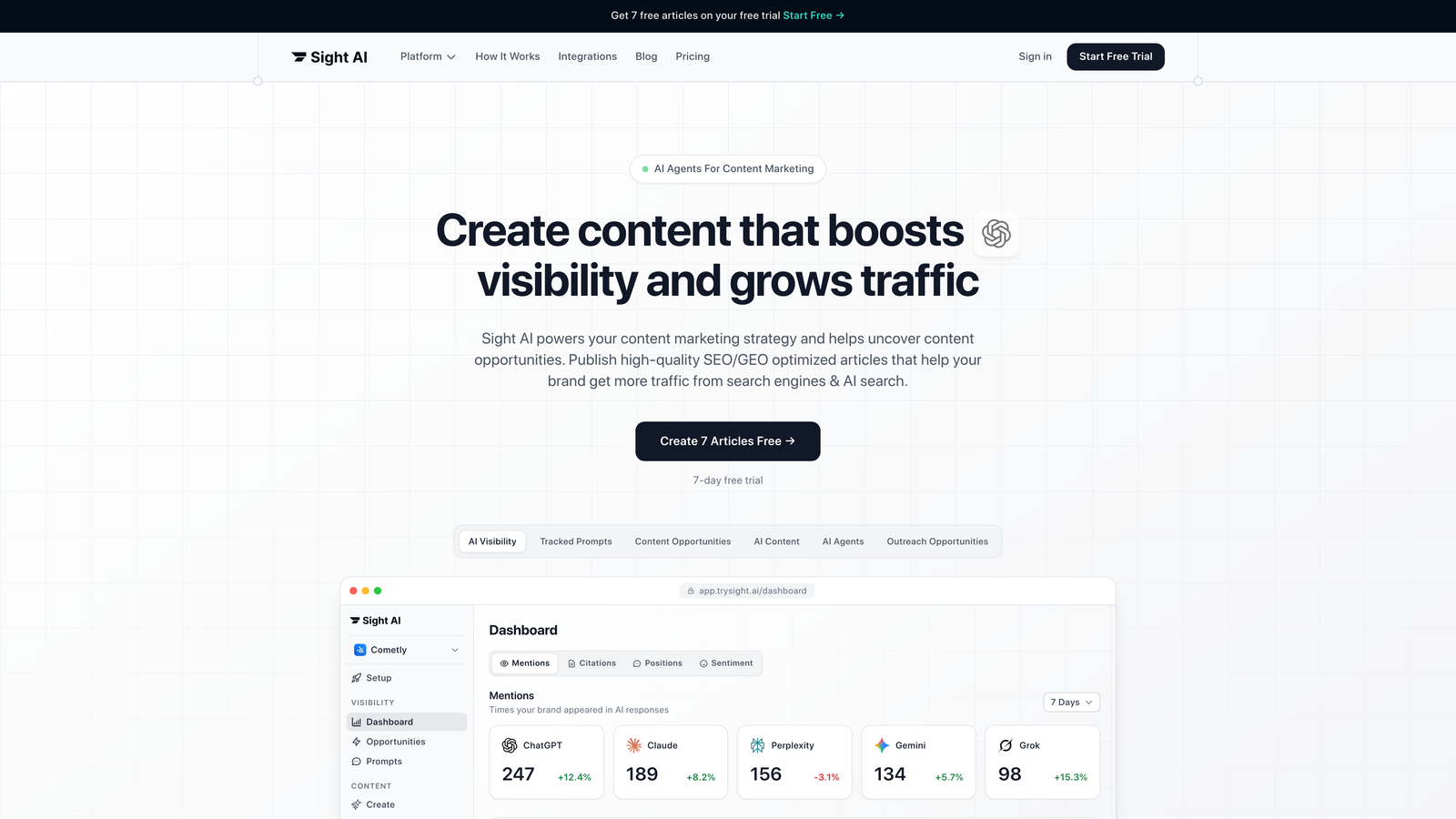

1. Sight AI

Best for: Tracking brand mentions and recommendations across major AI platforms

Sight AI is an AI visibility monitoring platform that tracks how brands are mentioned and recommended across ChatGPT, Claude, Perplexity, and other major AI models.

Where This Tool Shines

While most LLM monitoring tools focus on technical metrics for your own deployments, Sight AI addresses a different critical need: understanding how external AI models talk about your brand. When someone asks ChatGPT for product recommendations in your category, does your brand appear? What's the sentiment? Which prompts trigger mentions?

This platform fills a gap that technical observability tools simply don't address. You're not monitoring your own API calls—you're monitoring the AI ecosystem's perception and recommendation patterns for your brand.

Key Features

Brand Mention Tracking: Monitors your brand across 6+ AI platforms including ChatGPT, Claude, Perplexity, and emerging AI search engines.

AI Visibility Score: Quantifies your brand's presence with sentiment analysis to understand positive, neutral, or negative mentions.

Prompt Intelligence: Reveals exactly what queries and contexts trigger AI models to mention or recommend your brand.

Competitive Benchmarking: Tracks how competitors appear in AI recommendations alongside your brand for strategic positioning.

Content Opportunities: Identifies gaps where improved content could increase your brand's AI visibility and recommendation frequency.
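
How Sight AI computes these metrics is not described here. Purely as an illustration of the underlying idea, the sketch below measures how often a brand name appears in a set of AI responses you have collected yourself. The function `mention_rate`, the brand names, and the sample responses are all hypothetical.

```python
import re

def mention_rate(responses: list[str], brand: str) -> float:
    """Fraction of responses that mention `brand` as a whole phrase (case-insensitive)."""
    if not responses:
        return 0.0
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)

# Hypothetical responses collected for the prompt "best project management tools"
sample_responses = [
    "Popular options include Acme Boards and TaskPilot.",
    "Many teams like TaskPilot for its simple interface.",
    "Consider acme boards if you need Gantt charts.",
    "Spreadsheets are often enough for small teams.",
]

acme_rate = mention_rate(sample_responses, "Acme Boards")
taskpilot_rate = mention_rate(sample_responses, "TaskPilot")
print(f"Acme Boards: {acme_rate:.0%}, TaskPilot: {taskpilot_rate:.0%}")
```

A simple rate like this says nothing about sentiment or ranking within a response, which is where a dedicated platform is meant to add value.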

Best For

Marketing teams and brands concerned with AI-driven discovery and recommendations. Particularly valuable for companies in competitive categories where AI chatbots increasingly influence purchase decisions and research behavior.

Pricing

Contact for pricing based on monitoring scope and brand tracking requirements.

2. Langfuse

Best for: Open-source LLM observability with self-hosting options

Langfuse is an open-source LLM engineering platform providing observability, analytics, and prompt management for LLM applications.

Where This Tool Shines

Langfuse appeals to teams that value transparency and control. The open-source foundation means you can inspect every aspect of how monitoring works, customize it to your needs, and deploy it entirely within your infrastructure if compliance requires it.

The platform excels at detailed request tracing that helps developers understand exactly what's happening inside complex LLM chains. When a response goes wrong, you can trace back through every step to identify the issue.
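
To make the idea of step-by-step tracing concrete, here is a minimal sketch of nested spans recorded around the stages of an LLM chain. It does not use the Langfuse SDK; the `Trace` class, span names, and stubbed outputs are hypothetical stand-ins.

```python
import time
from contextlib import contextmanager

class Trace:
    """Collects nested spans so a bad response can be traced back step by step."""

    def __init__(self) -> None:
        self.spans: list[dict] = []    # finished spans, in completion order
        self._stack: list[dict] = []   # spans that are currently open

    @contextmanager
    def span(self, name: str, **metadata):
        record = {
            "name": name,
            "parent": self._stack[-1]["name"] if self._stack else None,
            "metadata": metadata,
            "error": None,
        }
        self._stack.append(record)
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record["error"] = repr(exc)  # keep the failure attached to the step
            raise
        finally:
            record["duration_s"] = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(record)

trace = Trace()
with trace.span("answer_question", user_id="u-123"):
    with trace.span("retrieve_documents", top_k=3) as s:
        s["output"] = ["doc-1", "doc-2", "doc-3"]  # stand-in for a retriever
    with trace.span("llm_call", model="example-model") as s:
        s["output"] = "stubbed completion"         # stand-in for a real LLM call

for s in trace.spans:
    print(f'{s["name"]} (parent={s["parent"]}) took {s["duration_s"]:.6f}s')
```

Because each span records its parent, inputs, and any error, a failure in one step can be located without rerunning the whole chain.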

Key Features

Request Tracing: Detailed logs of every LLM call with full context, making debugging significantly easier than guessing from error messages.

Prompt Management: Version control for prompts with A/B testing capabilities to optimize outputs systematically.

Self-Hosted Deployment: Run entirely on your infrastructure for maximum data control and compliance with strict security policies.

Provider Integration: Works with OpenAI, Anthropic, Cohere, and other major LLM providers through a unified interface.

Cost Analytics: Tracks token usage and associated costs across different models and application components.
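
The cost analytics described above come down to multiplying token counts by per-token prices and grouping the result. The sketch below shows that arithmetic under stated assumptions: the model names and prices are invented for illustration, and real prices vary by provider and change over time.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens (not real provider pricing).
PRICES_PER_MILLION = {
    "model-small": {"input": 0.50, "output": 1.50},
    "model-large": {"input": 5.00, "output": 15.00},
}

def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call in USD, given token counts and the price table above."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000

def cost_by_component(calls: list[dict]) -> dict[str, float]:
    """Sum call costs per application component."""
    totals: dict[str, float] = defaultdict(float)
    for call in calls:
        totals[call["component"]] += call_cost(
            call["model"], call["input_tokens"], call["output_tokens"]
        )
    return dict(totals)

calls = [
    {"component": "summarizer", "model": "model-small", "input_tokens": 2_000_000, "output_tokens": 1_000_000},
    {"component": "chat", "model": "model-large", "input_tokens": 1_000_000, "output_tokens": 1_000_000},
    {"component": "chat", "model": "model-small", "input_tokens": 4_000_000, "output_tokens": 0},
]

costs = cost_by_component(calls)
for component, usd in sorted(costs.items()):
    print(f"{component}: ${usd:.2f}")
```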

Best For

Engineering teams building LLM applications who want full control over their monitoring infrastructure, especially those with strict data governance requirements or technical teams comfortable with open-source tooling.

Pricing

Free tier available for self-hosting; managed cloud plans start at $59/month with additional usage-based fees.

3. Helicone

Best for: Quick integration with focus on cost optimization

Helicone is an LLM observability platform focused on cost optimization, usage analytics, and request logging with minimal integration effort.

Where This Tool Shines

Helicone's one-line proxy integration is genuinely impressive. You change your API endpoint, and suddenly you have comprehensive logging without touching application code. For teams moving fast or dealing with legacy systems, this simplicity is valuable.

The cost tracking dashboards make it immediately clear which parts of your application are burning through tokens. When you're dealing with unpredictable LLM costs that can spike unexpectedly, this visibility becomes essential for budget management.
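
In practice, a proxy integration of this kind amounts to swapping the base URL and adding one authentication header. The sketch below builds the settings for an OpenAI-compatible HTTP client without making any network call. The proxy URL and the `Helicone-Auth` header name reflect our understanding of Helicone's OpenAI integration and should be treated as assumptions to confirm against the current Helicone documentation; the `client_settings` helper and placeholder keys are hypothetical.

```python
import os

DIRECT_BASE_URL = "https://api.openai.com/v1"
# Assumption: proxy URL and header name as we understand Helicone's OpenAI
# integration; verify both against the vendor documentation before use.
PROXY_BASE_URL = "https://oai.helicone.ai/v1"

def client_settings(use_proxy: bool) -> dict:
    """Return the base URL and headers for an OpenAI-compatible HTTP client."""
    headers = {
        "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', 'sk-placeholder')}",
    }
    if not use_proxy:
        return {"base_url": DIRECT_BASE_URL, "headers": headers}
    # The proxy needs its own credential so it can attribute logged requests.
    headers["Helicone-Auth"] = f"Bearer {os.environ.get('HELICONE_API_KEY', 'hk-placeholder')}"
    return {"base_url": PROXY_BASE_URL, "headers": headers}

direct = client_settings(use_proxy=False)
proxied = client_settings(use_proxy=True)
print(direct["base_url"], "->", proxied["base_url"])
```

The request body and response handling stay the same; only the destination and one header differ, which is why this style of integration suits legacy systems.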

Key Features

Proxy Integration: Add monitoring by routing requests through Helicone's proxy, with no SDK installation and no application changes beyond the endpoint configuration.

Real-Time Dashboards: Live cost tracking with breakdowns by model, user, feature, or any custom dimension you define.

Response Caching: Automatically caches identical requests to reduce costs and improve latency for repeated queries.

User Analytics: Track usage patterns at the user level to identify power users or abuse patterns.

Budget Alerts: Set spending thresholds with automatic alerts when costs exceed expected ranges.
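
The response caching listed above can be pictured as a lookup keyed on the exact request. The following is a minimal sketch of that technique, not Helicone's implementation; `ExactMatchCache` and `fake_llm` are hypothetical names, and the stubbed model simply echoes its input.

```python
import hashlib
import json

class ExactMatchCache:
    """Returns a stored response when the model and messages are byte-for-byte identical."""

    def __init__(self, llm_call):
        self._llm_call = llm_call
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, messages: list[dict]) -> str:
        # Serialize deterministically so identical requests hash to the same key.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, model: str, messages: list[dict]) -> str:
        key = self._key(model, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._llm_call(model, messages)
        self._store[key] = response
        return response

def fake_llm(model: str, messages: list[dict]) -> str:
    """Stand-in for a paid API call."""
    return f"echo: {messages[-1]['content']}"

cache = ExactMatchCache(fake_llm)
question = [{"role": "user", "content": "What is observability?"}]
first = cache.complete("example-model", question)
second = cache.complete("example-model", question)  # served from the cache
third = cache.complete("example-model", [{"role": "user", "content": "what is observability?"}])
print(f"hits={cache.hits} misses={cache.misses}")
```

Note that the third request differs only in capitalization and still misses, which is the main limitation of exact-match caching.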

Best For

Teams prioritizing rapid deployment and cost control, particularly startups or projects where LLM expenses are a significant concern and integration complexity needs to stay minimal.

Pricing

Free tier includes 100K requests monthly; Pro plan starts at $20/month with higher limits and additional features.

4. Weights & Biases Prompts

Best for: Teams already using W&B for ML experiment tracking

Weights & Biases Prompts adds LLM monitoring and prompt management to the popular ML experiment tracking platform.

Where This Tool Shines

If you're already using Weights & Biases for traditional ML work, adding LLM monitoring feels natural. The same experiment tracking mindset applies—you're essentially treating prompt iterations as experiments with measurable outcomes.

The platform's strength lies in systematic prompt optimization. You can compare different prompt versions side by side with quantitative metrics, making it easier to move beyond subjective "this prompt feels better" decisions.
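
As a rough illustration of treating prompt versions as experiments, the sketch below scores two prompt templates against the same examples with a single metric. It does not use the Weights & Biases SDK; `stub_model`, the templates, and the exact-match metric are hypothetical choices made so the example runs without an API key.

```python
from statistics import mean

def exact_match(output: str, expected: str) -> float:
    """1.0 when the output equals the expected answer, ignoring case and whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0

def score_prompt(template: str, model, examples: list[dict]) -> float:
    """Average exact-match score of one prompt template over all examples."""
    scores = []
    for example in examples:
        output = model(template.format(question=example["question"]))
        scores.append(exact_match(output, example["expected"]))
    return mean(scores)

def stub_model(prompt: str) -> str:
    """Deterministic stand-in: answers tersely only when asked for one word."""
    city = "Paris" if "France" in prompt else "Tokyo"
    return city if "one word" in prompt else f"The capital is {city}."

examples = [
    {"question": "What is the capital of France?", "expected": "Paris"},
    {"question": "What is the capital of Japan?", "expected": "Tokyo"},
]
prompt_versions = {
    "v1": "Answer the question: {question}",
    "v2": "Answer in one word: {question}",
}

results = {name: score_prompt(t, stub_model, examples) for name, t in prompt_versions.items()}
best = max(results, key=results.get)
print(results, "best:", best)
```

With a shared dataset and metric, the choice between versions rests on a number rather than an impression, which is the point of the workflow.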

Key Features

Prompt Versioning: Track every prompt iteration with automatic version control and comparison tools for systematic optimization.

Experiment Integration: Connects LLM monitoring with broader ML experiments for teams working across traditional and generative AI.

Evaluation Pipelines: Automated testing frameworks to assess output quality across different prompts and model versions.

Collaboration Tools: Team features for sharing prompts, reviewing changes, and coordinating optimization efforts.

Artifact Management: Centralized storage for prompts, datasets, and evaluation results with full lineage tracking.

Best For

ML teams already invested in the Weights & Biases ecosystem who want unified observability across traditional and generative AI projects.

Pricing

Free for individual use; Team plans start at $50/user/month with enterprise options available for larger organizations.

5. Arize AI

Best for: Enterprise ML observability with LLM-specific capabilities

Arize AI is an enterprise ML observability platform with dedicated LLM monitoring capabilities for production deployments.

Where This Tool Shines

Arize brings enterprise-grade ML monitoring expertise to LLM applications. The platform understands that production AI systems require more than basic logging—they need sophisticated drift detection, performance degradation alerts, and root cause analysis.

The embedding drift detection is particularly valuable. LLM outputs can degrade subtly over time as models update or usage patterns shift. Arize helps you catch these issues before they impact user experience.
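
One simple way to picture embedding drift is to compare the average embedding of a baseline window with that of a recent window. The sketch below uses cosine distance between centroids. This is a generic technique chosen for illustration, not a description of how Arize computes drift, and the two-dimensional vectors and the threshold are hypothetical.

```python
import math

def centroid(vectors: list[list[float]]) -> list[float]:
    """Component-wise mean of a set of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; 0 means same direction, larger means more different."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def drift_score(baseline: list[list[float]], current: list[list[float]]) -> float:
    """Distance between the centroid of the baseline window and the current window."""
    return cosine_distance(centroid(baseline), centroid(current))

# Toy 2-D embeddings; production embeddings have hundreds of dimensions.
baseline = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.1]]
similar = [[0.95, 0.05], [1.0, 0.0]]
shifted = [[0.1, 1.0], [0.0, 0.9]]

DRIFT_THRESHOLD = 0.2  # hypothetical alerting threshold

for label, window in [("similar", similar), ("shifted", shifted)]:
    score = drift_score(baseline, window)
    status = "ALERT" if score > DRIFT_THRESHOLD else "ok"
    print(f"{label}: drift={score:.3f} {status}")
```

Centroid comparison misses changes in spread or shape of the distribution, so it is best read as a first signal rather than a complete drift test.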

Key Features

LLM Evaluation Metrics: Purpose-built metrics for assessing response quality, relevance, toxicity, and other LLM-specific concerns.

Embedding Drift Detection: Monitors how vector representations change over time to catch subtle performance degradation.

Automated Monitoring: Continuous performance tracking with intelligent alerting when metrics fall outside expected ranges.

Root Cause Analysis: Diagnostic tools that help identify why performance degraded and which factors contributed.

Enterprise Security: SOC 2 compliance, role-based access control, and audit logging for regulated industries.

Best For

Large organizations running mission-critical LLM applications in production who need enterprise-grade monitoring, compliance features, and sophisticated performance analysis capabilities.

Pricing

Free tier available for small-scale testing; enterprise pricing provided on request based on deployment scale and feature requirements.

6. Datadog LLM Observability

Best for: Unified monitoring with existing infrastructure observability

Datadog LLM Observability adds LLM monitoring capabilities to Datadog's comprehensive infrastructure monitoring platform.

Where This Tool Shines

If you're already using Datadog for infrastructure monitoring, adding LLM observability creates a unified view of your entire stack. You can correlate LLM performance with database latency, API gateway errors, or any other infrastructure metric in the same dashboard.

This integration is powerful when debugging complex issues. Is your LLM responding slowly because the model is slow, or because your database is struggling? With everything in one platform, answering these questions becomes straightforward.
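
The "model or database?" question can be answered once the spans of a request sit in one place. The sketch below sums span durations per component and reports each component's share of the total. It does not call any Datadog API; the span records are hypothetical and assumed to be sequential and non-overlapping.

```python
def latency_breakdown(spans: list[dict]) -> dict[str, float]:
    """Share of total request time spent in each component (values sum to 1.0)."""
    totals: dict[str, float] = {}
    for span in spans:
        totals[span["component"]] = totals.get(span["component"], 0.0) + span["duration_ms"]
    total = sum(totals.values())
    if total == 0:
        return {name: 0.0 for name in totals}
    return {name: ms / total for name, ms in totals.items()}

# Hypothetical spans from one slow request.
request_spans = [
    {"component": "api_gateway", "duration_ms": 200.0},
    {"component": "database", "duration_ms": 900.0},
    {"component": "llm", "duration_ms": 600.0},
    {"component": "database", "duration_ms": 300.0},
]

shares = latency_breakdown(request_spans)
slowest = max(shares, key=shares.get)
for name, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{name}: {share:.0%}")
print("largest contributor:", slowest)
```

In this invented request the database accounts for most of the latency, so tuning the prompt or switching models would not have helped.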

Key Features

Unified Monitoring: LLM metrics alongside infrastructure, application, and network monitoring in a single platform.

Token Usage Tracking: Detailed cost analytics with breakdowns by service, endpoint, or custom tags you define.

Performance Metrics: Latency, error rates, and throughput monitoring with correlation to infrastructure health.

Custom Dashboards: Flexible visualization tools that let you combine LLM metrics with any other monitored data.

APM Integration: Full-stack tracing that connects LLM calls to broader application performance data.

Best For

Organizations already using Datadog for infrastructure monitoring who want to add LLM observability without introducing another platform or maintaining multiple monitoring tools.

Pricing

LLM Observability included with APM subscriptions; pricing starts at $31/host/month with usage-based components for trace ingestion.

7. Portkey

Best for: Multi-provider LLM applications with routing and fallbacks

Portkey is an LLM gateway platform combining routing, caching, and observability for applications using multiple AI providers.

Where This Tool Shines

Portkey excels when you're using multiple LLM providers and need intelligent routing between them. The automatic fallback capabilities mean if OpenAI is down, requests seamlessly route to Anthropic without manual intervention or code changes.

The response caching is smart enough to recognize semantically similar requests, not just exact matches. This can significantly reduce costs for applications with common query patterns.
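
Semantic caching differs from exact-match caching in that it compares meaning rather than bytes. The sketch below shows the mechanism with a deliberately crude word-count "embedding" so that it runs without any model. It is not Portkey's implementation; `SemanticCache`, the similarity threshold, and the sample prompts are hypothetical, and a real system would use a learned embedding model.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. Real systems use learned embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    """Returns a cached response when a new prompt is similar enough to a stored one."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self._entries: list[tuple[Counter, str]] = []

    def put(self, prompt: str, response: str) -> None:
        self._entries.append((embed(prompt), response))

    def get(self, prompt: str):
        query = embed(prompt)
        best = max(self._entries, key=lambda entry: cosine_similarity(query, entry[0]), default=None)
        if best is not None and cosine_similarity(query, best[0]) >= self.threshold:
            return best[1]
        return None  # caller should fall through to the real LLM call

semantic_cache = SemanticCache(threshold=0.8)
semantic_cache.put("How do I reset my password?", "Use the 'Forgot password' link.")

hit = semantic_cache.get("How can I reset my password?")   # similar wording
miss = semantic_cache.get("What are your opening hours?")  # unrelated question
print("hit:", hit)
print("miss:", miss)
```

The threshold is the key design choice: set it too low and users receive answers to questions they did not ask, too high and the cache rarely helps.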

Key Features

Multi-Provider Routing: Intelligent load balancing across OpenAI, Anthropic, Cohere, and other providers based on cost, latency, or custom rules.

Automatic Fallbacks: Seamless failover to backup providers when primary services experience outages or rate limits.

Response Caching: Semantic caching that recognizes similar requests to reduce costs and improve response times.

Request Analytics: Comprehensive logging and metrics across all providers in a unified interface.

Virtual Keys: Budget management with spending limits per key, user, or feature to prevent cost overruns.
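
The automatic fallback behaviour in the list above follows a simple pattern: try providers in priority order and return the first successful response. Here is a minimal sketch of that pattern, independent of Portkey's gateway; `ProviderError`, `call_with_fallback`, and the two stub providers are hypothetical.

```python
class ProviderError(Exception):
    """Raised by a provider callable when a request fails (outage, rate limit, ...)."""

def call_with_fallback(prompt: str, providers: list[tuple]) -> tuple[str, str]:
    """Try each (name, callable) in order; return (name, response) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # remember why this provider was skipped
    raise ProviderError("all providers failed: " + "; ".join(errors))

def primary(prompt: str) -> str:
    raise ProviderError("503 service unavailable")  # simulated outage

def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"

used, answer = call_with_fallback(
    "Summarize this ticket",
    [("primary", primary), ("backup", backup)],
)
print(f"served by {used}: {answer}")
```

A gateway applies the same logic outside the application, which is why no code change is needed when a provider goes down.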

Best For

Teams running applications across multiple LLM providers that need reliability through redundancy, cost optimization through intelligent routing, and simplified management of multiple API keys.

Pricing

Free tier includes 10K requests monthly; Growth plan starts at $49/month with higher limits and advanced routing features.

8. Traceloop (OpenLLMetry)

Best for: Vendor-agnostic observability using OpenTelemetry standards

Traceloop provides open-source LLM instrumentation built on OpenTelemetry standards for vendor-agnostic observability.

Where This Tool Shines

Traceloop's commitment to OpenTelemetry standards means your instrumentation isn't locked to a specific vendor. You can send traces to any OpenTelemetry-compatible backend—Jaeger, Zipkin, your own custom solution, or commercial platforms.

The automatic instrumentation for popular LLM frameworks saves significant development time. Instead of manually logging every LLM call, the SDK automatically captures relevant data across LangChain, LlamaIndex, and other common tools.
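
Auto-instrumentation generally works by wrapping a library's methods so that every call emits a span, which is then handed to whichever exporters are configured. The sketch below demonstrates that mechanism with the standard library only. It is not the OpenTelemetry or OpenLLMetry API; `FakeLLMClient`, `instrument`, and the list-based exporters are hypothetical.

```python
import functools
import time

class FakeLLMClient:
    """Stand-in for a framework or SDK client (hypothetical)."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()

def instrument(cls, method_name: str, exporters: list) -> None:
    """Replace cls.method_name with a wrapper that reports each call to every exporter."""
    original = getattr(cls, method_name)

    @functools.wraps(original)
    def wrapper(self, *args, **kwargs):
        start = time.perf_counter()
        result = original(self, *args, **kwargs)
        span = {
            "name": f"{cls.__name__}.{method_name}",
            "duration_s": time.perf_counter() - start,
            "args": args,
        }
        for export in exporters:
            export(span)  # the same span goes to every configured backend
        return result

    setattr(cls, method_name, wrapper)

# Two in-memory "backends"; real exporters would send spans over the network.
backend_a: list[dict] = []
backend_b: list[dict] = []
instrument(FakeLLMClient, "complete", exporters=[backend_a.append, backend_b.append])

reply = FakeLLMClient().complete("hello")  # application code is unchanged
print(reply, backend_a[0]["name"], len(backend_b))
```

Swapping backends means changing the exporter list, not the application code, which is the practical meaning of vendor-agnostic instrumentation.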

Key Features

OpenTelemetry Native: Uses industry-standard instrumentation protocols that work with any compatible observability backend.

Existing Backend Support: Send traces to your current observability tools rather than adopting yet another platform.

Framework Auto-Instrumentation: Automatic tracing for LangChain, LlamaIndex, and other popular LLM frameworks without manual logging.

Vendor Agnostic: Avoid lock-in with a data format that works across different monitoring solutions.

Open-Source SDK: Full transparency into how instrumentation works with community contributions and customization options.

Best For

Teams committed to open standards who want flexibility in choosing observability backends, or organizations already invested in OpenTelemetry infrastructure.

Pricing

The open-source SDK is free; a managed platform with additional features is available, with pricing provided on request.

9. LangSmith

Best for: Debugging and testing LangChain applications

LangSmith is a developer platform for debugging, testing, and monitoring LLM applications built with LangChain.

Where This Tool Shines

If you're building with LangChain, LangSmith offers the tightest integration possible. The platform understands LangChain's chains, agents, and tools natively, making debugging far more intuitive than generic logging solutions.

The dataset management for testing is particularly valuable. You can build test suites for your LLM applications, run evaluations automatically, and catch regressions before they reach production.
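
Catching regressions with a test dataset can be sketched as running two versions of an application over the same cases and comparing pass rates. The example below does not use the LangSmith API; the dataset, the `contains_expected` evaluator, and both stub applications are hypothetical.

```python
def contains_expected(output: str, case: dict) -> bool:
    """Passes when the output contains the required phrase (case-insensitive)."""
    return case["must_contain"].lower() in output.lower()

def pass_rate(app, dataset: list[dict], evaluator) -> float:
    """Fraction of dataset cases for which the app's output passes the evaluator."""
    passed = sum(1 for case in dataset if evaluator(app(case["input"]), case))
    return passed / len(dataset)

dataset = [
    {"input": "What is your refund window?", "must_contain": "30 days"},
    {"input": "Do you ship internationally?", "must_contain": "yes"},
    {"input": "How do I contact support?", "must_contain": "support@example.com"},
]

def app_v1(question: str) -> str:
    """Stand-in for the current production application."""
    answers = {
        "What is your refund window?": "Refunds are accepted within 30 days.",
        "Do you ship internationally?": "Yes, we ship to most countries.",
        "How do I contact support?": "Email support@example.com.",
    }
    return answers[question]

def app_v2(question: str) -> str:
    """Stand-in for a candidate release with a simulated regression."""
    if "refund" in question:
        return "Refunds are available on request."  # the 30-day window was dropped
    return app_v1(question)

baseline_rate = pass_rate(app_v1, dataset, contains_expected)
candidate_rate = pass_rate(app_v2, dataset, contains_expected)
regressed = candidate_rate < baseline_rate
print(f"baseline={baseline_rate:.2f} candidate={candidate_rate:.2f} regressed={regressed}")
```

A check like this can run in CI so that a prompt or model change that lowers the pass rate is blocked before release.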

Key Features

Native LangChain Integration: Purpose-built for LangChain with deep understanding of chains, agents, and framework-specific concepts.

Run Tracing: Detailed execution traces showing exactly how data flows through complex LangChain pipelines.

Dataset Management: Organize test cases and evaluation data for systematic testing of LLM application behavior.

Evaluation Frameworks: Built-in tools for assessing output quality with custom metrics and automated testing.

Prompt Hub: Centralized repository for sharing and discovering prompts across teams and projects.

Best For

Development teams building LLM applications with LangChain who need specialized debugging and testing tools that understand the framework's architecture.

Pricing

Free tier includes 5K traces monthly; Plus plan starts at $39/month with higher trace limits and additional collaboration features.

Making the Right Choice

The right LLM monitoring tool depends on what you're actually trying to monitor. The market has split into two distinct categories: tools that track how external AI models represent your brand, and tools that observe your own LLM deployments.

For brand visibility tracking across external AI models like ChatGPT and Perplexity, Sight AI offers purpose-built capabilities that technical observability tools simply don't address. If your concern is how AI chatbots mention and recommend your brand, you need visibility into those external platforms—not just your own API calls.

For internal LLM deployments, your choice depends on several factors. Open-source advocates will appreciate Langfuse's transparency and self-hosting options, while teams prioritizing rapid deployment should consider Helicone's one-line integration. Enterprise organizations running mission-critical applications will find Arize's sophisticated monitoring and compliance features worth the investment.

LangChain users should seriously evaluate LangSmith for its native framework integration. If you're already using Datadog for infrastructure monitoring, adding LLM observability through the same platform creates valuable unified visibility. Teams working with multiple LLM providers benefit from Portkey's intelligent routing and fallback capabilities.

Many organizations benefit from combining tools. You might use technical observability like Langfuse for your own LLM implementations while separately tracking brand mentions across external AI platforms with Sight AI. These aren't competing needs—they're complementary aspects of comprehensive AI monitoring.

Consider your specific requirements: Do you need self-hosting for compliance? Is cost optimization your primary concern? Are you building with specific frameworks like LangChain? Do you need to track how external AI models represent your brand? Answer these questions before choosing, because the "best" tool varies significantly based on your actual monitoring needs.

Start tracking your AI visibility today and see exactly where your brand appears across top AI platforms. Stop guessing how AI models like ChatGPT and Claude talk about your brand—get visibility into every mention, track content opportunities, and automate your path to organic traffic growth.