Your brand could be invisible to millions of potential customers right now, and you'd have no idea. When someone asks ChatGPT about solutions in your industry, does your company even get mentioned? If Claude recommends competitors instead of you, traditional analytics won't tell you—they only track what happens after someone reaches your website, not the AI-powered conversations happening before they ever click.

This visibility gap represents a fundamental shift in how businesses get discovered. AI assistants have become the new front door for customer research, yet most companies are flying blind. Worse, the AI systems powering your own products might be degrading in accuracy or generating problematic responses without triggering any alerts.

The solution? A new generation of AI monitoring tools designed specifically for this challenge. Some track your brand visibility across AI platforms—essentially SEO for the AI era—while others monitor your own AI systems for performance, accuracy, and reliability. The best platforms are starting to bridge both worlds.

Here are 8 leading AI monitoring tools that help you track performance, visibility, and brand mentions across the AI landscape in 2025.

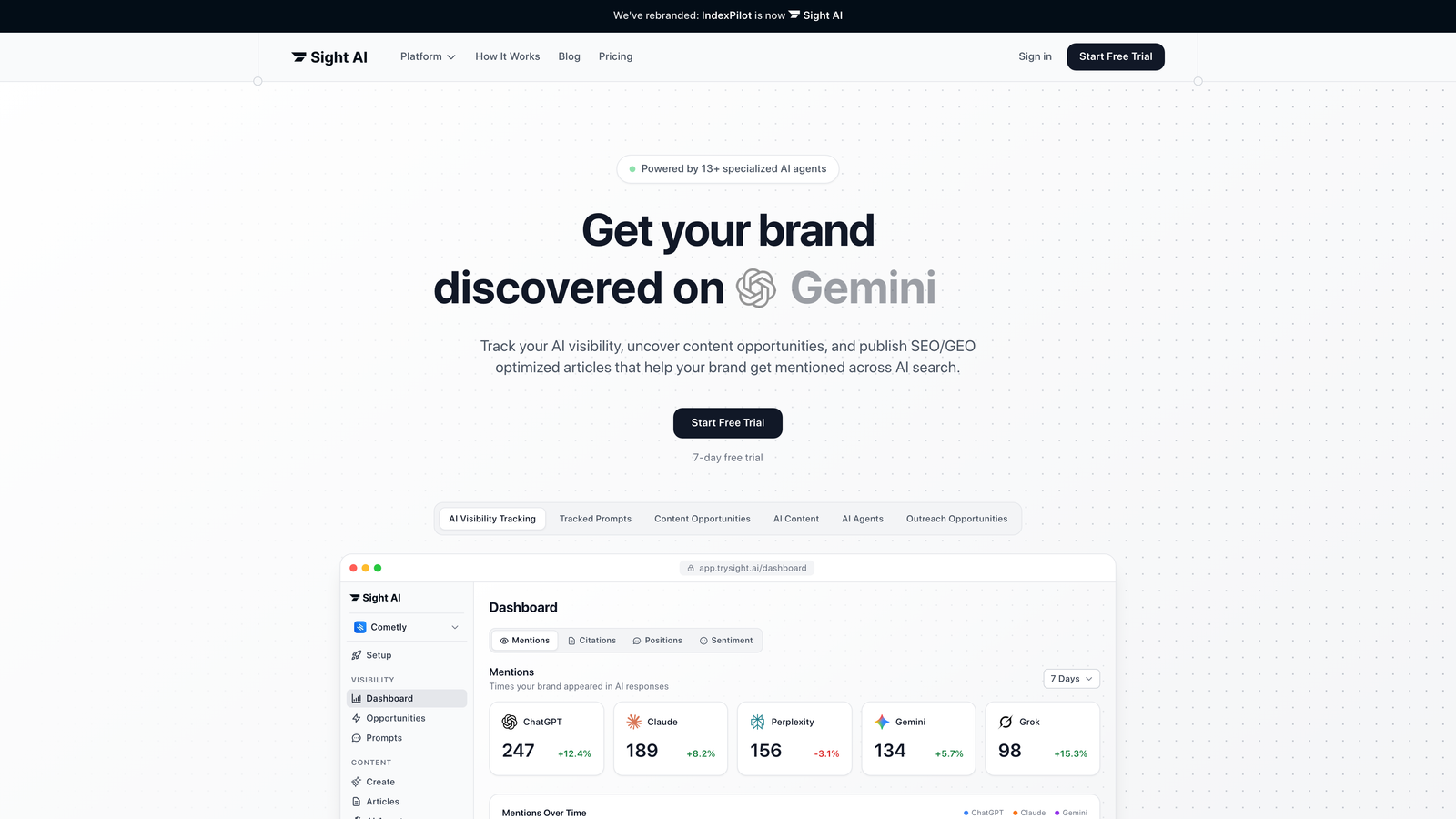

1. Sight AI

Best for: Comprehensive AI visibility tracking and brand mention monitoring across all major AI platforms

Sight AI pioneered the AI visibility monitoring category, offering the most comprehensive platform for tracking how AI models represent your brand across ChatGPT, Claude, Perplexity, and other leading AI platforms.

Where This Tool Shines

Sight AI tackles the AI invisibility problem directly. The platform continuously queries major AI models with industry-relevant questions, tracking whether your brand appears in responses, how you're positioned against competitors, and what context surrounds your mentions.

Unlike basic alert systems, Sight AI provides competitive benchmarking: you see how often competitors get mentioned versus your brand, which AI models favor which companies, and how your visibility trends over time. The platform's strength lies in proactive monitoring rather than purely reactive alerts, giving you the intelligence needed to optimize your AI presence before visibility problems impact revenue.
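To make the benchmarking idea concrete, here is a minimal Python sketch of how mention rate and share of voice could be computed once you have collected AI responses to a set of tracked queries. It is not Sight AI's implementation or API; the brand names, sample responses, and the `visibility_report` helper are hypothetical, and a real tool would also need to handle aliases, misspellings, and surrounding context.

```python
import re
from collections import Counter


def mentions(text: str, brand: str) -> bool:
    """Case-insensitive match that ignores the brand name inside longer words."""
    pattern = rf"(?<!\w){re.escape(brand)}(?!\w)"
    return re.search(pattern, text, re.IGNORECASE) is not None


def visibility_report(responses: list[str], brands: list[str]) -> dict[str, dict[str, float]]:
    """Per-brand mention rate (share of responses) and share of voice (share of all mentions)."""
    hits = Counter()
    for text in responses:
        for brand in brands:
            if mentions(text, brand):
                hits[brand] += 1
    total_hits = sum(hits.values())
    return {
        brand: {
            "mention_rate": hits[brand] / len(responses) if responses else 0.0,
            "share_of_voice": hits[brand] / total_hits if total_hits else 0.0,
        }
        for brand in brands
    }


# Hypothetical answers collected from AI assistants for one tracked query.
sample_responses = [
    "For a CRM, consider Acme or Globex.",
    "Globex is a popular choice.",
    "Globex and Initech are common picks.",
    "acme and Initech both work well.",
]
report = visibility_report(sample_responses, ["Acme", "Globex", "Initech"])
```

In this sample, Globex appears in three of four answers (a 75% mention rate), while Acme holds 2 of the 7 total brand mentions.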

Key Features & Capabilities

✅ Real-time brand mention tracking: Monitors ChatGPT, Claude, Perplexity, Gemini, and 15+ other AI platforms continuously.

✅ Competitive visibility benchmarking: Shows your share of AI mentions versus competitors with detailed positioning analysis.

✅ Sentiment analysis and context tracking: Captures not just mentions but how AI models describe your brand.

✅ Custom query monitoring: Tracks industry-specific questions your customers actually ask AI assistants.

✅ Historical trend analysis: Reveals visibility changes across AI model updates and algorithm shifts.

✅ Automated alerts: Notifies you when your brand disappears from AI responses or competitor mentions spike.

✅ Citation tracking: Identifies which sources AI models reference when mentioning your brand.
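The alerting behavior described in these bullets can be sketched as a comparison between two monitoring periods. This is a hypothetical illustration, not Sight AI's alerting logic: the `visibility_alerts` function, the mention-rate inputs, and the 1.5x spike ratio are all assumptions chosen for the example.

```python
def visibility_alerts(
    previous: dict[str, float],
    current: dict[str, float],
    own_brand: str,
    spike_ratio: float = 1.5,  # assumed threshold: flag a 50%+ jump in mention rate
) -> list[str]:
    """Compare per-brand mention rates from two periods and return alert messages."""
    alerts = []
    # Your brand was mentioned last period but not at all this period.
    if previous.get(own_brand, 0.0) > 0 and current.get(own_brand, 0.0) == 0:
        alerts.append(f"{own_brand} no longer appears in tracked AI responses")
    for brand, rate in current.items():
        if brand == own_brand:
            continue
        before = previous.get(brand, 0.0)
        # A competitor appears from nowhere, or its mention rate jumps sharply.
        if (before == 0 and rate > 0) or (before > 0 and rate / before >= spike_ratio):
            alerts.append(f"{brand} mentions spiked: {before:.0%} -> {rate:.0%}")
    return alerts


last_week = {"Acme": 0.40, "Globex": 0.30, "Initech": 0.20}
this_week = {"Acme": 0.00, "Globex": 0.60, "Initech": 0.25}
alerts = visibility_alerts(last_week, this_week, own_brand="Acme")
```

Initech's modest rise (20% to 25%) stays below the assumed spike ratio, so only the disappearance and the Globex jump produce alerts.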

Best For / Ideal Users

Sight AI targets marketing leaders, brand managers, and SEO professionals at companies where AI-driven discovery matters. If your customers research solutions by asking ChatGPT or Perplexity instead of Googling, you need visibility into those conversations.

The platform scales from startups monitoring a handful of key queries to enterprises tracking thousands of industry terms across global markets. Companies in competitive B2B software, professional services, and e-commerce categories find particular value in understanding how AI assistants position them relative to alternatives.

Pricing

Pricing starts with a free tier for basic monitoring, with paid plans beginning around $99/month for comprehensive tracking. Enterprise plans offer custom query volumes, API access, and dedicated support.

2. Arize AI

Best for: ML model performance monitoring and observability for production AI systems

Arize AI delivers enterprise-grade monitoring for machine learning models in production, focusing on performance degradation, data drift, and model accuracy tracking across your entire ML infrastructure.

Where This Tool Shines

Arize excels at catching the subtle ways production ML models degrade over time: silent failures that don't trigger obvious errors but quietly erode prediction accuracy. The platform's drift detection identifies when incoming data distributions shift away from training data, often the first warning sign before accuracy drops.

What sets Arize apart is automated root cause analysis that goes beyond simple alerts. When a model's performance degrades, the platform pinpoints which specific features or data segments are causing problems, saving hours of manual investigation.
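One common way to quantify data drift is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in training versus production. The sketch below is a generic Python illustration, not Arize's implementation; the equal-width bins and the small floor for empty bins are simplifying assumptions.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant training feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the training data; out-of-range values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids division by zero and log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = [i / 100 for i in range(100)]          # roughly uniform on [0, 1)
production = [0.5 + i / 200 for i in range(100)]  # shifted toward higher values
```

Identical distributions give a PSI of zero, while the shifted production sample scores far higher. A frequently cited rule of thumb treats values above roughly 0.2 to 0.25 as significant drift, but thresholds are best tuned per feature.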

For teams running multiple models, Arize's unified observability dashboard provides a single view for monitoring everything from recommendation engines to fraud detection systems. The platform distinguishes between normal variance and genuine problems requiring intervention, reducing alert fatigue while ensuring critical issues get immediate attention.

Key Features & Capabilities

✅ Real-time performance monitoring: Track accuracy, precision, recall, and custom metrics with configurable thresholds.

✅ Automated drift detection: Identifies feature drift, prediction drift, and data quality issues before they impact outcomes.

✅ Root cause analysis: Pinpoints which features or segments drive performance changes, not just that something went wrong.

✅ Model comparison capabilities: A/B test different versions and evaluate champion/challenger models with statistical rigor.

✅ Major platform integration: Works with SageMaker, Databricks, Vertex AI, and other ML infrastructure you're already using.

✅ Explainability features: Shows which inputs influence model predictions, critical for regulated industries.

✅ Custom metrics tracking: Monitor business KPIs beyond standard ML metrics to connect model performance with revenue impact.
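The root cause analysis bullet boils down to slicing performance by segment instead of looking only at the global average. Here is a minimal Python sketch of that idea, not Arize's API; the record fields (`region`, `prediction`, `label`) and the `worst_segments` helper are hypothetical.

```python
from collections import defaultdict


def worst_segments(records: list[dict], segment_key: str, top_n: int = 3) -> list[tuple[str, float]]:
    """Rank segments by accuracy, lowest first, so the likely culprits surface at the top."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for r in records:
        seg = r[segment_key]
        totals[seg] += 1
        correct[seg] += int(r["prediction"] == r["label"])
    accuracy = {seg: correct[seg] / totals[seg] for seg in totals}
    return sorted(accuracy.items(), key=lambda kv: kv[1])[:top_n]


records = (
    [{"region": "EU", "prediction": 1, "label": 1}] * 4
    + [{"region": "US", "prediction": 1, "label": 1}] * 3
    + [{"region": "US", "prediction": 0, "label": 1}]
    + [{"region": "APAC", "prediction": 0, "label": 1}] * 3
    + [{"region": "APAC", "prediction": 1, "label": 1}]
)
ranking = worst_segments(records, "region")
```

Overall accuracy here is 8 of 12 (about 67%), a figure that hides the fact that three of the four errors come from APAC.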

Best For / Ideal Users

Arize targets ML engineers, data scientists, and AI product teams at companies running production machine learning systems. If you've deployed models that make business-critical predictions—fraud detection, recommendation engines, pricing optimization, credit scoring—Arize ensures they stay accurate as data and business conditions evolve.

The platform suits mid-market to enterprise companies with dedicated ML teams who need sophisticated monitoring beyond basic alerting. Teams in regulated industries particularly value Arize's explainability features for compliance documentation.

Pricing

Arize offers a free tier for development and small-scale monitoring. Production plans start around $500/month with pricing scaling based on prediction volume and feature complexity.

3. Datadog AI Monitoring

Best for: Unified infrastructure and AI monitoring for teams already using Datadog

Datadog extended its infrastructure monitoring platform to cover AI workloads, offering integrated observability for both traditional infrastructure and AI systems.

Where This Tool Shines

Datadog's competitive advantage lies in unified observability—you monitor AI model performance, API latency, infrastructure costs, and application health in one platform. For teams already using Datadog for infrastructure monitoring, adding AI observability requires minimal setup and provides immediate correlation between model behavior and underlying infrastructure.

The platform excels at cost monitoring for AI workloads, tracking GPU utilization and inference costs alongside performance metrics. Datadog's distributed tracing capabilities shine when debugging complex AI pipelines, showing exactly where latency or errors occur across microservices, model serving layers, and data preprocessing steps.
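For LLM workloads, inference cost tracking usually starts from token counts multiplied by per-model prices. The sketch below shows that arithmetic in plain Python; it does not use Datadog's API, and the model names and prices in `PRICES_PER_1K` are placeholders rather than any provider's real rates.

```python
from dataclasses import dataclass


@dataclass
class InferenceCall:
    model: str
    input_tokens: int
    output_tokens: int


# Placeholder USD prices per 1,000 tokens; substitute your provider's actual rates.
PRICES_PER_1K = {
    "model-small": {"input": 0.0005, "output": 0.0015},
    "model-large": {"input": 0.005, "output": 0.015},
}


def cost_by_model(calls: list[InferenceCall]) -> dict[str, float]:
    """Sum estimated spend per model from token counts."""
    totals: dict[str, float] = {}
    for call in calls:
        price = PRICES_PER_1K[call.model]
        cost = (call.input_tokens * price["input"] + call.output_tokens * price["output"]) / 1000
        totals[call.model] = totals.get(call.model, 0.0) + cost
    return totals


calls = [
    InferenceCall("model-small", input_tokens=2000, output_tokens=1000),
    InferenceCall("model-large", input_tokens=1000, output_tokens=500),
    InferenceCall("model-large", input_tokens=3000, output_tokens=1000),
]
spend = cost_by_model(calls)
```

Output tokens are often priced higher than input tokens, which is why separate rates are tracked per direction.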

Key Features & Capabilities

✅ Integrated monitoring: Track AI models, APIs, infrastructure, and application performance in one unified platform.

✅ LLM observability: Monitor prompt performance, token usage, and response quality for language model implementations.

✅ Cost tracking: Real-time monitoring of GPU utilization and inference expenses across AI workloads.

✅ Distributed tracing: End-to-end visibility across AI pipelines from data ingestion through model serving.

✅ Anomaly detection: Machine learning-powered identification of unusual patterns and performance issues.

✅ Framework support: Pre-built dashboards for TensorFlow, PyTorch, Hugging Face, and other popular AI frameworks.

✅ Intelligent alerting: Customizable thresholds with smart alert grouping to reduce notification fatigue.
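Production anomaly detection is more sophisticated than this, but a rolling z-score illustrates the basic idea of flagging values that break from recent history. This is a generic Python sketch, not Datadog's algorithm; the window size, the 3-sigma threshold, and the latency series are assumptions.

```python
import statistics


def zscore_anomalies(series: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Indices whose value sits more than `threshold` standard deviations from the trailing window mean."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: treat any change as anomalous.
            if series[i] != mean:
                anomalies.append(i)
            continue
        if abs(series[i] - mean) / stdev > threshold:
            anomalies.append(i)
    return anomalies


latency_ms = [120, 118, 125, 122, 119, 121, 410, 123, 120]
flagged = zscore_anomalies(latency_ms)
```

Once the spike enters the trailing window it inflates the standard deviation for the next few points; median-based variants are one common way to make the check more robust.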

Best For / Ideal Users

Datadog AI Monitoring suits engineering teams already invested in the Datadog ecosystem who want to extend monitoring to AI workloads. The platform works best for companies running AI as part of larger application stacks where correlating AI performance with infrastructure health matters.

DevOps teams, SREs, and platform engineers find particular value in the unified approach. If your organization already relies on Datadog for infrastructure observability, adding AI monitoring creates a single source of truth for all system metrics.

Pricing

Datadog pricing follows a usage-based model starting around $15 per host per month for infrastructure monitoring, with AI monitoring features available in higher-tier plans. Enterprise pricing accommodates large-scale deployments with custom integrations and dedicated support.

4. WhyLabs

Best for: AI observability with privacy-first architecture and data profiling

WhyLabs provides AI monitoring focused on data quality and model behavior without requiring raw data access, making it ideal for privacy-sensitive industries.

Where This Tool Shines

WhyLabs differentiates through privacy-preserving monitoring that never requires access to raw data or PII. The platform uses statistical profiling to monitor data quality and model behavior, making it viable for healthcare, finance, and other regulated industries where data governance restricts traditional monitoring approaches.

The platform excels at detecting data quality issues before they impact model performance—missing values, type mismatches, distribution shifts, and schema changes all trigger alerts. WhyLabs' lightweight architecture monitors models without adding latency to production systems, and its open-source foundation (whylogs) provides transparency into monitoring methodologies that compliance teams appreciate.
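The privacy-preserving idea is that only aggregate statistics leave your environment. The Python sketch below shows a simplified, mergeable column profile and a data quality check built on it. It is not the whylogs API (which tracks richer, approximate distribution summaries); the `ColumnProfile` class, thresholds, and sample batches are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ColumnProfile:
    """Aggregate statistics for one column; raw values are never stored."""
    count: int = 0
    nulls: int = 0
    numeric_count: int = 0
    total: float = 0.0
    minimum: float = math.inf
    maximum: float = -math.inf
    types: set = field(default_factory=set)

    def update(self, value) -> None:
        self.count += 1
        if value is None:
            self.nulls += 1
            return
        self.types.add(type(value).__name__)
        if isinstance(value, (int, float)) and not isinstance(value, bool):
            self.numeric_count += 1
            self.total += value
            self.minimum = min(self.minimum, value)
            self.maximum = max(self.maximum, value)

    def merge(self, other: "ColumnProfile") -> "ColumnProfile":
        # Profiles from separate batches or hosts combine without revisiting raw data.
        return ColumnProfile(
            count=self.count + other.count,
            nulls=self.nulls + other.nulls,
            numeric_count=self.numeric_count + other.numeric_count,
            total=self.total + other.total,
            minimum=min(self.minimum, other.minimum),
            maximum=max(self.maximum, other.maximum),
            types=self.types | other.types,
        )

    @property
    def null_ratio(self) -> float:
        return self.nulls / self.count if self.count else 0.0

    @property
    def mean(self) -> float:
        return self.total / self.numeric_count if self.numeric_count else math.nan


def profile(values) -> ColumnProfile:
    p = ColumnProfile()
    for v in values:
        p.update(v)
    return p


def quality_issues(p: ColumnProfile, max_null_ratio: float = 0.05,
                   expected_types: frozenset = frozenset({"int", "float"})) -> list[str]:
    """Flag missing values and type mismatches using only the profile."""
    issues = []
    if p.null_ratio > max_null_ratio:
        issues.append(f"null ratio {p.null_ratio:.0%} exceeds {max_null_ratio:.0%}")
    unexpected = p.types - expected_types
    if unexpected:
        issues.append(f"unexpected types: {sorted(unexpected)}")
    return issues


monday = profile([34, 41, 29, 52])
tuesday = profile([38, None, "n/a", 45])  # a missing value and a string sneak in
combined = monday.merge(tuesday)
```

Tuesday's batch triggers both a null-ratio and a type-mismatch issue, and the merged profile still reports count, range, and mean without ever holding the original rows.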

Key Features & Capabilities

✅ Privacy-first monitoring: Uses statistical profiles instead of raw data access, enabling monitoring in regulated environments.

✅ Comprehensive data quality detection: Identifies missing values, type issues, and schema drift automatically.

✅ Model performance tracking: Monitors model behavior without requiring ground truth labels.

✅ Lightweight integration: Integrates with existing ML pipelines through the whylogs library with minimal performance overhead.

✅ Automated anomaly detection: Flags both data quality and model behavior anomalies in real time.

✅ Customizable monitoring policies: Aligns with business rules and compliance requirements specific to your industry.

✅ Open-source foundation: Provides transparency and extensibility through the whylogs framework.

Best For / Ideal Users

WhyLabs targets data scientists and ML engineers in regulated industries—healthcare, financial services, insurance—where privacy constraints complicate traditional monitoring. The platform suits companies that need robust AI observability but can't share raw data with third-party monitoring services.

Teams valuing open-source transparency and customizable monitoring policies find WhyLabs particularly appealing. If your organization faces strict data governance requirements or operates in environments where PII exposure creates compliance risks, WhyLabs provides monitoring capabilities without compromising data security.

Pricing

WhyLabs offers a free tier for individual data scientists and small teams. Professional plans start around $500/month for production monitoring with advanced features. Enterprise pricing accommodates high-volume deployments and includes dedicated support and custom integrations.

5. Fiddler AI

Best for: Explainable AI monitoring with focus on model transparency and fairness

Fiddler AI combines performance monitoring with explainability features, helping teams understand not just when models fail but why.

Where This Tool Shines

Fiddler's explainability capabilities set it apart in the AI monitoring landscape. When model performance degrades, Fiddler doesn't just alert you—it explains which features are driving problematic predictions and how model behavior has changed over time.

The platform excels at fairness monitoring, detecting bias across demographic groups and helping teams ensure AI systems treat all users equitably. This becomes critical when models make decisions affecting lending, hiring, insurance pricing, or healthcare—contexts where bias can have serious legal and ethical consequences.
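One widely used fairness check compares approval rates across groups. Under the "four-fifths rule" of thumb from US employment guidelines, a group whose selection rate falls below 80% of the most-favored group's rate is flagged for review. The sketch below computes that ratio in plain Python. It is not Fiddler's implementation, it covers only one of many fairness metrics, and the group labels and counts are hypothetical.

```python
def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = {}
    approved: dict[str, int] = {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact(decisions: list[tuple[str, bool]], threshold: float = 0.8):
    """Each group's rate relative to the most-favored group, plus groups below the threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    ratios = {g: (r / best if best else 1.0) for g, r in rates.items()}
    flagged = [g for g, ratio in ratios.items() if ratio < threshold]
    return ratios, flagged


decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 36 + [("group_b", False)] * 64
)
ratios, flagged = disparate_impact(decisions)
```

Group B's 36% approval rate is 0.6 times group A's 60%, well under the 0.8 threshold, so it is flagged.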

Fiddler's what-if analysis tools let you simulate how models would respond to different inputs, which is invaluable for testing edge cases before they occur in production. You can adjust feature values and immediately see how predictions change, helping you understand model behavior boundaries and identify potential failure modes proactively.
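At its core, what-if analysis holds every input fixed except one and sweeps that feature across a range of values. The Python sketch below uses a made-up logistic credit model purely for illustration; `toy_credit_model`, its coefficients, and the feature names are hypothetical and unrelated to Fiddler's tooling.

```python
import math


def toy_credit_model(features: dict) -> float:
    """Hypothetical logistic model returning an approval probability."""
    z = (-4.0 + 0.00005 * features["income"]
         - 3.0 * features["debt_ratio"]
         + 0.5 * features["years_employed"])
    return 1 / (1 + math.exp(-z))


def what_if(model, base: dict, feature: str, values) -> list[tuple[float, float]]:
    """Score copies of `base` with one feature varied; the base record is left untouched."""
    results = []
    for v in values:
        scenario = {**base, feature: v}
        results.append((v, model(scenario)))
    return results


base = {"income": 60000, "debt_ratio": 0.4, "years_employed": 3}
sweep = what_if(toy_credit_model, base, "debt_ratio", [0.1, 0.4, 0.7])
```

Here the approval probability crosses 0.5 somewhere between a debt ratio of 0.1 and 0.4, the kind of decision boundary that what-if analysis helps surface.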

Key Features & Capabilities

✅ Model explainability: Shows feature importance and individual prediction explanations in human-readable format.

✅ Fairness monitoring: Detects bias across demographic groups and protected attributes with automated alerts.

✅ Performance tracking: Monitors accuracy, precision, and recall, with automated drift detection.

✅ What-if analysis: Simulates model behavior under different scenarios for proactive testing.

✅ Model comparison: Evaluates multiple model versions side-by-side for validation.

✅ Audit trail documentation: Maintains comprehensive logs for compliance and regulatory requirements.

✅ Platform integration: Connects with major ML platforms and model serving infrastructure.

Best For / Ideal Users

Fiddler targets ML teams in regulated industries—banking, insurance, healthcare, lending—where model explainability isn't optional. When auditors ask why your model denied a loan application or priced a policy a certain way, Fiddler provides the documentation and explanations you need.

Data scientists and ML engineers who need to justify model decisions to stakeholders, auditors, or regulators find Fiddler's transparency features essential. The platform suits companies where fairness and bias detection are critical business requirements, not just nice-to-have features.

Risk management teams appreciate Fiddler's ability to document model behavior over time, creating audit trails that satisfy compliance requirements while maintaining production monitoring capabilities.

Pricing

Fiddler offers custom enterprise pricing based on model volume and feature requirements. Contact their sales team for specific pricing tailored to your deployment scale and compliance needs.

6. Evidently AI

Best for: Open-source ML monitoring with customizable metrics and self-hosted deployment

Evidently AI provides open-source ML monitoring tools with an optional cloud platform, giving teams flexibility to self-host or use managed services.

Where This Tool Shines

Evidently's open-source foundation gives teams complete control over monitoring implementation and customization. Unlike black-box commercial platforms, you can inspect monitoring logic, customize metrics to match your specific use case, and extend functionality through code.

The platform excels at generating interactive monitoring reports that data scientists can share with stakeholders—visual dashboards showing data drift, model performance, and prediction distributions without requiring technical expertise to interpret. These HTML reports work beautifully for asynchronous communication, letting you send comprehensive monitoring insights via email or Slack without requiring dashboard access.

Evidently's test suite approach lets you define monitoring as code, integrating quality checks directly into CI/CD pipelines. You write tests that specify acceptable drift thresholds, performance requirements, and data quality standards, then run them automatically before deploying model updates. This catches issues during development rather than in production.
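Monitoring as code can be as simple as a list of named checks with thresholds that a CI job evaluates before deployment. The sketch below is framework-agnostic Python rather than Evidently's own API, whose interface has changed across versions. The metric names, values, and thresholds are hypothetical; in practice the values would come from a drift or quality report.

```python
from dataclasses import dataclass


@dataclass
class Check:
    name: str
    value: float
    threshold: float
    higher_is_better: bool

    def passed(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold


def gate(checks: list[Check]) -> int:
    """Print each result and return a process exit code: 0 if all checks pass, 1 otherwise."""
    for c in checks:
        status = "PASS" if c.passed() else "FAIL"
        print(f"{status} {c.name}: {c.value} (threshold {c.threshold})")
    return 0 if all(c.passed() for c in checks) else 1


# Hypothetical metrics computed for a candidate model on a recent data window.
checks = [
    Check("accuracy", value=0.91, threshold=0.90, higher_is_better=True),
    Check("share_of_drifted_columns", value=0.35, threshold=0.30, higher_is_better=False),
    Check("missing_value_ratio", value=0.01, threshold=0.02, higher_is_better=False),
]
exit_code = gate(checks)
```

A CI step would end with `sys.exit(gate(checks))`, so a failing check (here, the drifted-column share) blocks the deployment.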

Key Features & Capabilities

✅ Open-source monitoring framework: Full code transparency with ability to customize any monitoring logic.

✅ Interactive HTML reports: Shareable visual dashboards for non-technical stakeholders.

✅ Test suite approach: Define monitoring as code for CI/CD pipeline integration.

✅ Comprehensive drift detection: Track data drift, prediction drift, and model performance changes.

✅ Custom metric definitions: Create monitoring metrics matching your specific business requirements.

✅ Flexible deployment options: Self-host for complete control or use managed cloud platform.

Best For / Ideal Users

Evidently suits ML engineers and data scientists who value open-source transparency and want customization flexibility. Teams with strong engineering capabilities who prefer self-hosted solutions find Evidently ideal.

The platform works particularly well for companies with unique monitoring requirements that commercial platforms don't address out-of-the-box. If your use case demands custom drift metrics, specialized performance measurements, or integration with proprietary systems, Evidently's open-source nature makes these modifications straightforward.

Organizations where data governance requires on-premise deployment appreciate Evidently's self-hosted option. You maintain complete control over where monitoring data lives and how it's processed, critical for regulated industries or companies with strict data residency requirements.

Pricing

Evidently's open-source framework is completely free for self-hosted deployment. The managed cloud platform offers a free tier for small teams, with paid plans starting around $100/month for production monitoring and advanced features.

7. New Relic AI Monitoring

Best for: APM-integrated AI monitoring for teams using New Relic's observability platform

New Relic extended its application performance monitoring platform to cover AI workloads, offering integrated observability for modern AI-powered applications.

Where This Tool Shines

New Relic's strength lies in correlating AI performance with application behavior and user experience. The platform automatically traces AI model calls through your application stack, showing how model latency impacts page load times or API response times.

For teams running AI-powered features within larger applications, New Relic connects the dots between model performance and business metrics like conversion rates or user engagement. The platform's anomaly detection applies across both traditional application metrics and AI-specific measurements, using the same alerting infrastructure you've already configured.

New Relic's distributed tracing shines when debugging complex AI pipelines, visualizing exactly how requests flow through preprocessing, model inference, and post-processing steps. This visibility proves invaluable when latency spikes or errors occur—you can pinpoint whether issues originate in your AI model, data preprocessing, or downstream services.
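Pinpointing where latency originates comes down to subtracting each span's child time from its own duration. The Python sketch below illustrates that calculation on a hand-written trace. It is not New Relic's data model or API: span names stand in for the span and parent IDs real tracers use, and child spans are assumed not to overlap.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    start_ms: float
    end_ms: float
    parent: Optional[str] = None


def self_times(spans: list[Span]) -> dict[str, float]:
    """Time spent in each span excluding its direct children (children assumed sequential)."""
    duration = {s.name: s.end_ms - s.start_ms for s in spans}
    child_total = {s.name: 0.0 for s in spans}
    for s in spans:
        if s.parent is not None:
            child_total[s.parent] += duration[s.name]
    return {name: duration[name] - child_total[name] for name in duration}


trace = [
    Span("http_request", 0, 900),
    Span("preprocess", 10, 60, parent="http_request"),
    Span("model_inference", 60, 820, parent="http_request"),
    Span("postprocess", 820, 880, parent="http_request"),
]
breakdown = self_times(trace)
bottleneck = max(breakdown, key=breakdown.get)
```

Of the 900 ms request, 760 ms is spent in model inference and only 30 ms in the request handler itself, which points the investigation at the model rather than the surrounding services.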

Key Features & Capabilities

✅ Integrated AI and application monitoring: Track AI model performance alongside traditional APM metrics in a unified observability platform.

✅ Distributed tracing: Visualize AI model calls within application request flows, showing exactly how inference impacts overall response times.

✅ LLM monitoring: Track prompt performance, token costs, and response quality for large language model integrations.

✅ AI-powered anomaly detection: Automatically identify unusual patterns across all metrics using machine learning algorithms.

✅ Custom dashboards: Correlate AI performance with business KPIs, creating views that matter to your specific use case.

✅ Intelligent alerting: Real-time alerts with correlation and noise reduction, preventing alert fatigue while catching genuine issues.

✅ Framework integration: Connect with popular AI frameworks and model serving platforms through pre-built integrations.

Best For / Ideal Users

New Relic AI Monitoring targets engineering teams already using New Relic for APM who want to extend observability to AI features. The platform suits companies where AI is embedded within larger applications rather than standalone systems.

DevOps teams, SREs, and full-stack engineers who need to understand how AI performance impacts overall application health find New Relic's integrated approach valuable. If you're already paying for New Relic and adding AI capabilities to your product, this becomes the natural monitoring choice.

Pricing

New Relic offers a free tier with 100GB of data ingest per month. Paid plans start around $99/month per user with usage-based pricing for data ingestion and retention. AI monitoring capabilities are included in standard plans.

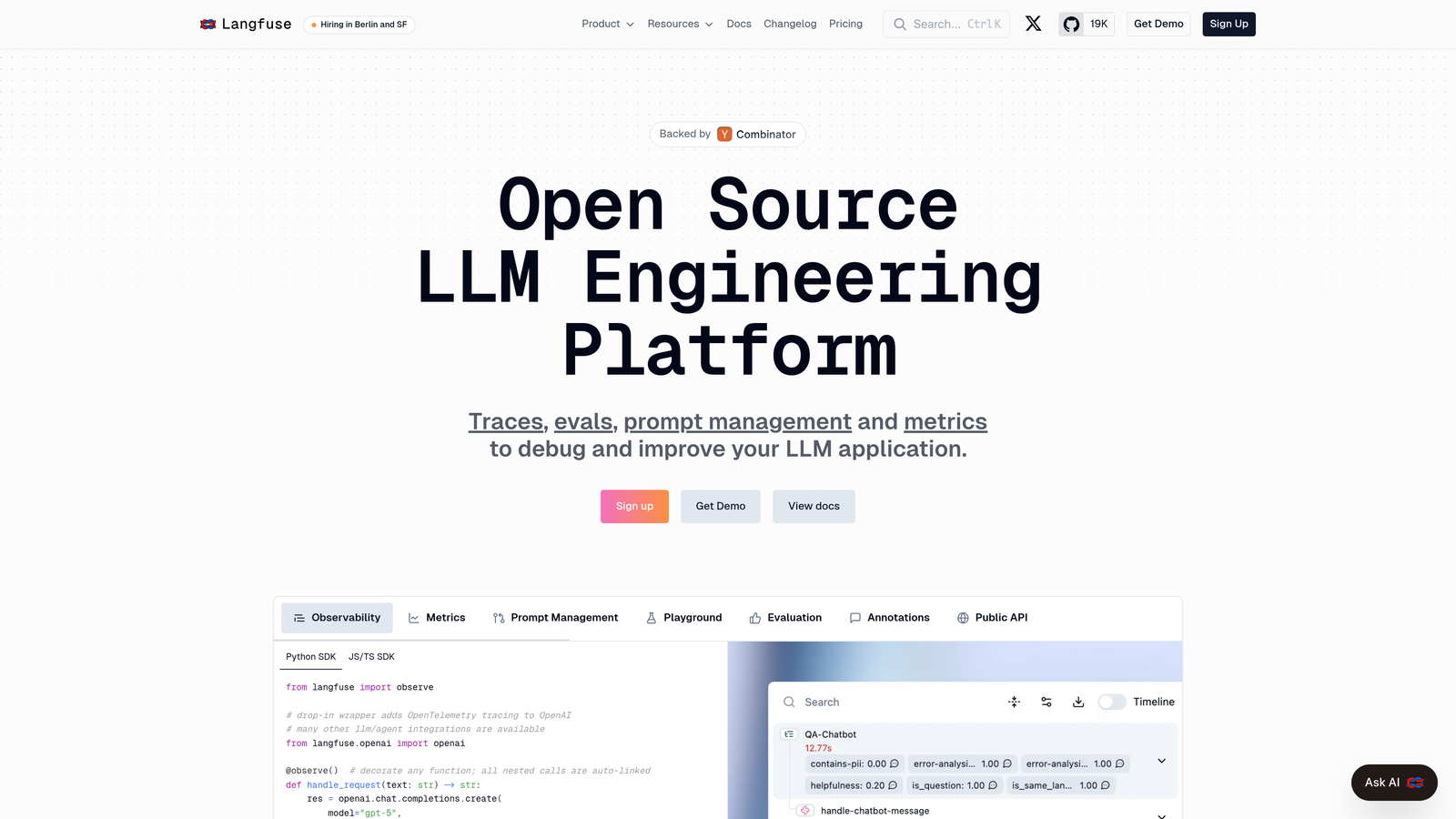

8. Langfuse

Best for: LLM application monitoring and prompt engineering optimization

Langfuse specializes in monitoring LLM-powered applications, providing detailed observability for prompt performance, token usage, and response quality across your AI product stack.

Where This Tool Shines

Langfuse solves the specific observability challenges that emerge when you build products powered by large language models. The platform captures every LLM interaction (prompts, responses, token counts, latency) and organizes them into traceable sessions that show exactly how your AI features perform in production.

Unlike general-purpose monitoring tools, Langfuse understands the nuances of LLM applications: tracking prompt versions, measuring response quality, monitoring token costs, and identifying which prompt variations deliver the best results. The platform's prompt management capabilities let you version and test different prompts systematically, turning prompt engineering from an art into a data-driven discipline.
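Comparing prompt versions is essentially an aggregation over logged generations. The Python sketch below groups hypothetical generation records by prompt version and summarizes latency, token usage, and user feedback. It does not use the Langfuse SDK, and the `Generation` fields and the 0/1 feedback score are assumptions about what you might export.

```python
import statistics
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Generation:
    prompt_version: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    user_score: Optional[int] = None  # assumed thumbs up (1) / down (0); None if not rated


def compare_versions(generations: list[Generation]) -> dict[str, dict]:
    """Per-version call count, mean latency, mean total tokens, and mean user score."""
    grouped: dict[str, list[Generation]] = defaultdict(list)
    for g in generations:
        grouped[g.prompt_version].append(g)
    summary = {}
    for version, gens in grouped.items():
        scores = [g.user_score for g in gens if g.user_score is not None]
        summary[version] = {
            "calls": len(gens),
            "avg_latency_ms": statistics.fmean([g.latency_ms for g in gens]),
            "avg_total_tokens": statistics.fmean([g.input_tokens + g.output_tokens for g in gens]),
            "avg_user_score": statistics.fmean(scores) if scores else None,
        }
    return summary


logs = [
    Generation("v1", 400, 200, 1200, user_score=0),
    Generation("v1", 420, 180, 1100, user_score=1),
    Generation("v2", 300, 150, 900, user_score=1),
    Generation("v2", 310, 140, 950),
]
summary = compare_versions(logs)
```

With real traffic you would want far more rated calls per version before drawing conclusions; two samples per version here only illustrate the shape of the comparison.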

What sets Langfuse apart is its focus on the complete LLM development workflow. You can trace complex chains where one LLM call feeds into another, monitor retrieval-augmented generation (RAG) pipelines showing which documents get retrieved, and analyze user feedback to understand which AI responses actually satisfy users. This end-to-end visibility helps teams optimize not just individual prompts but entire AI interaction flows.

Key Features & Capabilities

✅ Comprehensive LLM tracing: Captures every prompt, response, token count, and latency metric across your application.

✅ Prompt versioning and management: Track different prompt variations, compare performance, and deploy optimized versions systematically.

✅ Token cost tracking: Monitor spending across different models and identify expensive queries that need optimization.

✅ User feedback integration: Collect and analyze user ratings on AI responses to measure real-world quality.

✅ RAG pipeline observability: Track document retrieval, context injection, and how retrieved information impacts responses.

✅ Multi-model support: Works with OpenAI, Anthropic, Cohere, and other major LLM providers in a unified interface.

✅ Session-based tracing: Group related LLM calls into sessions showing complete user interaction flows.

Best For / Ideal Users

Langfuse targets product teams, AI engineers, and developers building LLM-powered features into their applications. If you're shipping AI chatbots, content generation tools, AI assistants, or any product where LLM quality directly impacts user experience, you need this level of observability. The platform suits startups iterating rapidly on AI features as well as established companies scaling LLM applications to millions of users.

Pricing

Langfuse offers a generous free tier for development and small-scale production use. Paid plans start around $99/month for growing teams with higher usage volumes. Enterprise plans provide dedicated infrastructure, custom integrations, and SLA guarantees.

Making the Right Choice

The right AI monitoring tool depends entirely on what you're actually trying to monitor. If your primary concern is brand visibility across AI platforms—whether ChatGPT mentions you when customers ask about solutions in your space—Sight AI delivers the most comprehensive tracking with competitive benchmarking that shows exactly where you stand. For teams running production ML models who need to catch performance degradation before it impacts business outcomes, Arize AI and Fiddler AI offer the depth of observability required for mission-critical systems.

Teams already invested in broader observability platforms should consider Datadog or New Relic, which integrate AI monitoring into existing infrastructure tracking. If you're building LLM-powered applications and need detailed prompt performance analysis, Langfuse provides specialized capabilities that general-purpose tools miss. For privacy-sensitive industries or teams valuing open-source transparency, WhyLabs and Evidently AI offer monitoring without compromising data governance requirements.

The AI visibility gap won't close on its own—every day your brand goes unmonitored in AI responses represents missed opportunities and potential misinformation spreading unchecked. Whether you're tracking external brand mentions or internal model performance, the cost of not monitoring far exceeds the investment in the right tools. Start tracking your AI visibility today and gain the intelligence needed to optimize your presence across the AI platforms reshaping how customers discover solutions.